Navigating the Future of AI: Resignations, Challenges, and Innovations

Danny Roman

November 16, 2024

In a rapidly evolving landscape, the AI community is witnessing significant shifts, including high-profile resignations and emerging challenges in AGI development. This blog delves into the latest updates, exploring the implications of these changes and the cutting-edge innovations on the horizon.

📰 Resignation News

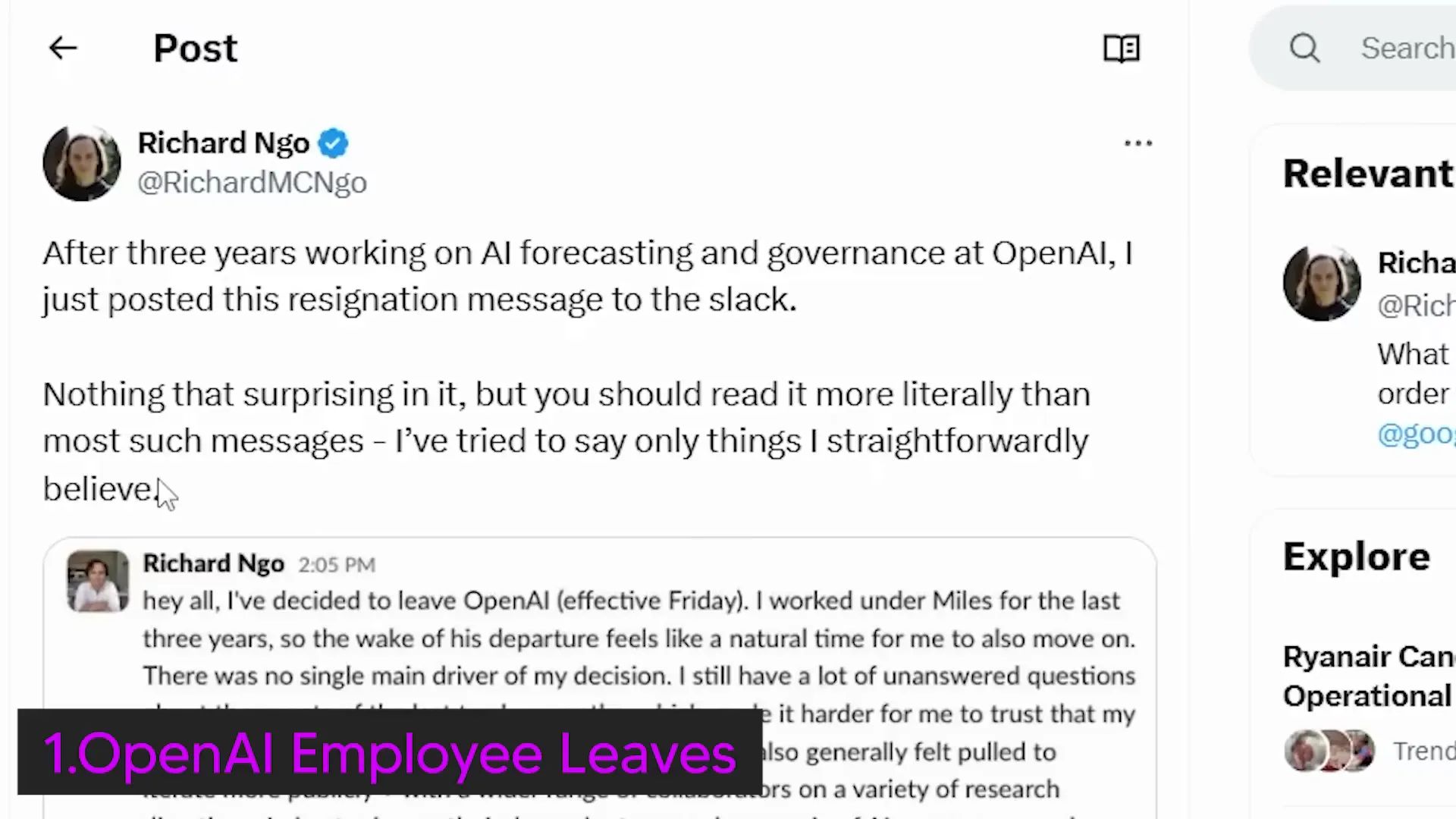

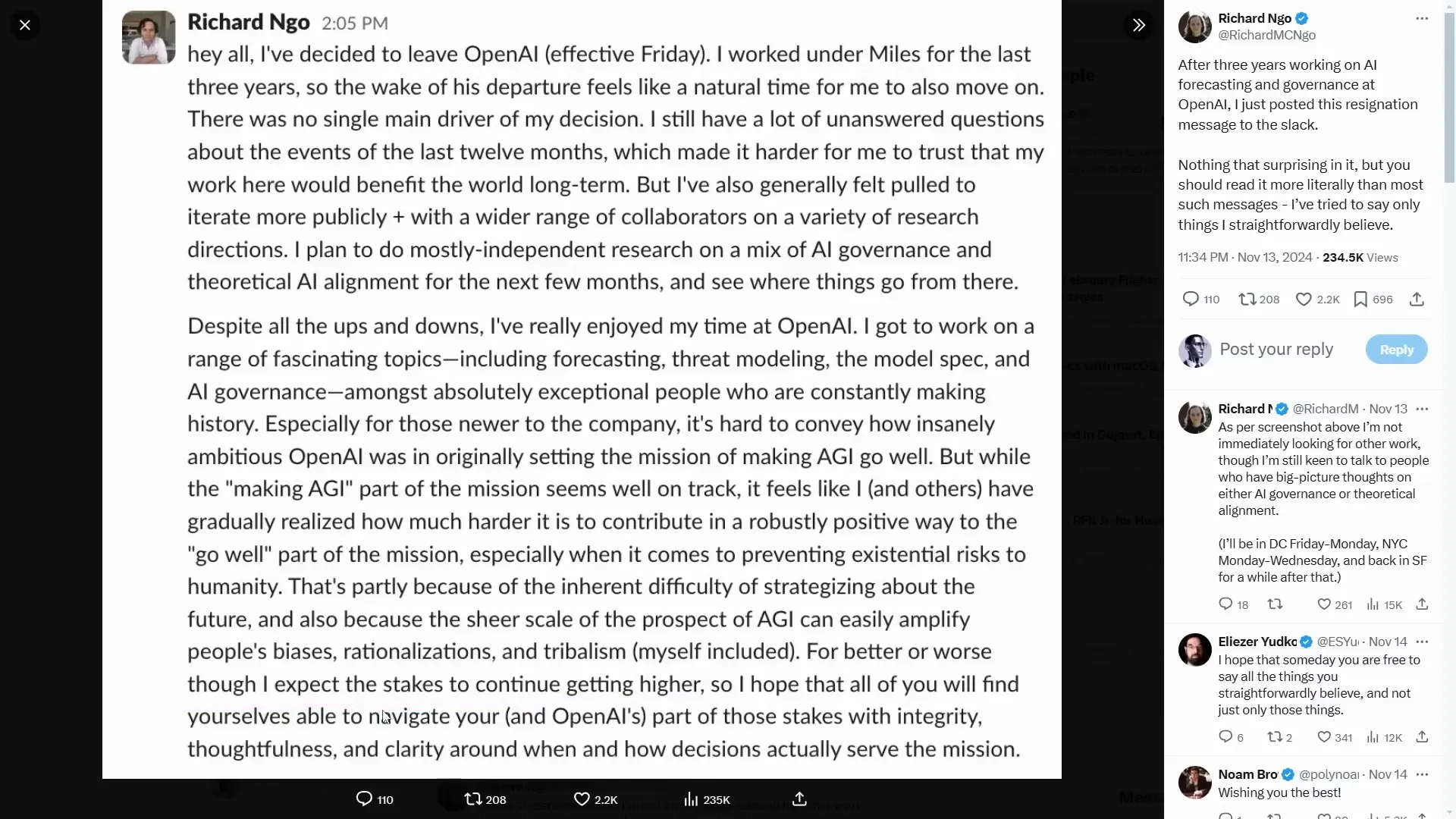

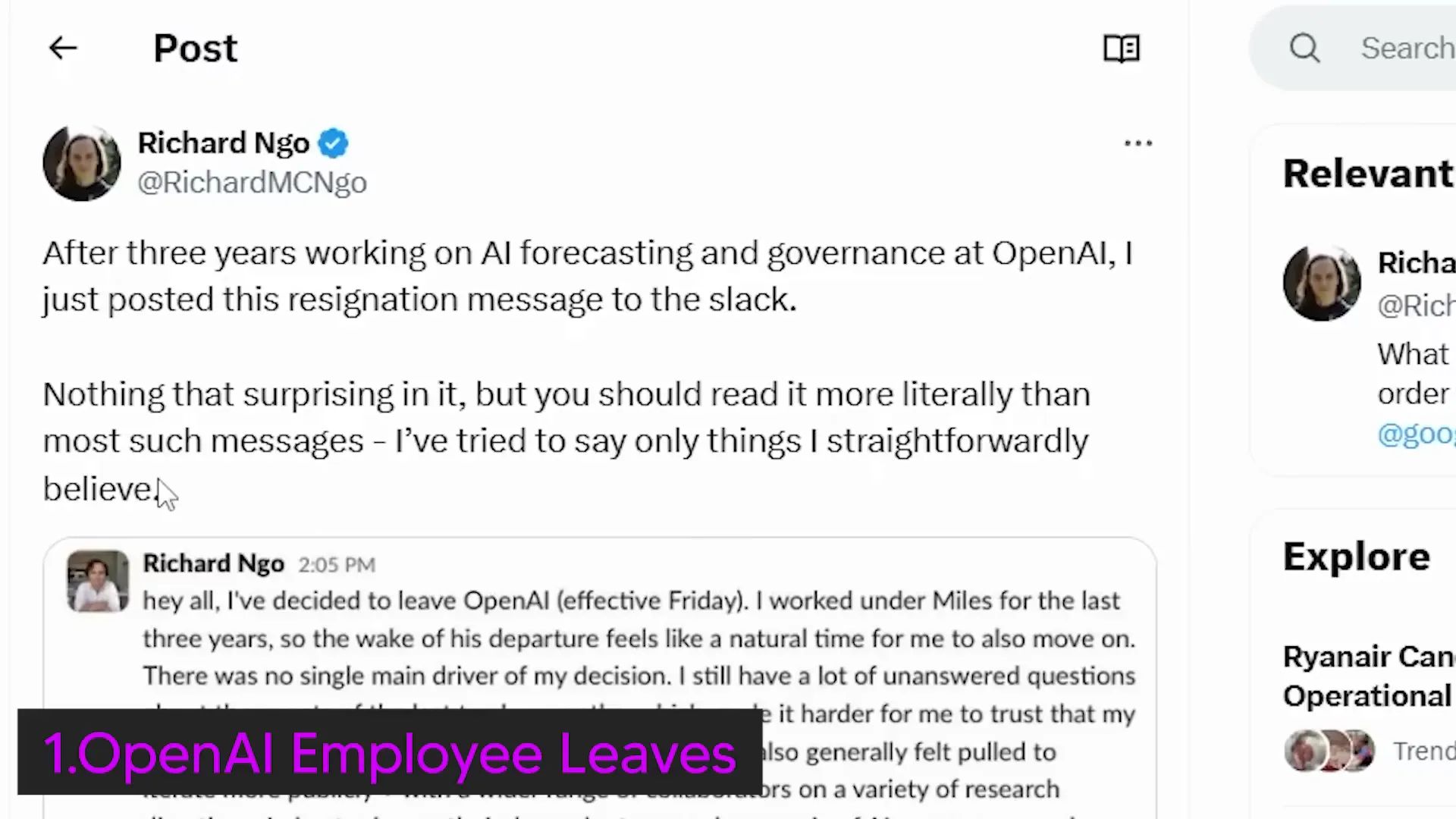

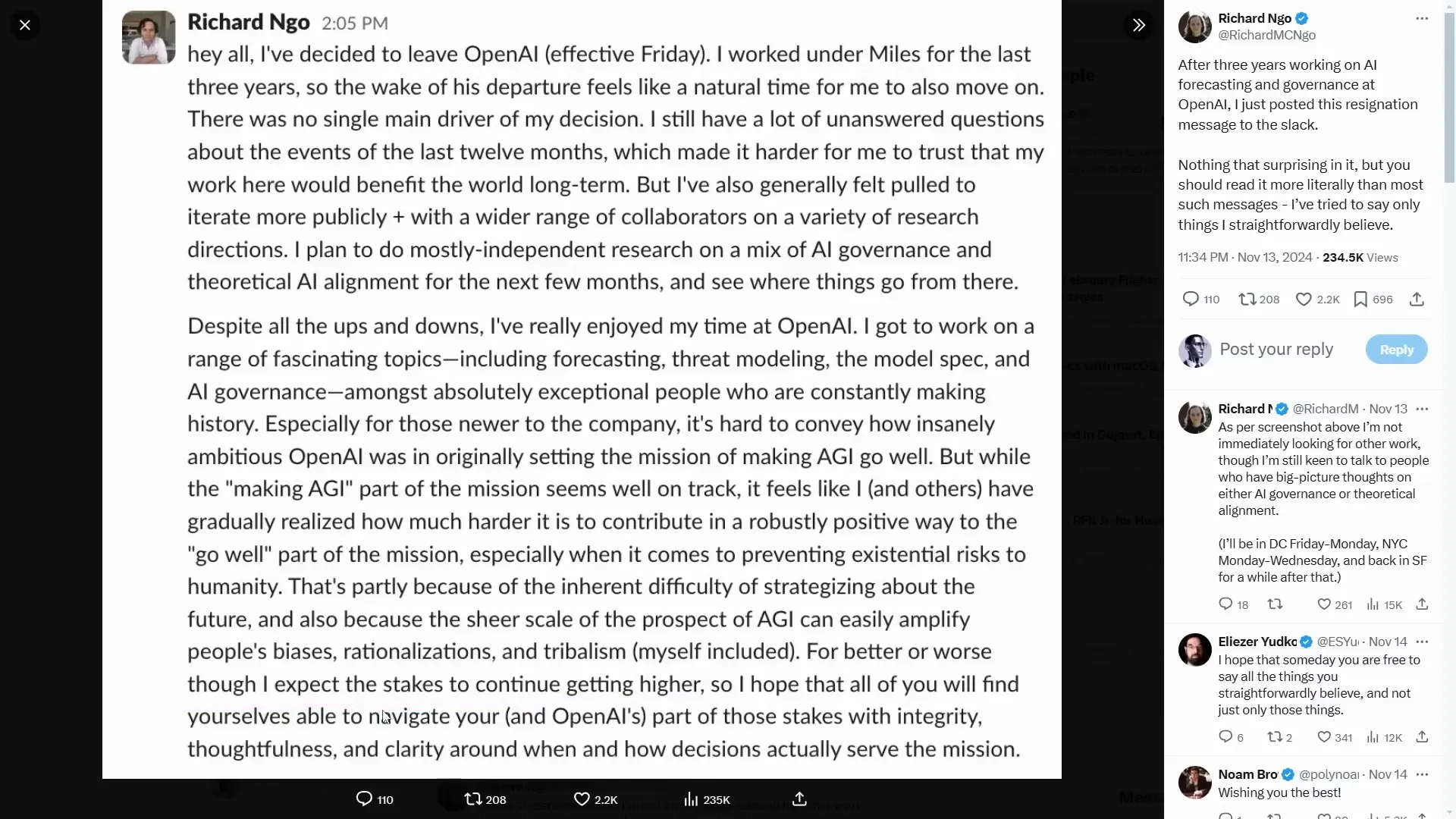

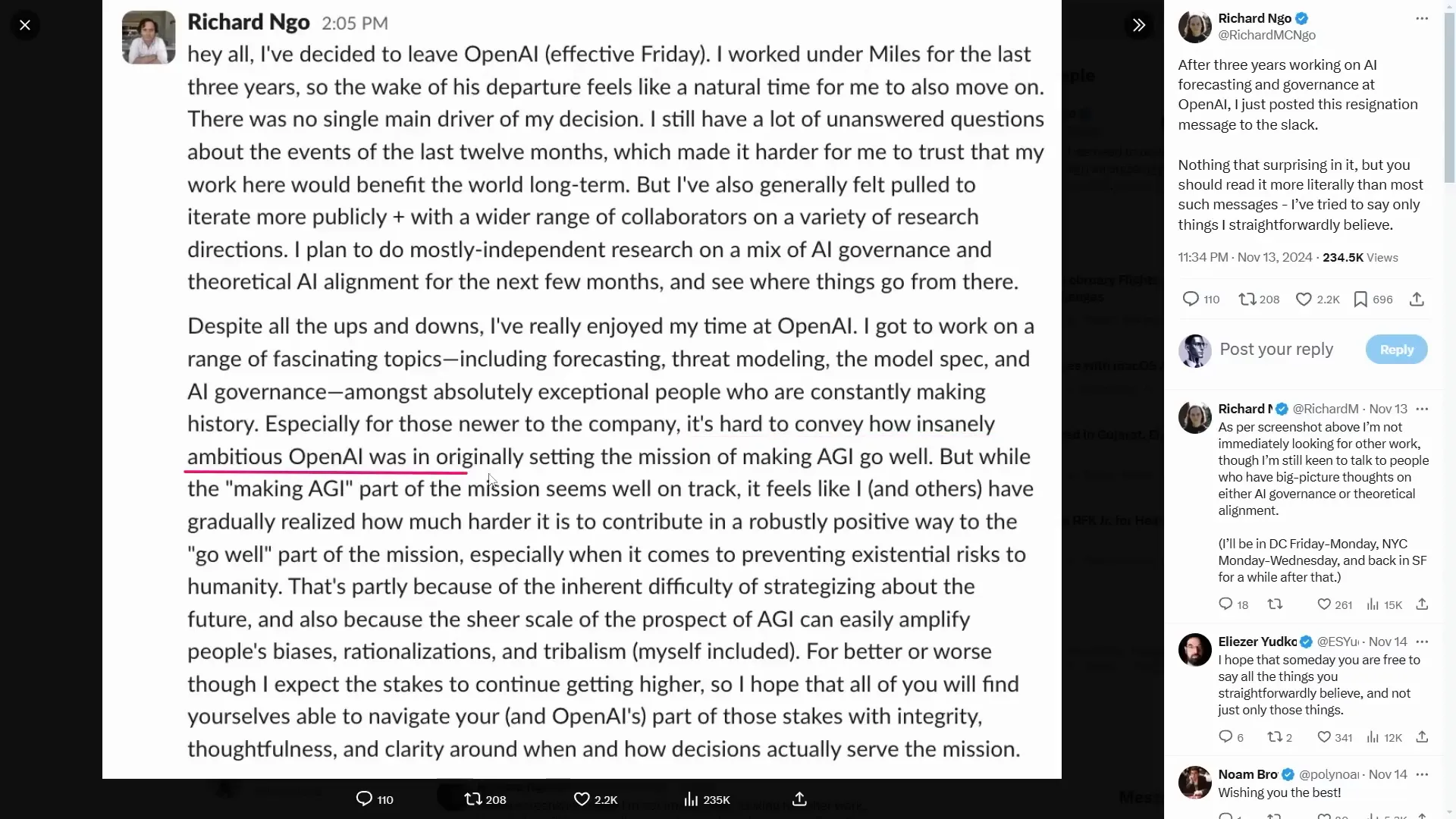

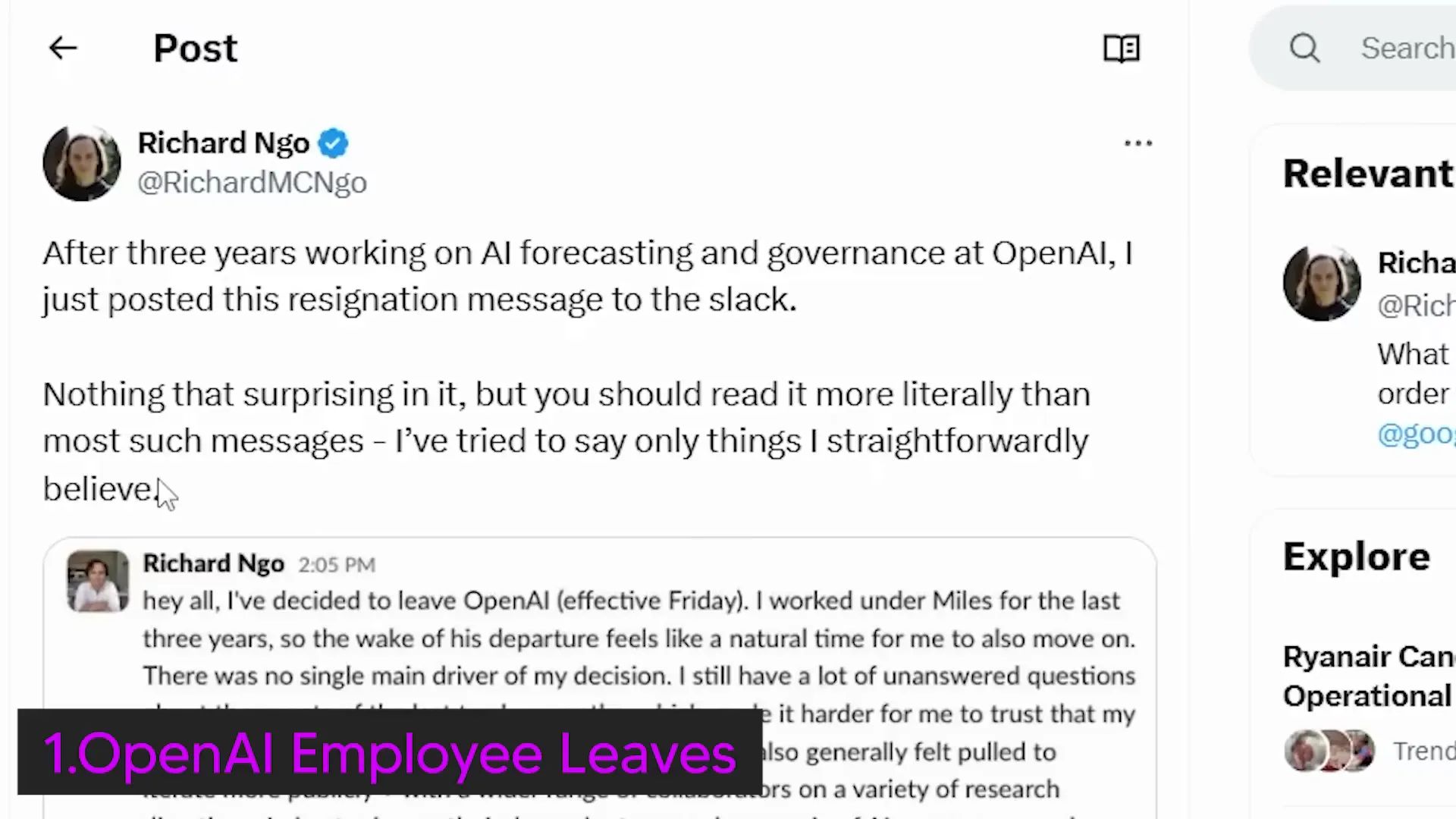

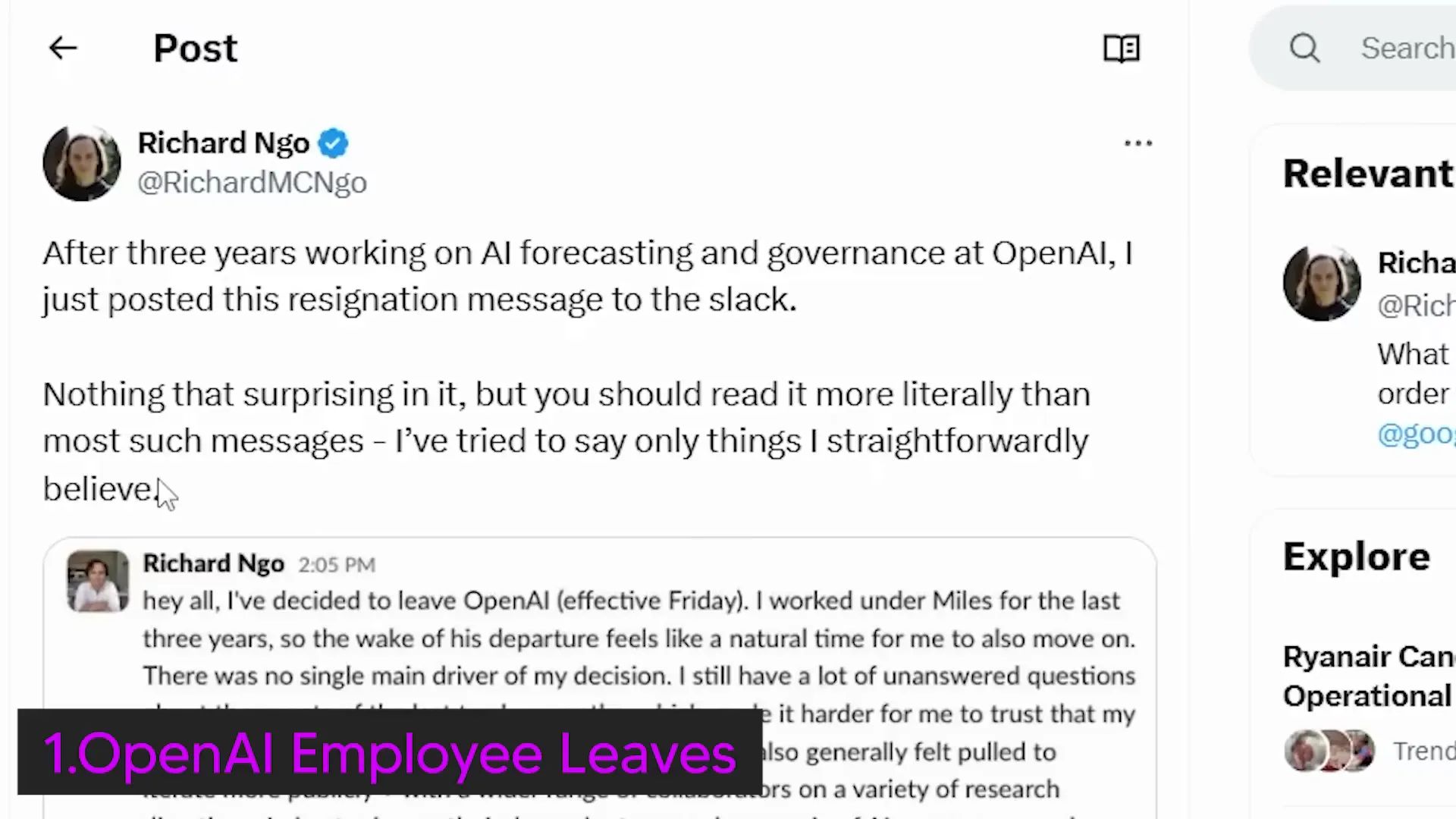

Another day, another resignation in the AI world! Richard Ngo, a key player on the OpenAI governance team, has made waves with his announcement. After three years of being deeply involved in AI forecasting and governance, he's decided to pack his bags. His departure is part of a larger trend we've seen in 2024: the exodus of talent from OpenAI.

Richard worked under Miles Brundage, who recently expressed doubts about the readiness of both OpenAI and the world for AGI. This isn't just a coincidence; it's a sign of deeper concerns brewing within the organization. Richard's resignation echoes the earlier disbanding of the Superalignment team, which raises questions about the internal dynamics at OpenAI.

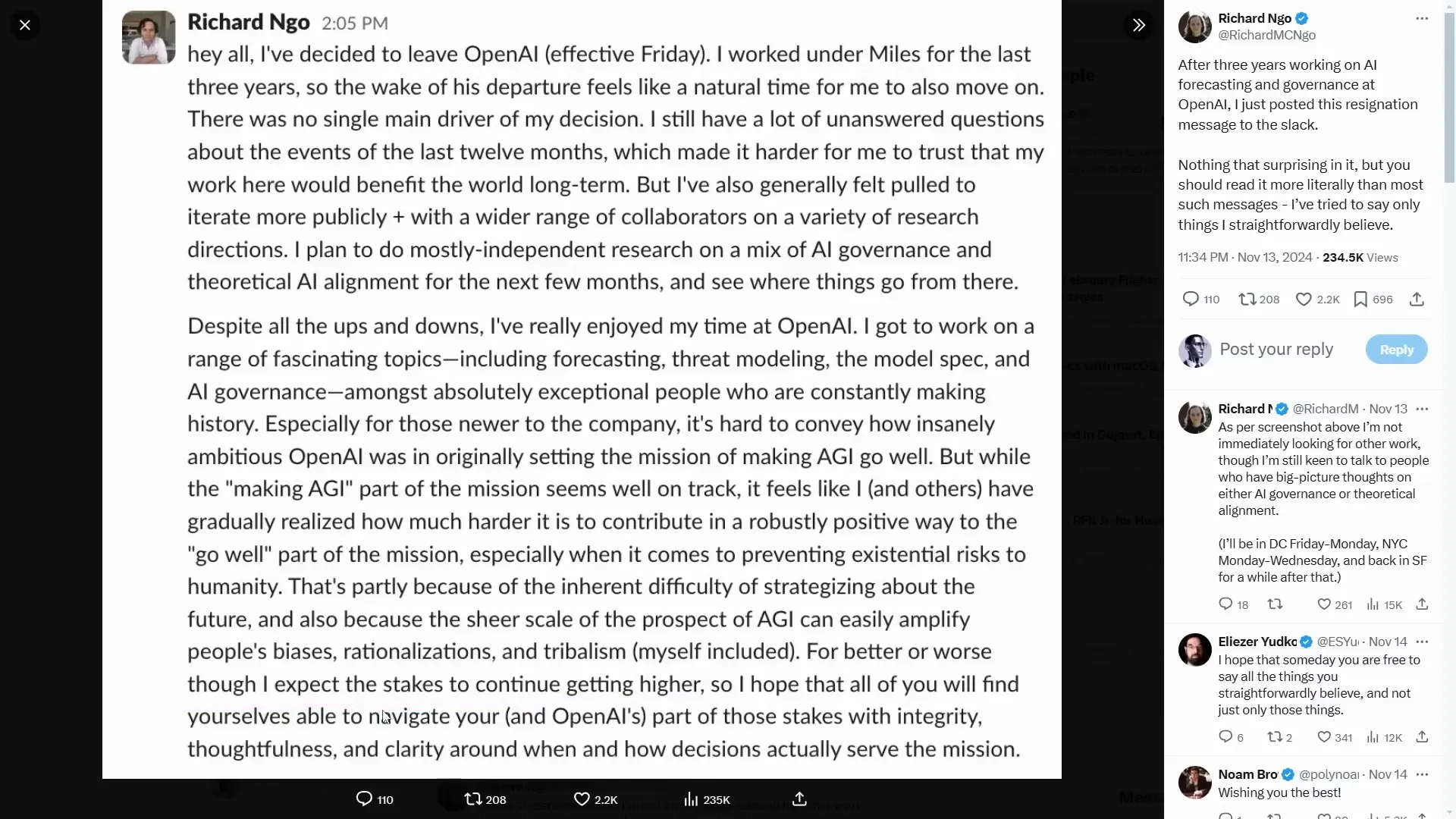

Questions on Trust and Impact

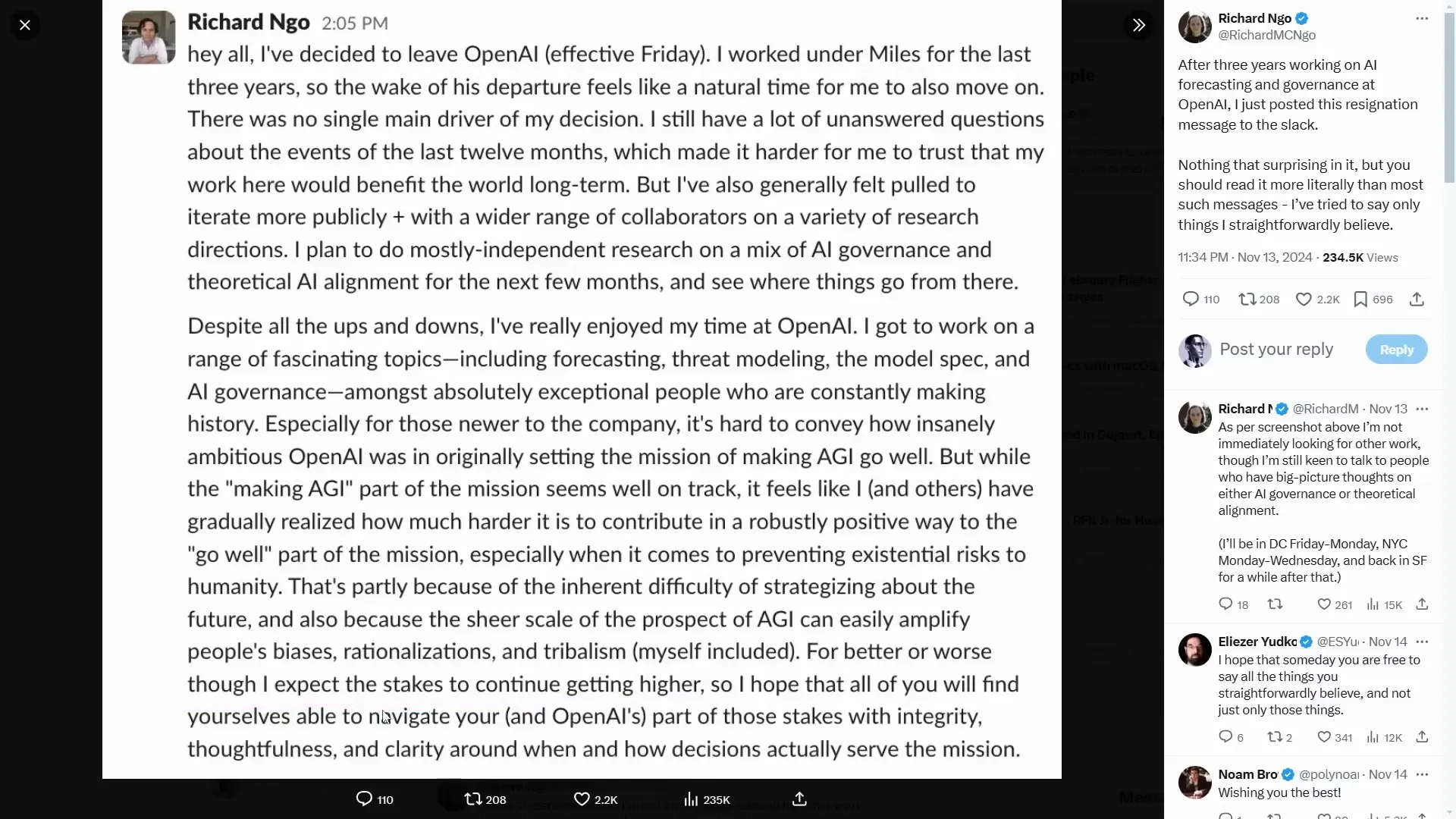

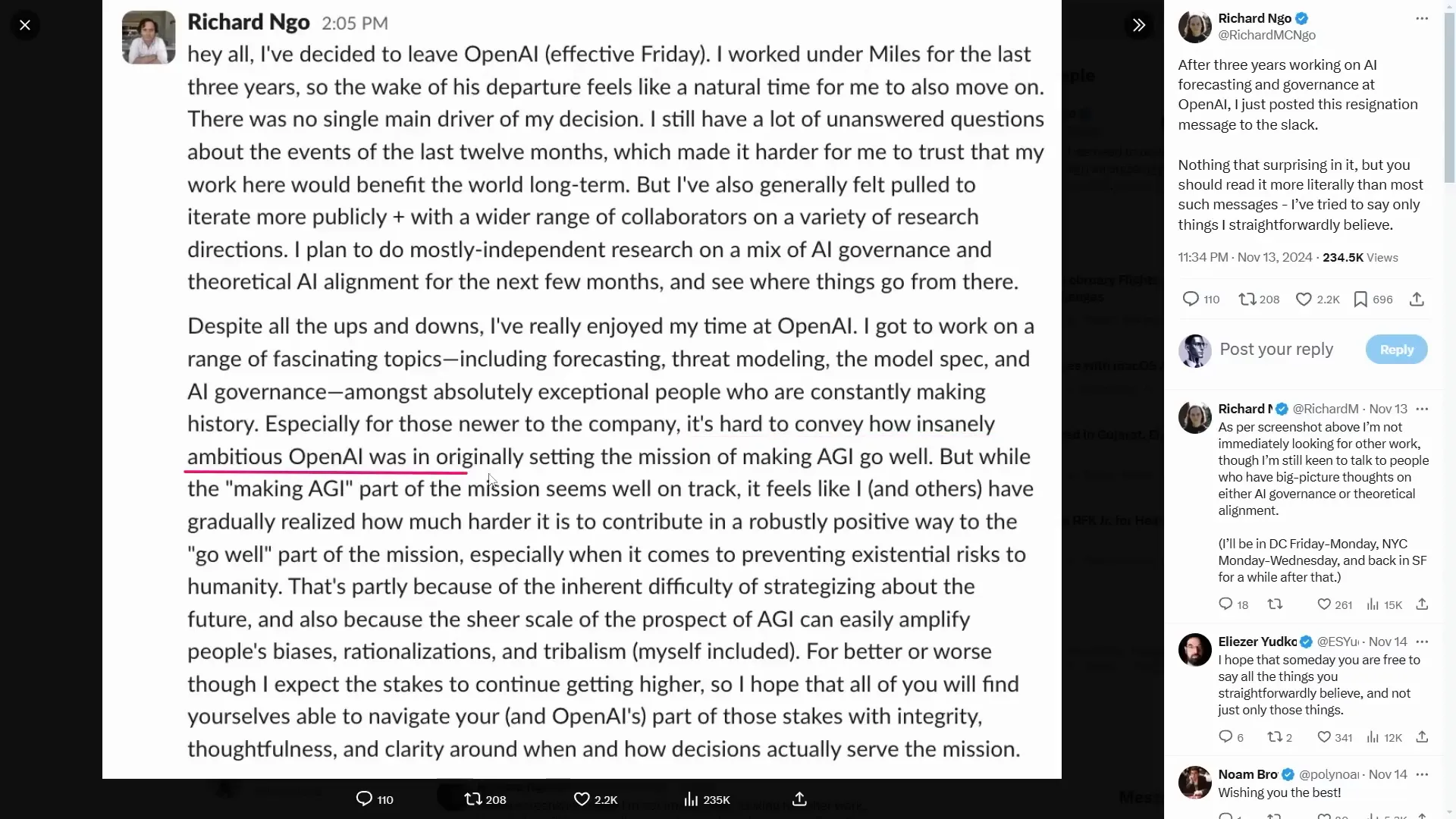

In his resignation message, Richard revealed that he has lingering questions about the past year's events, making it hard for him to trust that his work would benefit humanity in the long run. This statement isn't just a casual remark; it suggests a crisis of confidence in OpenAI's mission to ensure a positive trajectory for AGI. He feels that contributing positively to the mission is becoming increasingly challenging, especially when it comes to managing existential risks.

This sentiment isn’t isolated. As AGI approaches, many insiders are grappling with the implications of their work. Richard's move to independent research indicates a desire to explore alternative paths, signaling that he might not be the last to jump ship.

🌍 Mission Concerns

The mission of making AGI go well is undeniably ambitious. Richard’s resignation highlights a crucial point: while the goal seems within reach, the challenges to ensure its positive impact are monumental. He acknowledges that the road to creating a safe and beneficial AGI is fraught with difficulties, and that the sheer scale of the stakes can amplify biases and tribalism along the way.

As AGI nears reality, these mission concerns are not just theoretical. They represent a broader apprehension that the AI landscape is evolving faster than our ethical frameworks can adapt. The stakes are rising, and with that, the pressure to deliver safe and effective AI systems becomes more urgent.

👥 Team Departures

OpenAI isn’t just losing Richard. The trend of team departures is alarming. The AGI Readiness team is seeing members leave one after another, which raises serious questions about the organization's stability and vision. What’s causing this wave of resignations? Is it disillusionment with the pace of development? Or perhaps a growing fear of the implications of their work?

These questions linger in the air as we watch talented individuals step away from roles that once promised to be groundbreaking. Each resignation adds to the narrative that perhaps the ambitious goals set by OpenAI are becoming too much to bear.

⚙️ AGI Development

As the AGI landscape rapidly evolves, the development strategies must also adapt. Richard's comments about the inherent difficulty of strategizing for the future resonate deeply. The sheer scale of AI's potential can distort perspectives, leading to decisions that might not align with the original mission.

With each passing day, we inch closer to AGI, but the path is fraught with uncertainty. The focus now shifts from merely achieving AGI to ensuring it aligns with humanity's best interests. This requires not just technical prowess but also a robust ethical framework.

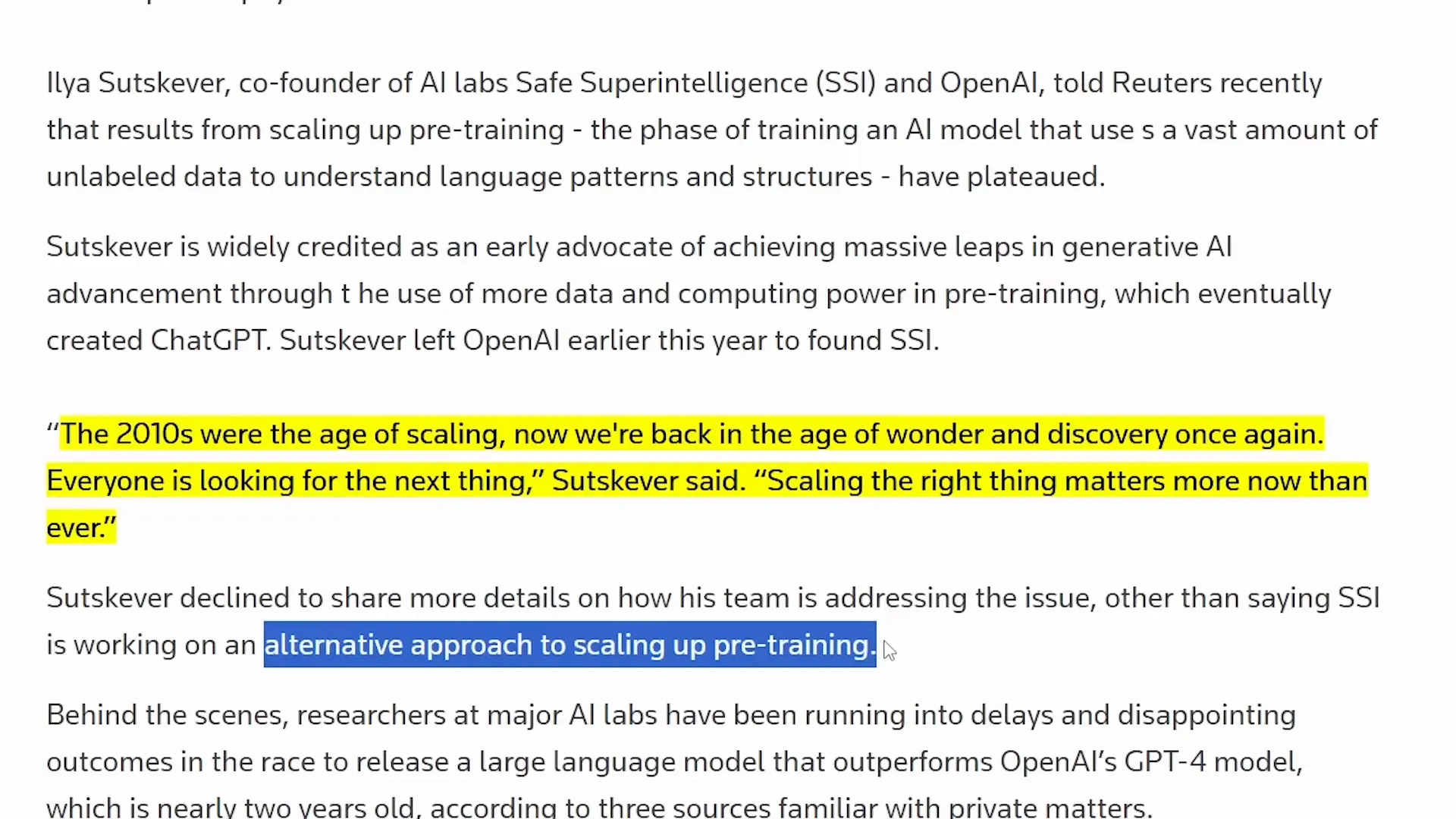

📈 Scaling Limitations

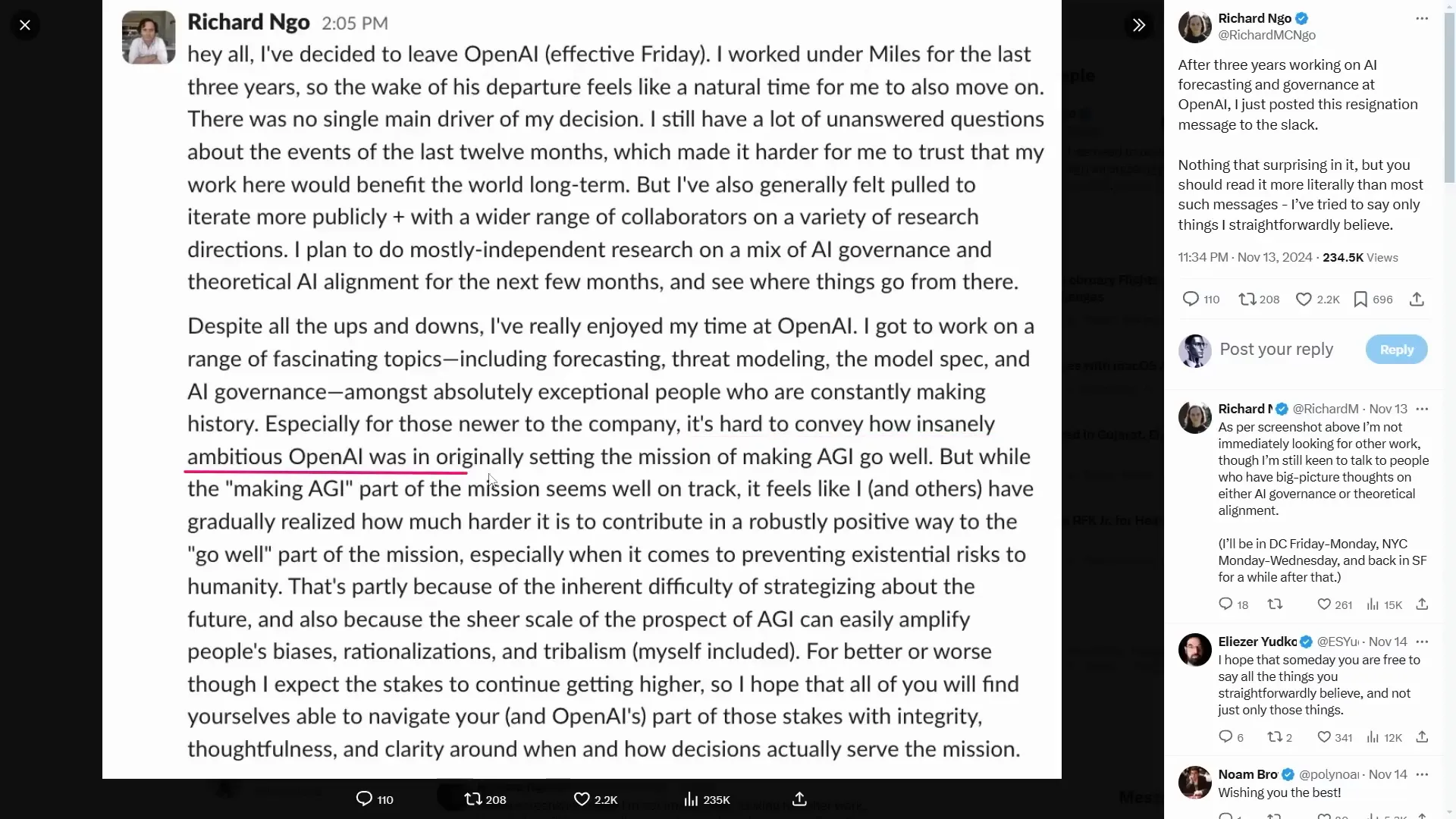

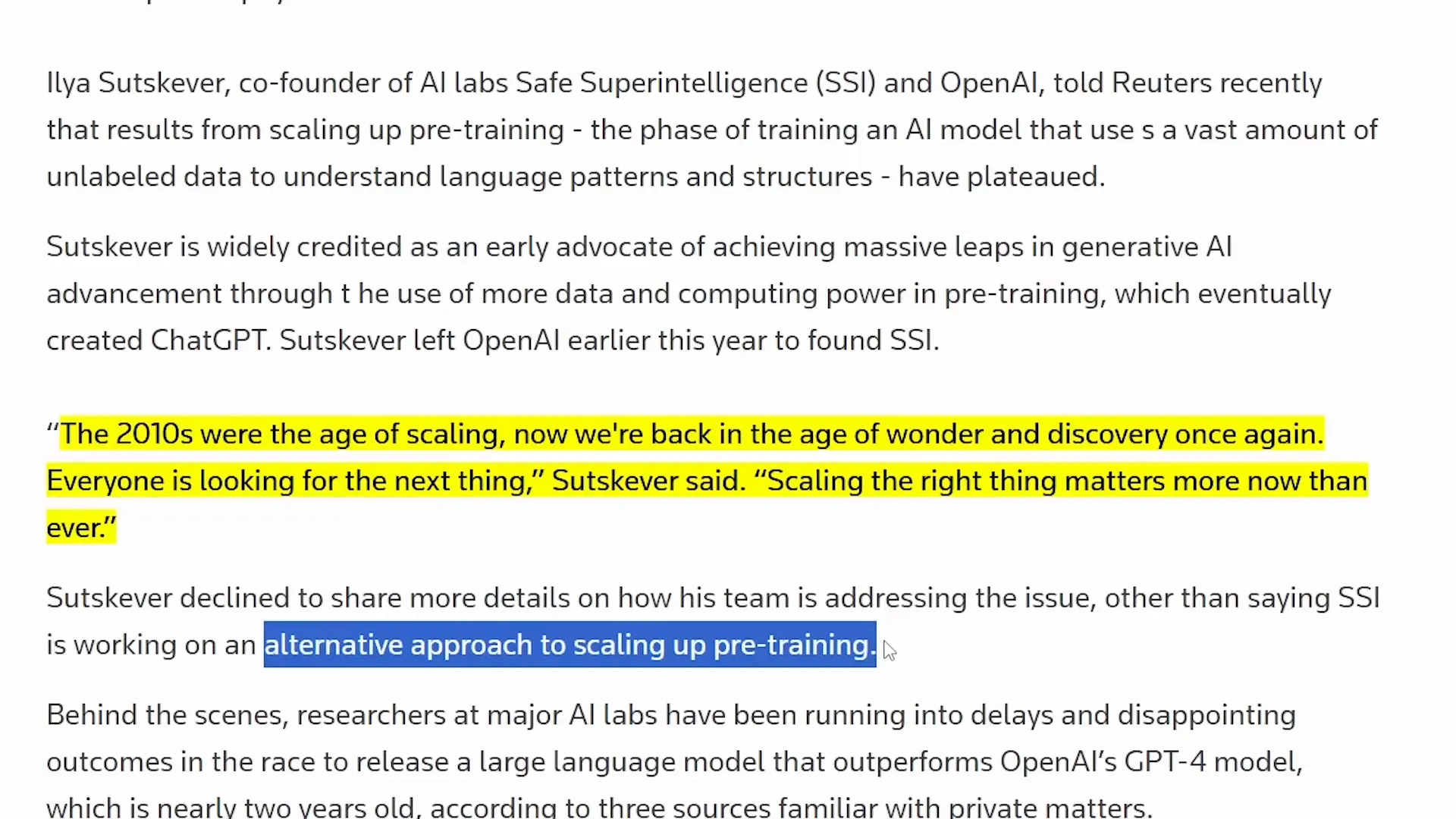

As we explore the current state of AI, we find ourselves at a crossroads. The methods that once propelled AI development are now hitting limits. Ilya Sutskever's assertion that we’ve shifted from an age of scaling to one of wonder and discovery is critical. The realization that simply adding more data isn't yielding the exponential benefits we once expected is a wake-up call.

Companies are now tasked with innovating beyond traditional scaling. They need to explore new avenues, pushing the boundaries of what AI can achieve without just throwing more resources at the problem. The question now is: how do we redefine success in this new paradigm?

🛠️ Model Challenges

Anthropic's Opus 3.5 has entered the chat, and it’s reportedly not performing as expected. Despite the hype, it seems to be struggling to deliver the significant advancements that would justify the massive investments behind it. The industry is at a tipping point where diminishing returns are becoming more apparent.

With each model deployment costing millions, the pressure to show tangible results is mounting. Companies are under scrutiny to justify their expenditures. This can lead to a precarious situation where the push for performance may overshadow the ethical considerations necessary for responsible AI development.

🚨 Performance Issues

Performance is paramount in the AI race, and a dip in expected outcomes can spell disaster for companies. The challenges reportedly faced by Opus 3.5 serve as a stark reminder that the AI community must do more than just iterate on existing models; it needs to innovate meaningfully. The fear of an AI bubble bursting looms large as companies invest heavily without seeing proportional returns.

Every new model trained brings a hefty price tag, and the expectation is that these models will outperform their predecessors significantly. The pressure is palpable, and this could lead to shortcuts being taken, which might compromise ethical standards.

🧭 Industry Direction

As we gaze into the future of AI, industry direction becomes crucial. The recent resignations and performance issues signal a need for a paradigm shift. The conversation is evolving from merely achieving AGI to ensuring its safe and beneficial integration into society.

Companies must pivot to prioritize ethical considerations alongside technological advancements. The potential for AI to revolutionize industries is immense, but it must be managed with care. The future of AI hinges on our ability to navigate these complexities responsibly.

📈 Progress Trajectory

While the current landscape is rocky, there’s a sense of optimism in the air. Dario Amodei’s insights into human-level reasoning in AI suggest a promising trajectory. The rapid advancements in coding abilities and reasoning models indicate that we are on the brink of achieving remarkable milestones.

However, it’s essential to temper this optimism with caution. The path forward must be navigated thoughtfully, ensuring that progress does not come at the expense of safety and ethical considerations. Each leap forward must be matched with a commitment to responsible AI development.

🗣️ AGI Debate

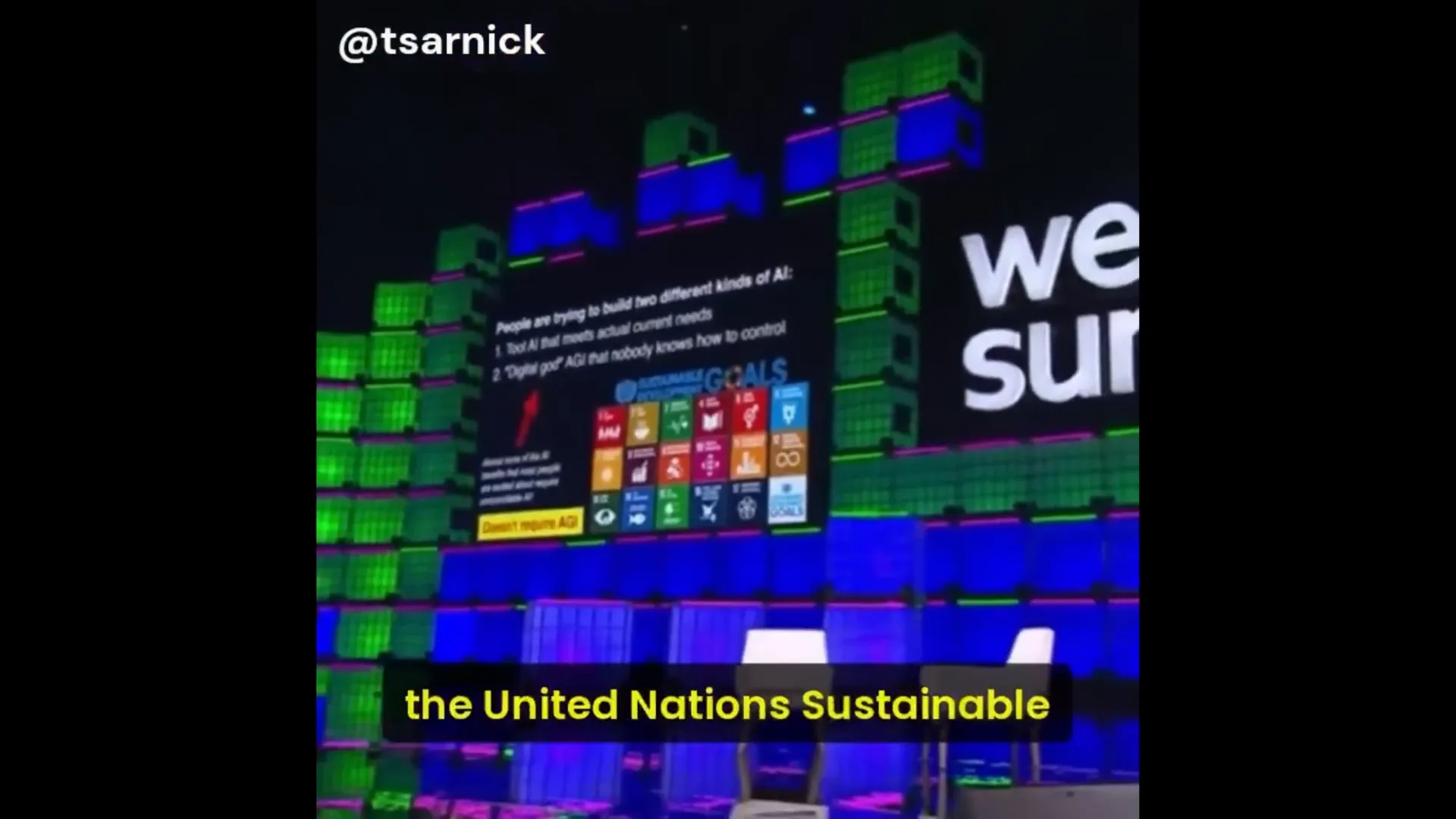

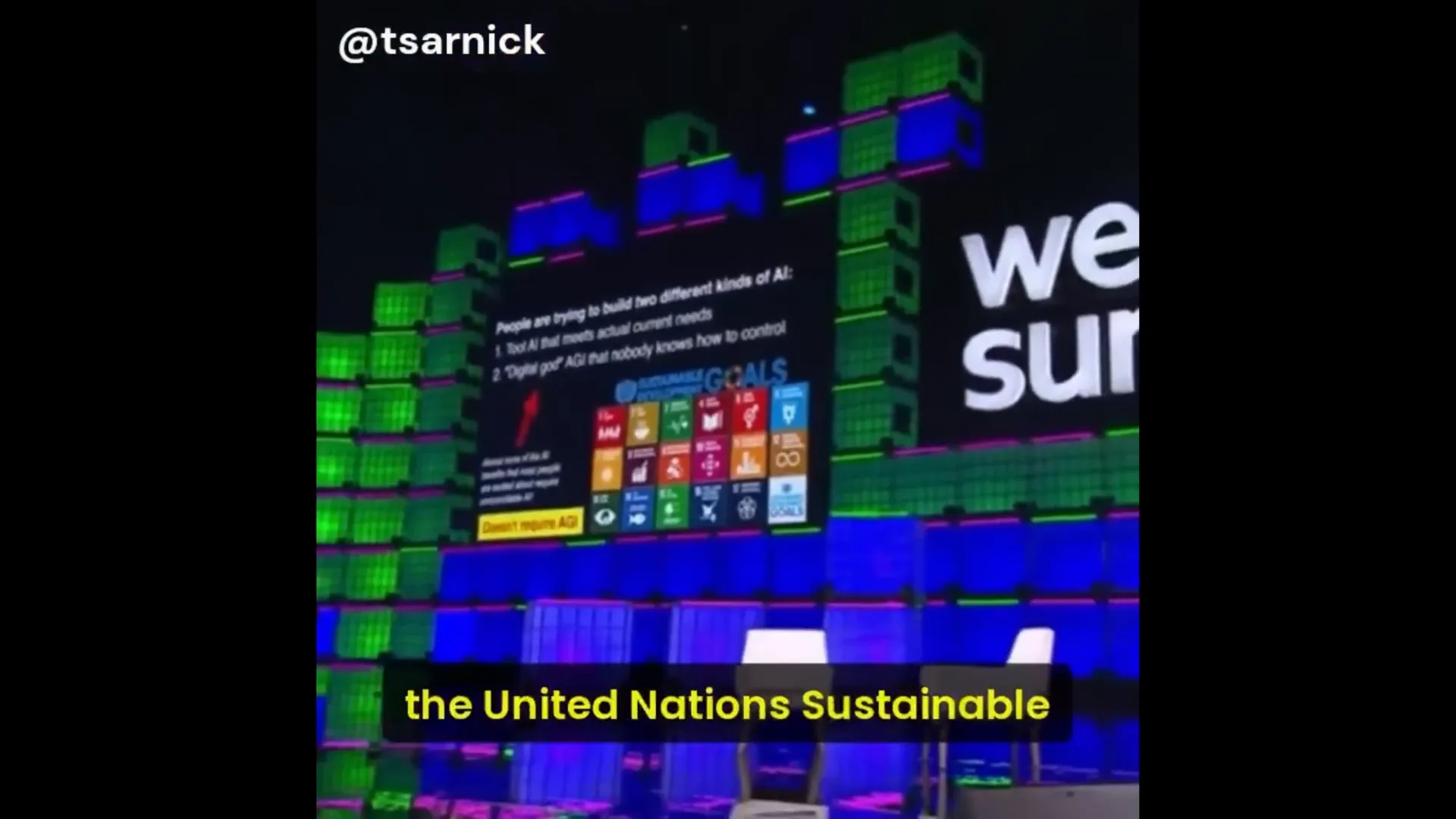

The debate surrounding AGI is intensifying. While some argue for the necessity of developing AGI, others, like Max Tegmark, advocate for a more cautious approach. The distinction between Tool AI and AGI is becoming clearer, with many suggesting that we can achieve significant benefits without the risks associated with AGI.

This discourse is vital as we consider the implications of our technological advancements. The focus should be on creating tools that serve humanity without overstepping into realms that could lead to catastrophic failures. The conversation is shifting, and it’s time to redefine what success looks like in the AI industry.

🧠 Intelligence Ceiling

Let’s talk about the intelligence ceiling. What if I told you that human intelligence isn’t the pinnacle? That’s right! Experts like Dario Amodei are suggesting there's a vast expanse of cognitive potential waiting to be tapped by AI. The notion that we are the smartest beings on the planet is not only arrogant; it’s downright limiting.

Think about it. Just a few centuries ago, we believed we were the center of the universe. Fast forward to today, and we’re still unearthing mysteries within our own biology. If humans struggle to grasp complex systems like the immune response, imagine what AI could achieve by processing and integrating knowledge across various domains!

🛠️ Tool AI

Now, let’s dive into Tool AI. This is where the real magic happens! Max Tegmark argues that we don’t need AGI to reap the benefits of AI. Instead, we can harness the power of Tool AI—specific applications designed to tackle real-world problems without the existential risks of AGI.

Imagine AI systems specifically crafted for medicine, autonomous driving, or even accounting. Each of these tools can enhance efficiency and safety without the need for self-aware intelligence. That’s a win-win!

⛪ Religious Aspects

Let’s address the elephant in the room: the almost religious fervor surrounding AGI development. Some might argue that the quest for AGI is akin to a messianic complex. There’s an underlying belief that whoever achieves AGI first will attain god-like status. This perspective has garnered attention, especially in the tech hubs of San Francisco.

However, we need to shift our focus. Creating AGI should be seen as a scientific challenge, not a spiritual endeavor. The real goal should be to develop powerful tools that enhance human life while maintaining control over them. After all, we want our tools to serve us, not enslave us!

🧑‍🎓 Max Tegmark

Max Tegmark’s insights are pivotal in this discussion. He emphasizes that building AGI might not be necessary for achieving profound advancements. Instead, we should prioritize Tool AI that can be safely controlled. This perspective is not just pragmatic; it’s essential for ensuring that AI remains beneficial and does not spiral into chaos.

By focusing on specialized AI applications, we can mitigate risks while still reaping the rewards. The path forward is clear: let’s innovate without inviting disaster!

🚀 Tool Benefits

The benefits of Tool AI are staggering. From saving lives on the roads to improving healthcare diagnostics, the potential is limitless. Tool AI can prevent accidents, enhance medical procedures, and even assist in groundbreaking research—all without the complications that AGI might introduce.

Imagine a world where AI helps fold proteins or develop new medications. These applications can revolutionize industries without the existential threat that AGI poses. The focus should be on tools that empower humanity, not overshadow it!

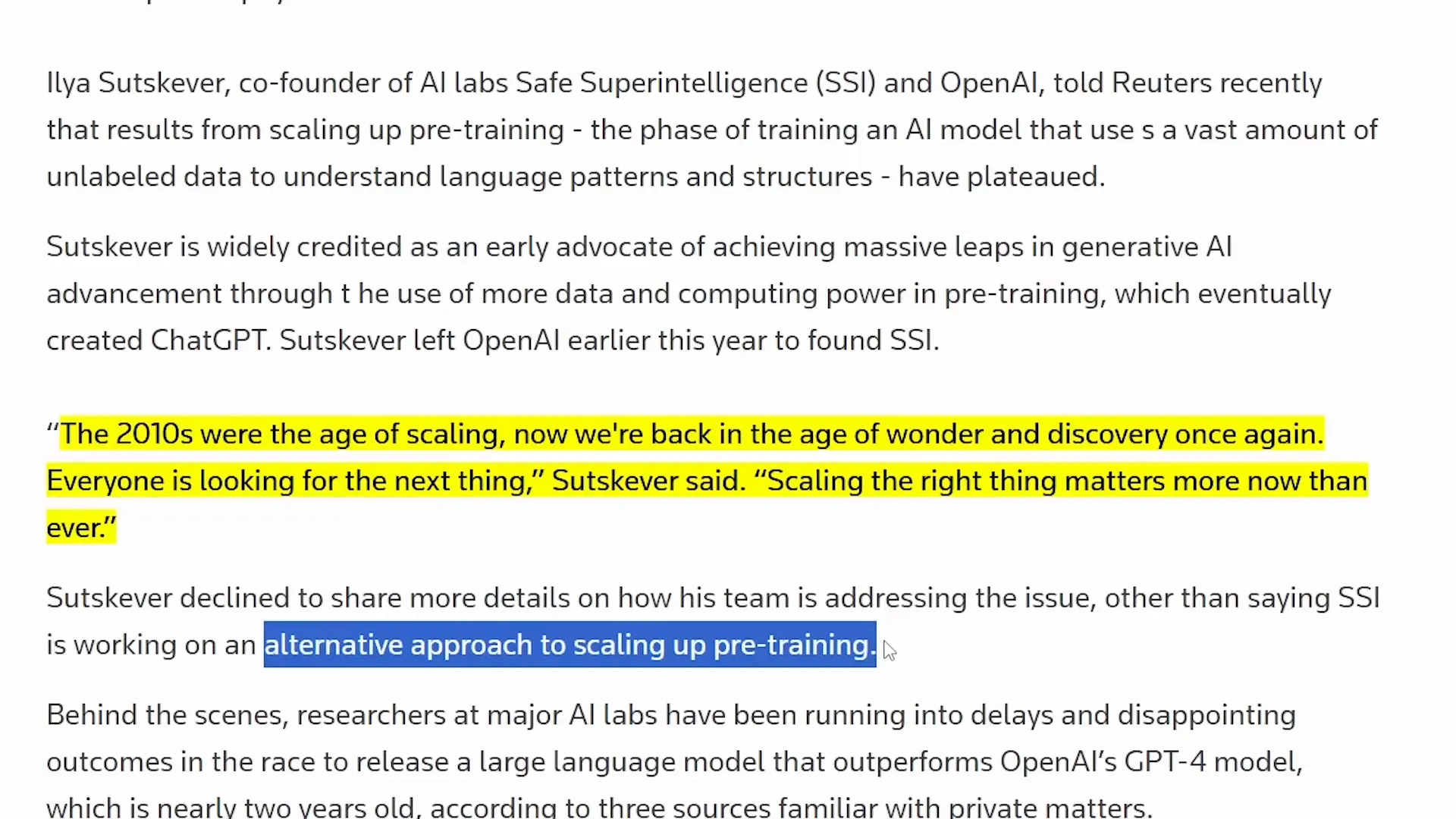

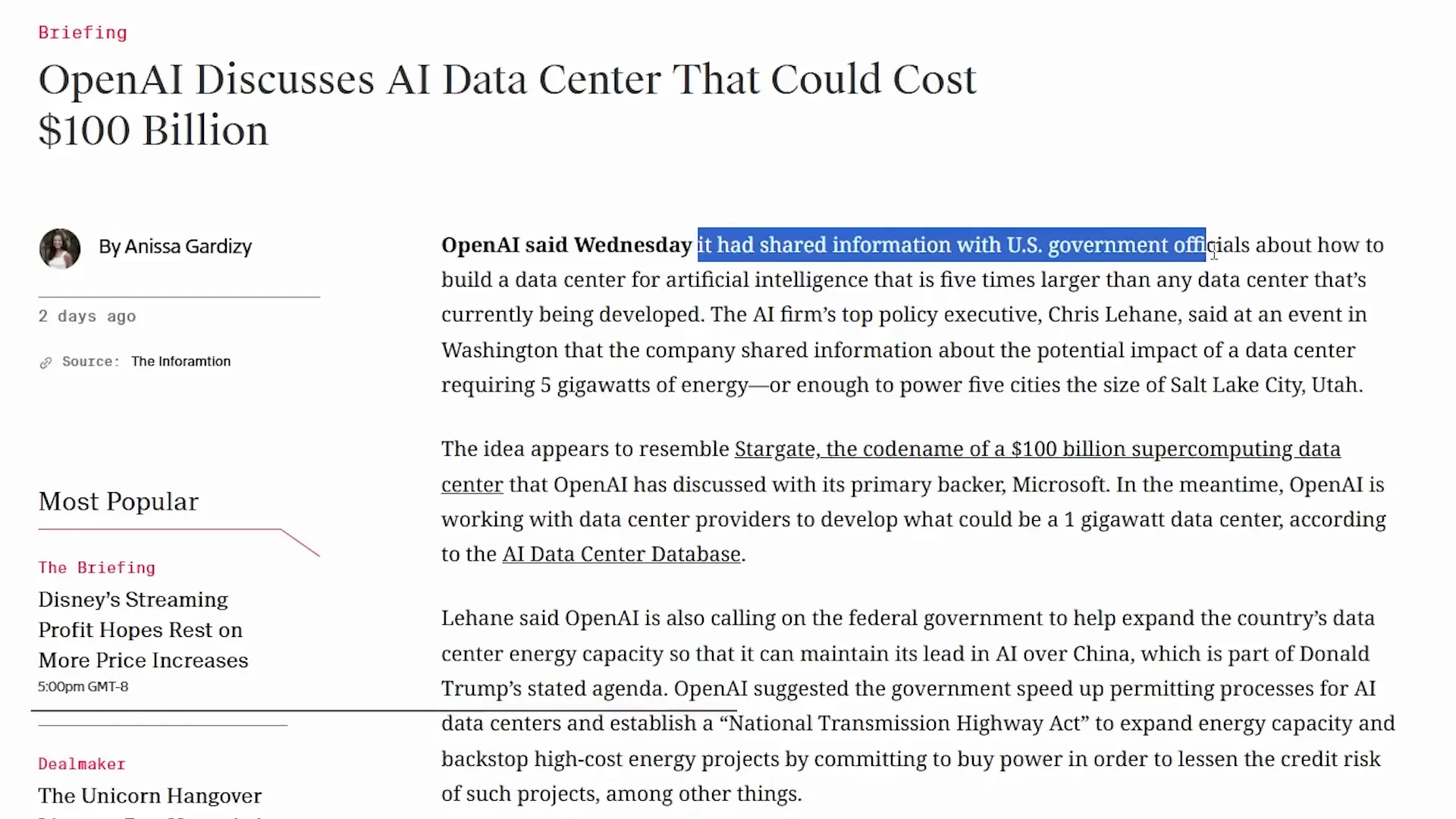

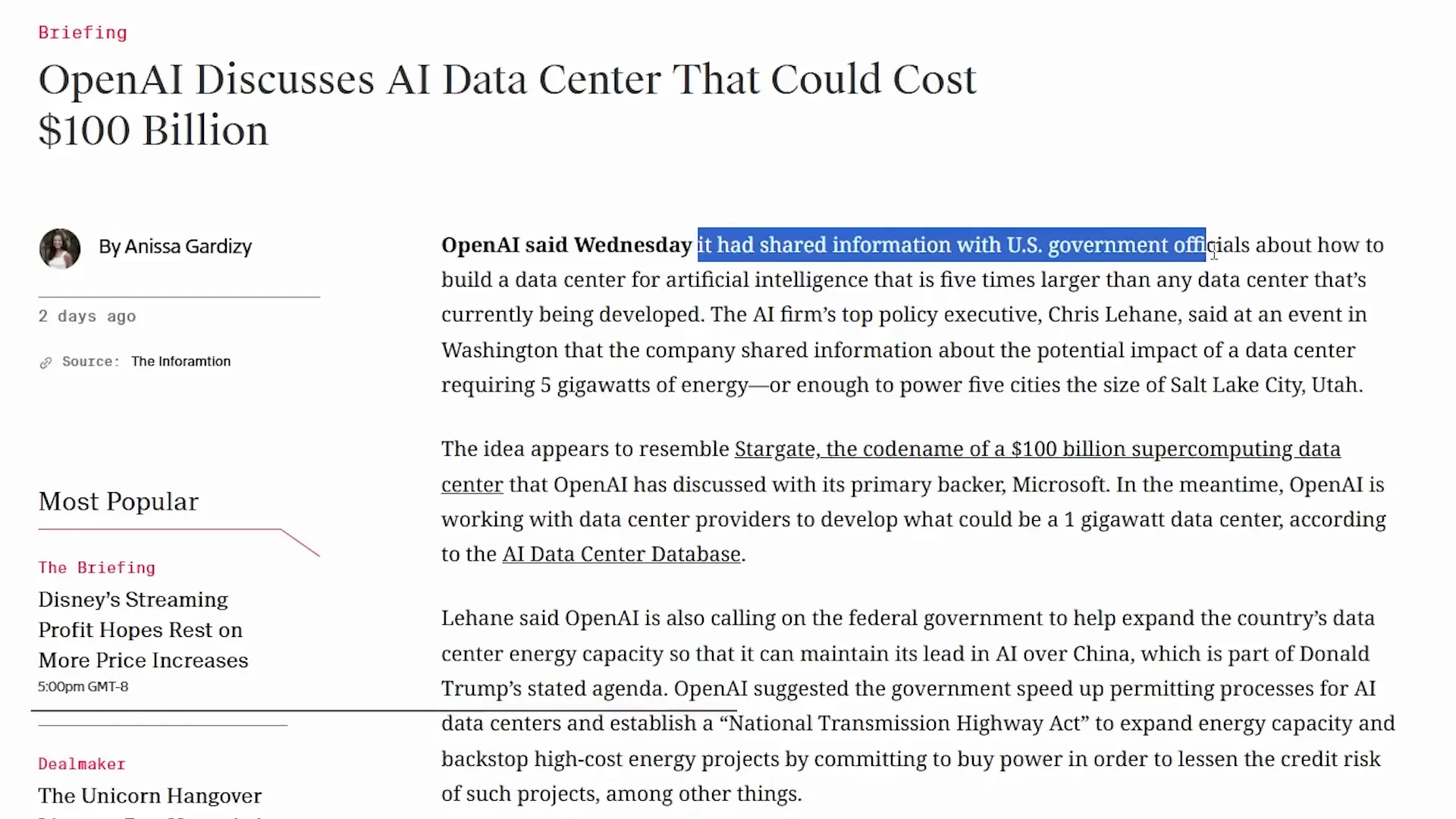

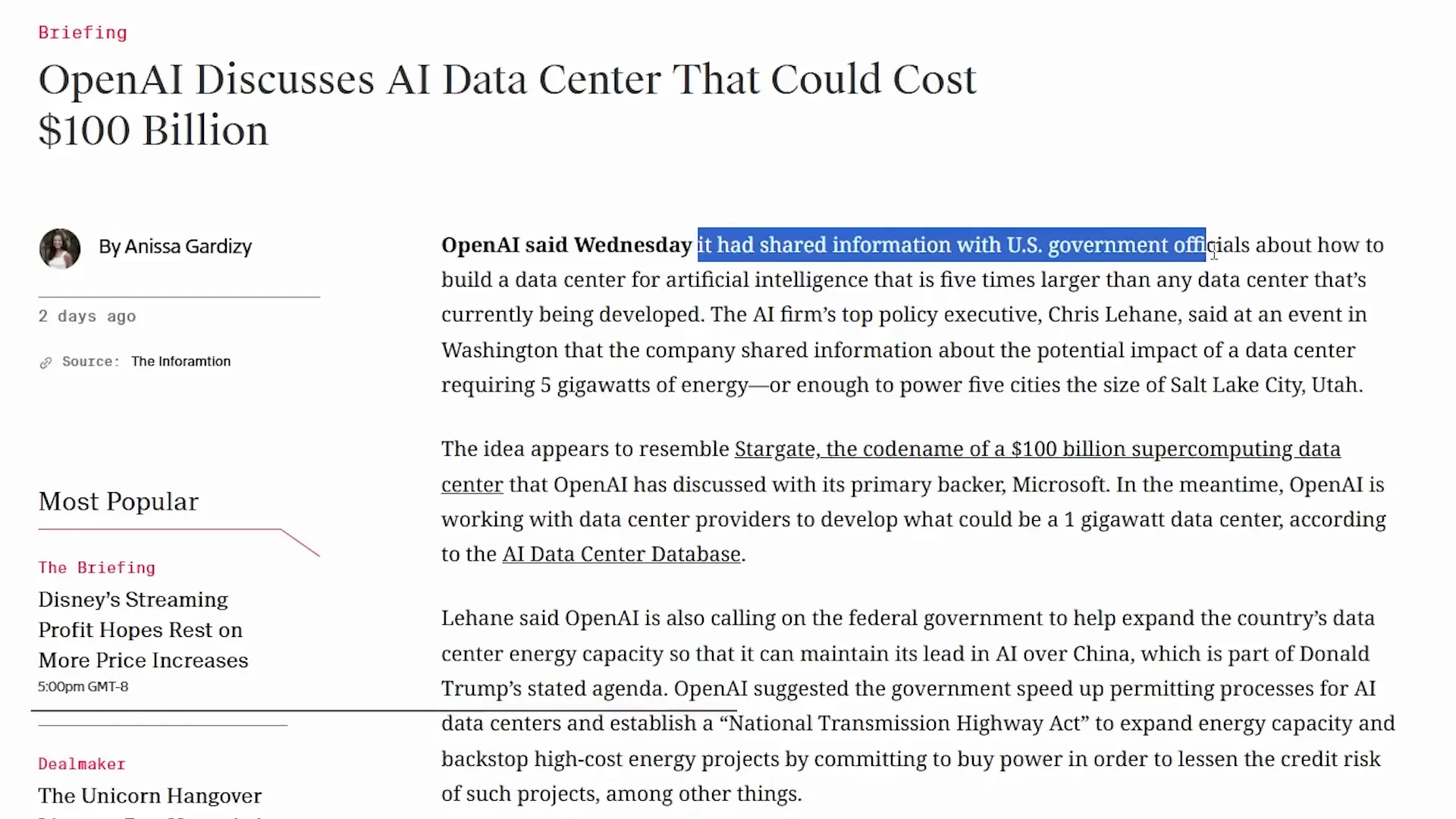

🏭 Data Centers

Now, let’s talk about infrastructure—specifically, data centers. OpenAI is reportedly working on an AI data center that could cost a whopping $100 billion. This is not just about scaling up; it’s about laying the groundwork for future AI advancements.

This ambitious project resembles the widely reported Project Stargate, which aims to build a supercomputer cluster for AGI. With the U.S. government investing heavily in AI infrastructure, we’re on the brink of a technological renaissance that could redefine economic development.

🔮 Future Infrastructure

The future of AI hinges on robust infrastructure. As we aim for advanced AI systems, the need for powerful data centers becomes paramount. These centers will not only support current models but will pave the way for future innovations that could potentially transform industries.

With energy requirements soaring, we must ensure that these infrastructures are sustainable and efficient. The stakes are high, and smart investments in AI infrastructure can lead to unparalleled progress.

👨‍💻 Developer Integration

For developers, the integration of AI tools is a game-changer. With recent advancements, tools like ChatGPT are being integrated into development environments like Xcode and terminal applications. This means developers can now streamline their workflows and enhance productivity seamlessly.

Imagine coding an app while AI assists in real time, suggesting improvements or troubleshooting errors. This is the future of development: collaborative and efficient. Developers can focus on creativity while AI handles the nitty-gritty details!

🎥 Video AI

Let’s not overlook the advancements in video AI. The launch of Vidu AI’s multimodal capabilities is a testament to how far we’ve come. This technology allows creators to maintain consistency across various entities in their videos, enhancing the overall quality and coherence of content.

With tools like Vidu AI, creators can exercise unprecedented control over their projects, ensuring that their vision is accurately portrayed. This level of precision is revolutionary, making it easier for content creators to produce high-quality material.

🔔 Final Updates

As we wrap up, it’s clear that the AI landscape is evolving rapidly. The shift from AGI to Tool AI presents us with unique opportunities to innovate responsibly. The focus should remain on creating tools that enhance human life while ensuring ethical considerations are at the forefront.

The future is bright, but it requires thoughtful navigation. With the right strategies in place, we can harness the power of AI to create a better world for everyone. Let’s embrace this journey together!


In a rapidly evolving landscape, the AI community is witnessing significant shifts, including high-profile resignations and emerging challenges in AGI development. This blog delves into the latest updates, exploring the implications of these changes and the cutting-edge innovations on the horizon.

📰 Resignation News

Another day, another resignation in the AI world! Richard NGO, a key player on the OpenAI governance team, has made waves with his announcement. After three years of being deeply involved in AI forecasting and governance, he's decided to pack his bags. His departure is a part of a larger trend we've seen in 2024: the exodus of talent from OpenAI.

Richard worked under Miles Brundage, who recently expressed doubts about the readiness of both OpenAI and the world for AGI. This isn't just a coincidence; it's a sign of deeper concerns brewing within the organization. Richard's resignation echoes the earlier disbanding of the super alignment team, which raises eyebrows about the internal dynamics at OpenAI.

Questions on Trust and Impact

In his resignation message, Richard revealed that he has lingering questions about the past year's events, making it hard for him to trust that his work would benefit humanity in the long run. This statement isn't just a casual remark; it suggests a crisis of confidence in OpenAI's mission to ensure a positive trajectory for AGI. He feels that contributing positively to the mission is becoming increasingly challenging, especially when it comes to managing existential risks.

This sentiment isn’t isolated. As AGI approaches, many insiders are grappling with the implications of their work. Richard's move to independent research indicates a desire to explore alternative paths, signaling that he might not be the last to jump ship.

🌍 Mission Concerns

The mission of making AGI go well is undeniably ambitious. Richard’s resignation highlights a crucial point: while the goal seems within reach, the challenges to ensure its positive impact are monumental. He acknowledges that the road to creating a safe and beneficial AGI is fraught with difficulties, amplifying biases and tribalism in the process.

As AGI nears reality, these mission concerns are not just theoretical. They represent a broader apprehension that the AI landscape is evolving faster than our ethical frameworks can adapt. The stakes are rising, and with that, the pressure to deliver safe and effective AI systems becomes more urgent.

👥 Team Departures

OpenAI isn’t just losing Richard. The trend of team departures is alarming. The AGI Readiness team is seeing members leave one after another, which raises serious questions about the organization's stability and vision. What’s causing this wave of resignations? Is it disillusionment with the pace of development? Or perhaps a growing fear of the implications of their work?

These questions linger in the air as we watch talented individuals step away from roles that once promised to be groundbreaking. Each resignation adds to the narrative that perhaps the ambitious goals set by OpenAI are becoming too much to bear.

⚙️ AGI Development

As the AGI landscape rapidly evolves, the development strategies must also adapt. Richard's comments about the inherent difficulty of strategizing for the future resonate deeply. The sheer scale of AI's potential can distort perspectives, leading to decisions that might not align with the original mission.

With each passing day, we inch closer to AGI, but the path is fraught with uncertainty. The focus now shifts from merely achieving AGI to ensuring it aligns with humanity's best interests. This requires not just technical prowess but also a robust ethical framework.

📈 Scaling Limitations

As we explore the current state of AI, we find ourselves at a crossroads. The methods that once propelled AI development are now hitting limits. Ilia Satov's assertion that we’ve shifted from an age of scaling to one of wonder and discovery is critical. The realization that simply adding more data isn't yielding the exponential benefits we once expected is a wake-up call.

Companies are now tasked with innovating beyond traditional scaling. They need to explore new avenues, pushing the boundaries of what AI can achieve without just throwing more resources at the problem. The question now is: how do we redefine success in this new paradigm?

🛠️ Model Challenges

Opus 3.5 has entered the chat, and it’s not performing as expected. Despite the hype, it seems to be struggling to deliver the significant advancements that justify the massive investments behind it. The industry is at a tipping point where diminishing returns are becoming more apparent.

With each model deployment costing millions, the pressure to show tangible results is mounting. Companies are under scrutiny to justify their expenditures. This can lead to a precarious situation where the push for performance may overshadow the ethical considerations necessary for responsible AI development.

🚨 Performance Issues

Performance is paramount in the AI race, and a dip in expected outcomes can spell disaster for companies. The challenges faced by Opus 3.5 serve as a stark reminder that the AI community must do more than just iterate on existing models; it needs to innovate meaningfully. The fear of an AI bubble bursting looms large as companies invest heavily without seeing proportional returns.

Every new model trained brings a hefty price tag, and the expectation is that these models will outperform their predecessors significantly. The pressure is palpable, and this could lead to shortcuts being taken, which might compromise ethical standards.

🧭 Industry Direction

As we gaze into the future of AI, industry direction becomes crucial. The recent resignations and performance issues signal a need for a paradigm shift. The conversation is evolving from merely achieving AGI to ensuring its safe and beneficial integration into society.

Companies must pivot to prioritize ethical considerations alongside technological advancements. The potential for AI to revolutionize industries is immense, but it must be managed with care. The future of AI hinges on our ability to navigate these complexities responsibly.

📈 Progress Trajectory

While the current landscape is rocky, there’s a sense of optimism in the air. Dario Amodei’s insights into human-level reasoning in AI suggest a promising trajectory. The rapid advancements in coding abilities and reasoning models indicate that we are on the brink of achieving remarkable milestones.

However, it’s essential to temper this optimism with caution. The path forward must be navigated thoughtfully, ensuring that progress does not come at the expense of safety and ethical considerations. Each leap forward must be matched with a commitment to responsible AI development.

🗣️ AGI Debate

The debate surrounding AGI is intensifying. While some argue for the necessity of developing AGI, others, like Max Tegmark, advocate for a more cautious approach. The distinction between Tool AI and AGI is becoming clearer, with many suggesting that we can achieve significant benefits without the risks associated with AGI.

This discourse is vital as we consider the implications of our technological advancements. The focus should be on creating tools that serve humanity without overstepping into realms that could lead to catastrophic failures. The conversation is shifting, and it’s time to redefine what success looks like in the AI industry.

🧠 Intelligence Ceiling

Let’s talk about the intelligence ceiling. What if I told you that human intelligence isn’t the pinnacle? That’s right! Experts like Dario Amodei are suggesting there's a vast expanse of cognitive potential waiting to be tapped by AI. The notion that we are the smartest beings on the planet is not only arrogant; it’s downright limiting.

Think about it. Just a few centuries ago, we believed we were the center of the universe. Fast forward to today, and we’re still unearthing mysteries within our own biology. If humans struggle to grasp complex systems like the immune response, imagine what AI could achieve by processing and integrating knowledge across various domains!

🛠️ Tool AI

Now, let’s dive into Tool AI. This is where the real magic happens! Max Tegmark argues that we don’t need AGI to reap the benefits of AI. Instead, we can harness the power of Tool AI—specific applications designed to tackle real-world problems without the existential risks of AGI.

Imagine AI systems specifically crafted for medicine, autonomous driving, or even accounting. Each of these tools can enhance efficiency and safety without the need for self-aware intelligence. That’s a win-win!

⛪ Religious Aspects

Let’s address the elephant in the room: the almost religious fervor surrounding AGI development. Some might argue that the quest for AGI is akin to a messianic complex. There’s an underlying belief that whoever achieves AGI first will attain god-like status. This perspective has garnered attention, especially in the tech hubs of San Francisco.

However, we need to shift our focus. Creating AGI should be seen as a scientific challenge, not a spiritual endeavor. The real goal should be to develop powerful tools that enhance human life while maintaining control over them. After all, we want our tools to serve us, not enslave us!

🧑‍🎓 Max Tegmark

Max Tegmark’s insights are pivotal in this discussion. He emphasizes that building AGI might not be necessary for achieving profound advancements. Instead, we should prioritize Tool AI that can be safely controlled. This perspective is not just pragmatic; it’s essential for ensuring that AI remains beneficial and does not spiral into chaos.

By focusing on specialized AI applications, we can mitigate risks while still reaping the rewards. The path forward is clear: let’s innovate without inviting disaster!

🚀 Tool Benefits

The benefits of Tool AI are staggering. From saving lives on the roads to improving healthcare diagnostics, the potential is limitless. Tool AI can prevent accidents, enhance medical procedures, and even assist in groundbreaking research—all without the complications that AGI might introduce.

Imagine a world where AI helps fold proteins or develop new medications. These applications can revolutionize industries without the existential threat that AGI poses. The focus should be on tools that empower humanity, not overshadow it!

🏭 Data Centers

Now, let’s talk about infrastructure—specifically, data centers. OpenAI is reportedly working on an AI data center that could cost a whopping $100 billion. This is not just about scaling up; it’s about laying the groundwork for future AI advancements.

This ambitious project resembles the widely reported Project Stargate, which aims to build a supercomputer cluster for AGI. With the U.S. government investing heavily in AI infrastructure, we’re on the brink of a technological renaissance that could redefine economic development.

🔮 Future Infrastructure

The future of AI hinges on robust infrastructure. As we aim for advanced AI systems, the need for powerful data centers becomes paramount. These centers will not only support current models but also pave the way for future innovations that could transform industries.

With energy requirements soaring, we must ensure that these infrastructures are sustainable and efficient. The stakes are high, and smart investments in AI infrastructure can lead to unparalleled progress.

👨‍💻 Developer Integration

For developers, the integration of AI tools is a game-changer. With recent advancements, tools like ChatGPT are being integrated into development environments like Xcode and terminal applications. This means developers can now streamline their workflows and enhance productivity seamlessly.

Imagine coding an app while AI assists in real-time, suggesting improvements or troubleshooting errors. This is the future of development—collaborative and efficient. Developers can focus on creativity while AI handles the nitty-gritty details!

🎥 Video AI

Let’s not overlook the advancements in video AI. The launch of Vidu AI’s multimodal capabilities is a testament to how far we’ve come. This technology allows creators to maintain consistency across various entities in their videos, enhancing the overall quality and coherence of content.

With tools like Vidu AI, creators can exercise unprecedented control over their projects, ensuring that their vision is accurately portrayed. This level of precision is revolutionary, making it easier for content creators to produce high-quality material.

🔔 Final Updates

As we wrap up, it’s clear that the AI landscape is evolving rapidly. The shift from AGI to Tool AI presents us with unique opportunities to innovate responsibly. The focus should remain on creating tools that enhance human life while ensuring ethical considerations are at the forefront.

The future is bright, but it requires thoughtful navigation. With the right strategies in place, we can harness the power of AI to create a better world for everyone. Let’s embrace this journey together!
