The Ultimate Showdown: Kling vs. Runway Gen 3 vs. Luma Dream Machine vs. Pixverse

Danny Roman

October 31, 2024

In the rapidly evolving world of AI video generation, four major players have recently upped their game: Runway Gen 3, Kling, Luma Dream Machine, and Pixverse. Join us as we dive deep into their features, performance, and value, helping you determine which tool is the best fit for your creative needs.

🚀 Intro

Welcome to the future of video creation! The landscape of AI video generators is evolving at lightning speed, and it’s about time you get in on the action. Imagine crafting breathtaking videos with just a few clicks—sounds like magic, right? Well, it’s not magic; it’s technology!

Whether you're a seasoned creator or just stepping into the world of video production, the advancements in AI tools like Runway, Kling, Luma Dream Machine, and Pixverse are game-changers. In this blog, we'll explore how these platforms work, how to generate stunning videos, and even compare text and image inputs to optimize your creative process.

So, buckle up! Let’s dive into the nitty-gritty of how you can leverage these powerful tools to bring your wildest ideas to life.

⚙️ How it works

Getting started with AI video generation is as easy as pie. Each platform offers a user-friendly interface that guides you through the process. Here’s the lowdown:

Choose Your Tool: Pick one of the platforms—Runway, Kling, Luma, or Pixverse. Each has unique strengths, so choose wisely!

Input Your Prompt: Type in a descriptive prompt for what you want to create. Be as specific as possible to get the best results.

Select Settings: Depending on the platform, you might set parameters like video length, resolution, and creativity level.

Generate: Hit that generate button and watch the magic unfold. Sometimes it takes a few tries to nail the perfect video, so don’t hesitate to reroll!

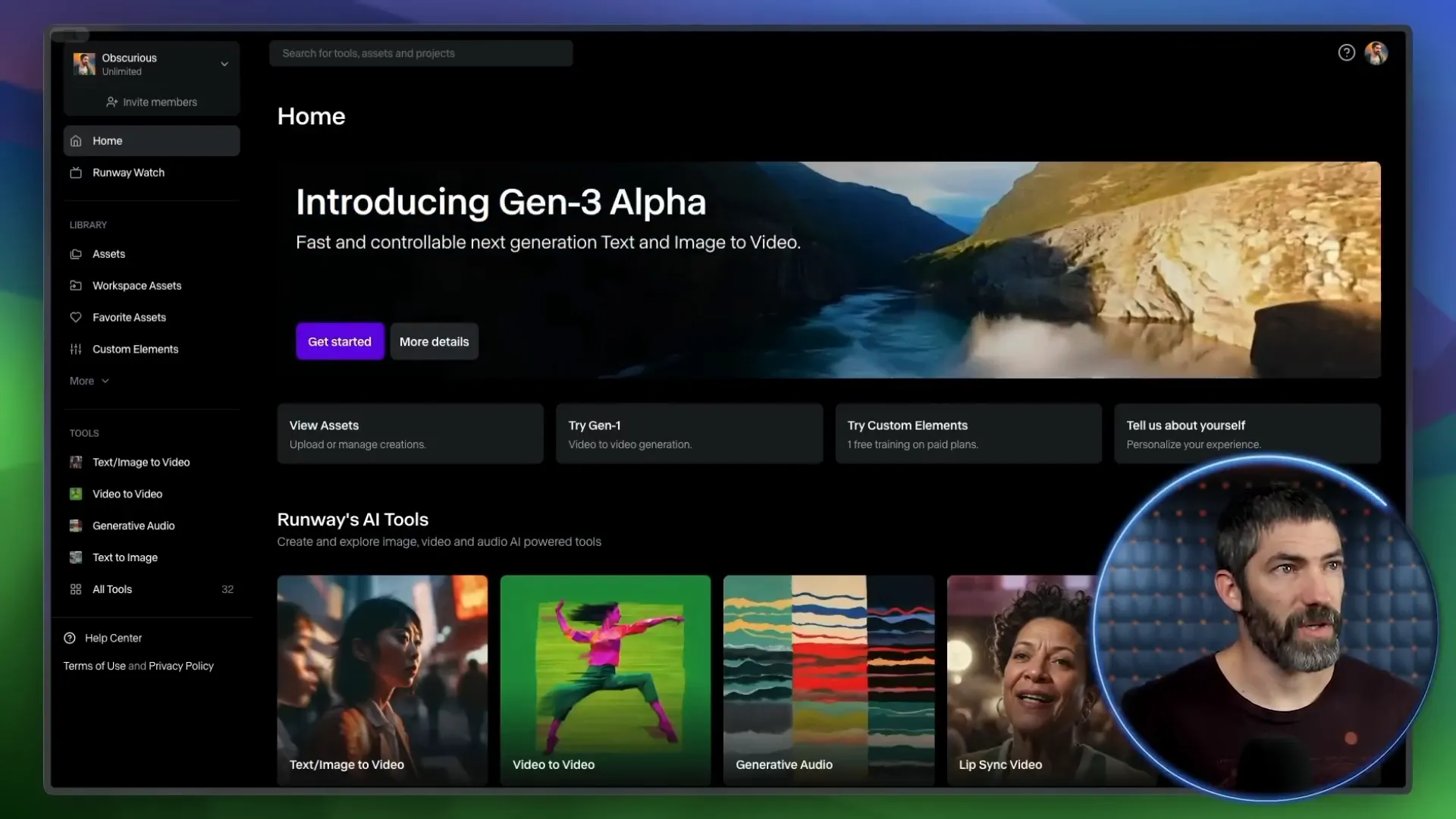

🌀 How to generate

Now, let’s break down the generation process. Here’s how you can create captivating videos step-by-step:

Open the Platform: Launch your chosen AI video generator and familiarize yourself with its layout.

Input Your Prompt: Enter a detailed description of the scene you wish to create. For example, “A serene lake at sunset with mountains in the background.”

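Select Video Length: Where the platform allows it, choose between 5 and 10 seconds. Runway and Kling offer both lengths, while Luma and Pixverse are limited to 5 seconds. Longer clips give the action more room to unfold.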

Adjust Settings: If applicable, tweak settings like aspect ratio and camera movement options to suit your vision.

Hit Generate: Click the button and let the AI work its magic. If the first result isn't perfect, don’t worry—try again!
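The length and settings choices above can be sketched as a small pre-flight check in Python. This is a minimal sketch assuming the clip lengths reported in this comparison (Runway and Kling: 5 or 10 seconds; Luma and Pixverse: 5 seconds). It does not call any platform's API, and `GenerationSettings`, `validate`, and `SUPPORTED_DURATIONS` are hypothetical names for illustration only.

```python
from dataclasses import dataclass

# Clip lengths (seconds) per platform as reported in this comparison.
# These limits change often; treat them as illustrative.
SUPPORTED_DURATIONS = {
    "Runway": (5, 10),
    "Kling": (5, 10),
    "Luma": (5,),
    "Pixverse": (5,),
}


@dataclass
class GenerationSettings:
    """Hypothetical bundle of the choices made before clicking Generate."""
    platform: str
    prompt: str
    duration_s: int = 5
    aspect_ratio: str = "16:9"  # carried along but not checked here


def validate(settings: GenerationSettings) -> list[str]:
    """Return a list of problems; an empty list means the settings look usable."""
    problems = []
    allowed = SUPPORTED_DURATIONS.get(settings.platform)
    if allowed is None:
        problems.append(f"unknown platform: {settings.platform}")
    elif settings.duration_s not in allowed:
        lengths = "/".join(str(d) for d in allowed)
        problems.append(
            f"{settings.platform} supports {lengths}-second clips, not {settings.duration_s}"
        )
    if not settings.prompt.strip():
        problems.append("prompt is empty")
    return problems


request = GenerationSettings(
    platform="Luma",
    prompt="A serene lake at sunset with mountains in the background.",
    duration_s=10,
)
print(validate(request))  # ['Luma supports 5-second clips, not 10']
```

Catching a mismatch like this before generating saves a wasted attempt, which matters more on the platforms that charge per generation.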

🔍 Text to Video Comparisons

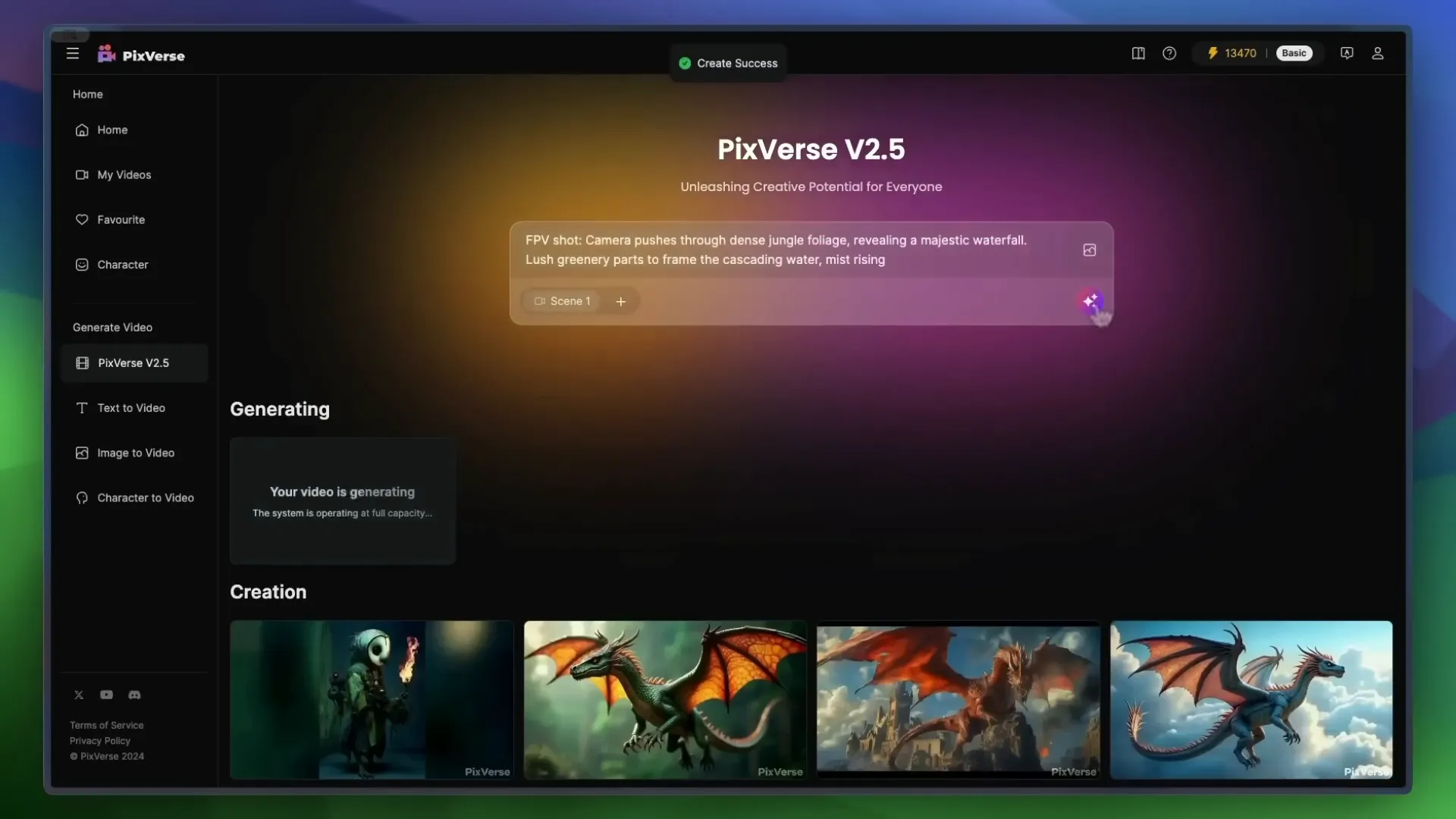

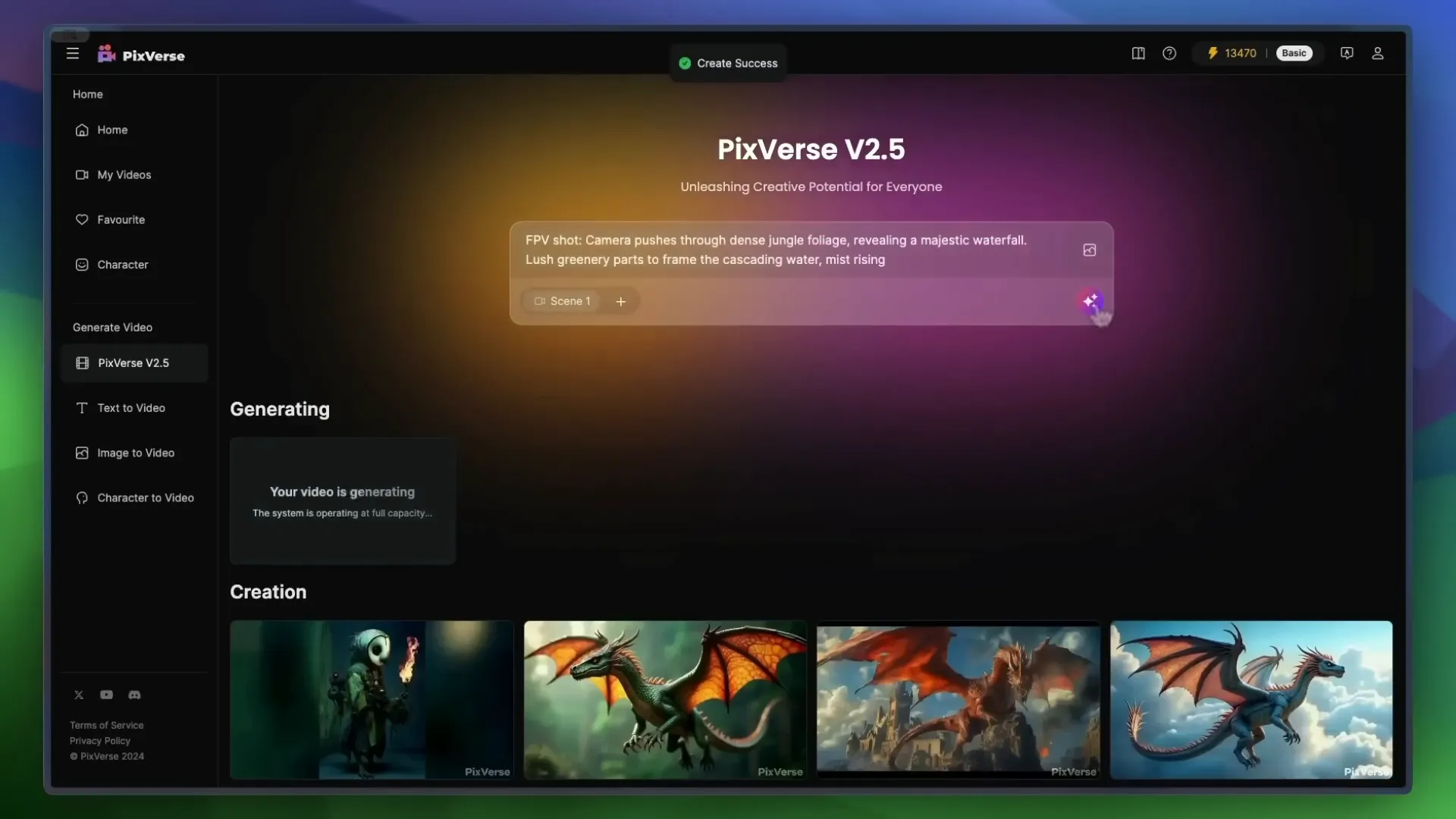

Text-to-video generation is where the real fun begins. With just a few words, you can create visuals that tell a story. But how do these platforms stack up against one another? Let’s compare!

When using text prompts, I ran several tests across all four platforms. The results varied in quality, coherence, and adherence to the prompt. Here’s a snapshot of how they performed:

Runway: Known for its fluid visuals and movement, it nails prompts that require dynamic scenes.

Kling: Often delivers near-perfect results, with excellent adherence to prompts and stunning visuals.

Luma: While it sometimes falls short on detail, it excels in creating motion, especially in lively scenes.

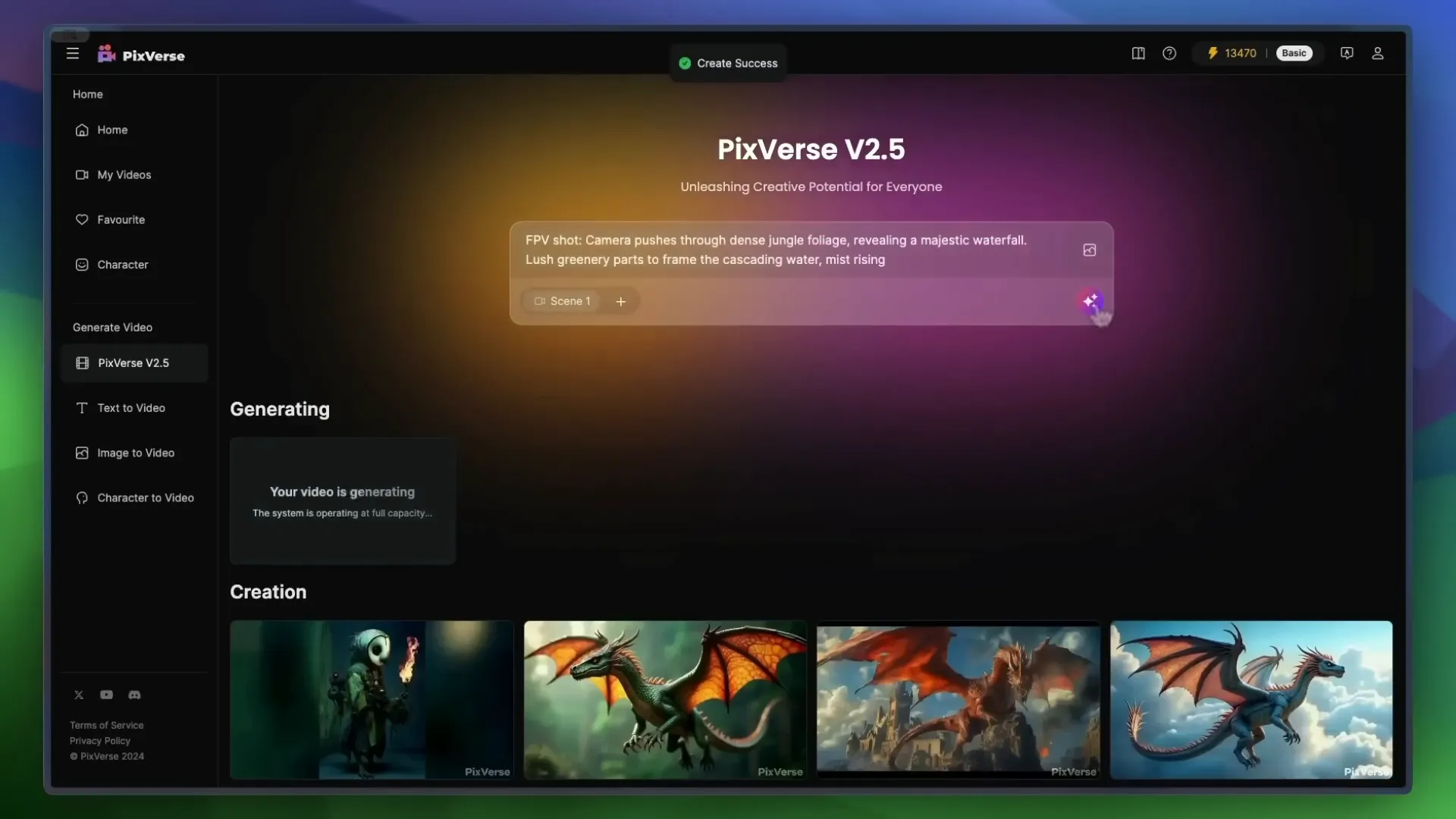

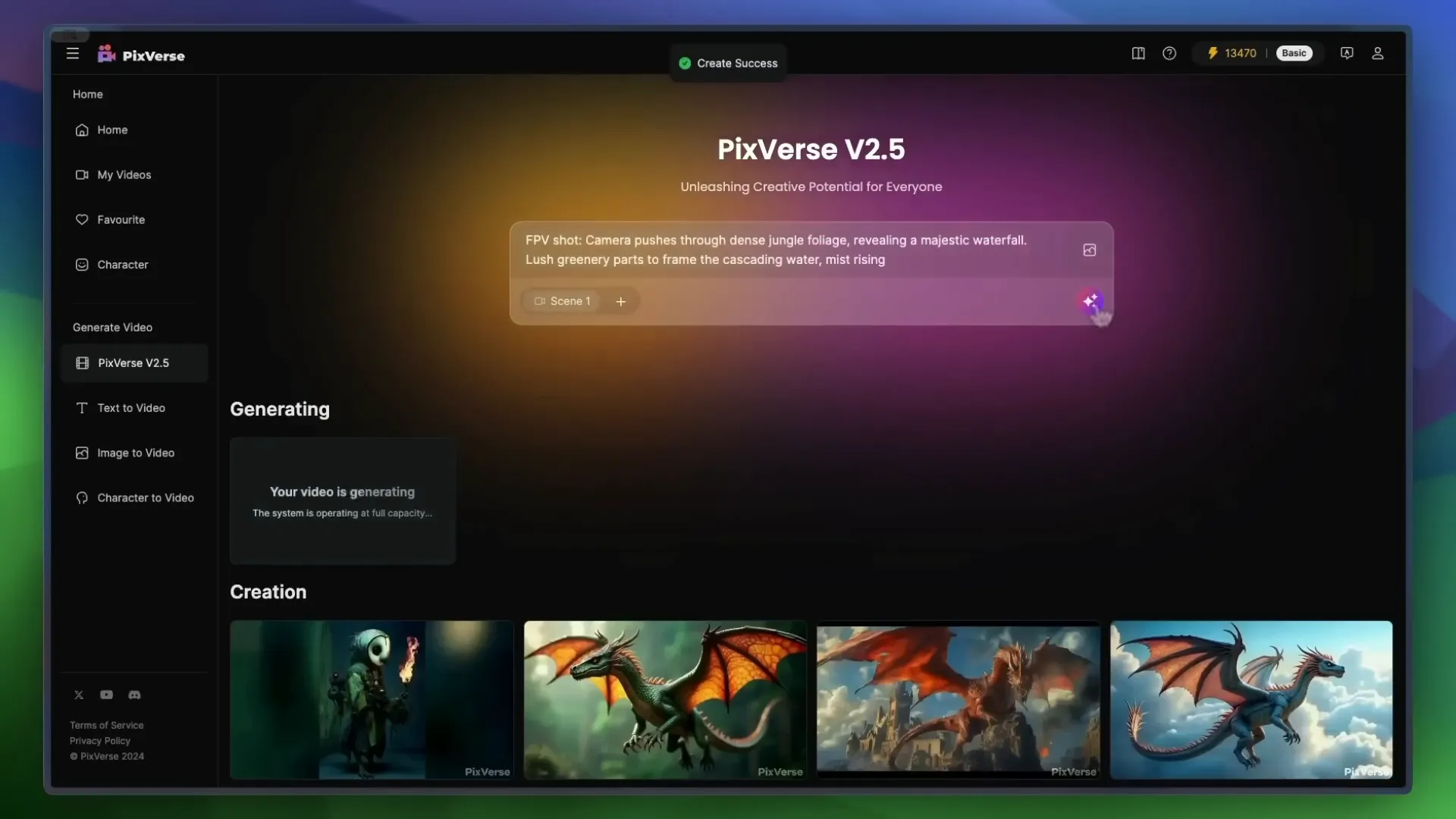

Pixverse: Offers good quality but often has a slow-motion feel that can detract from its appeal.

📊 Sora Text to Video Prompt Comparisons

Let’s get a bit more granular by running some of the prompts OpenAI used to showcase Sora. Here’s how the platforms handled them:

Golden Retrievers Playing in the Snow: Runway captured the playful essence beautifully, while Kling also performed admirably, though with slight morphing.

Dynamic City Time-Lapse: Runway was the standout here, managing to transition from day to night seamlessly.

Chinese New Year Celebration: Runway again took the lead with coherent faces and a decent dragon, while Kling struggled with the dragon's appearance.

📝 Writing Text Prompts

Writing a text prompt for video isn’t just about typing in a few words; it’s about describing a scene the AI can visualize. Here’s how to get the most out of your text input:

Be Descriptive: The more vivid your description, the better the AI can interpret it. Instead of saying “a dog,” try “a golden retriever joyfully fetching a ball in a sunny park.”

Use Action Words: Verbs are your friends! Words like “soar,” “dive,” and “glide” help the AI visualize movement.

Specify the Environment: Mentioning details about the setting enhances the scene. Think about the time of day, weather, and surrounding elements.
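The three tips above amount to filling in a few slots: subject, action, and environment. Here is a minimal sketch of that idea in Python. `build_prompt` is a hypothetical helper, not a feature of any of these platforms, and the optional `details` slot (for things like camera direction) is an assumption added for illustration.

```python
def build_prompt(subject: str, action: str, environment: str = "", details: str = "") -> str:
    """Assemble a text-to-video prompt from separate slots.

    subject:     who or what is in the shot, e.g. "a golden retriever"
    action:      a verb phrase that implies motion, e.g. "joyfully fetching a ball"
    environment: setting, time of day, weather, e.g. "in a sunny park"
    details:     optional extras such as camera direction (an assumption, not a rule)
    """
    core = " ".join(part.strip() for part in (subject, action, environment) if part.strip())
    if not core:
        raise ValueError("a prompt needs at least a subject or an action")
    if details.strip():
        core += ", " + details.strip()
    # Capitalize the first letter and end with a period, leaving the rest as written.
    return core[0].upper() + core[1:] + "."


print(build_prompt("a golden retriever", "joyfully fetching a ball", "in a sunny park"))
# A golden retriever joyfully fetching a ball in a sunny park.
```

Keeping the pieces separate makes it easy to reroll with a single slot changed, such as a different time of day, while holding everything else constant.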

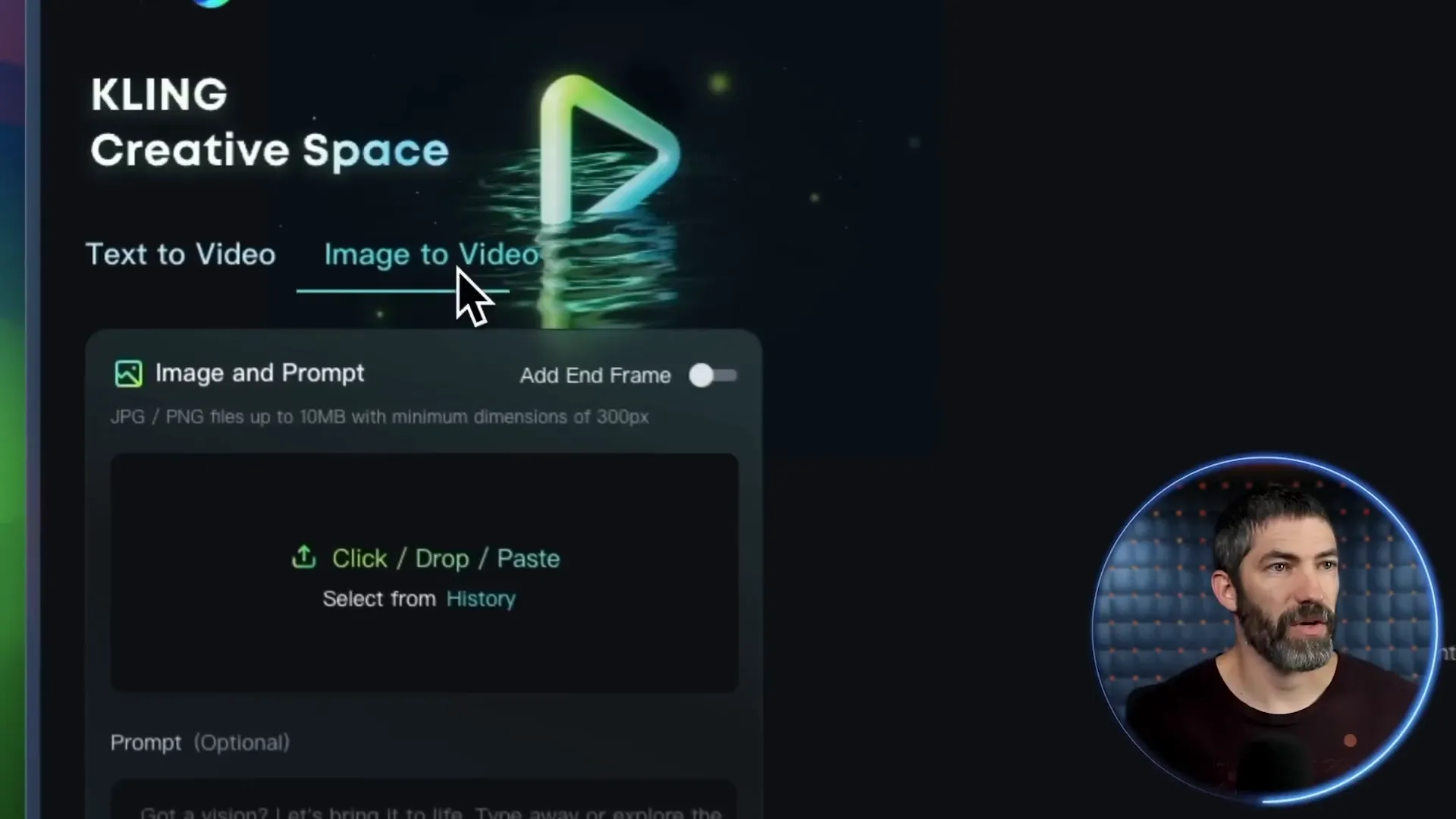

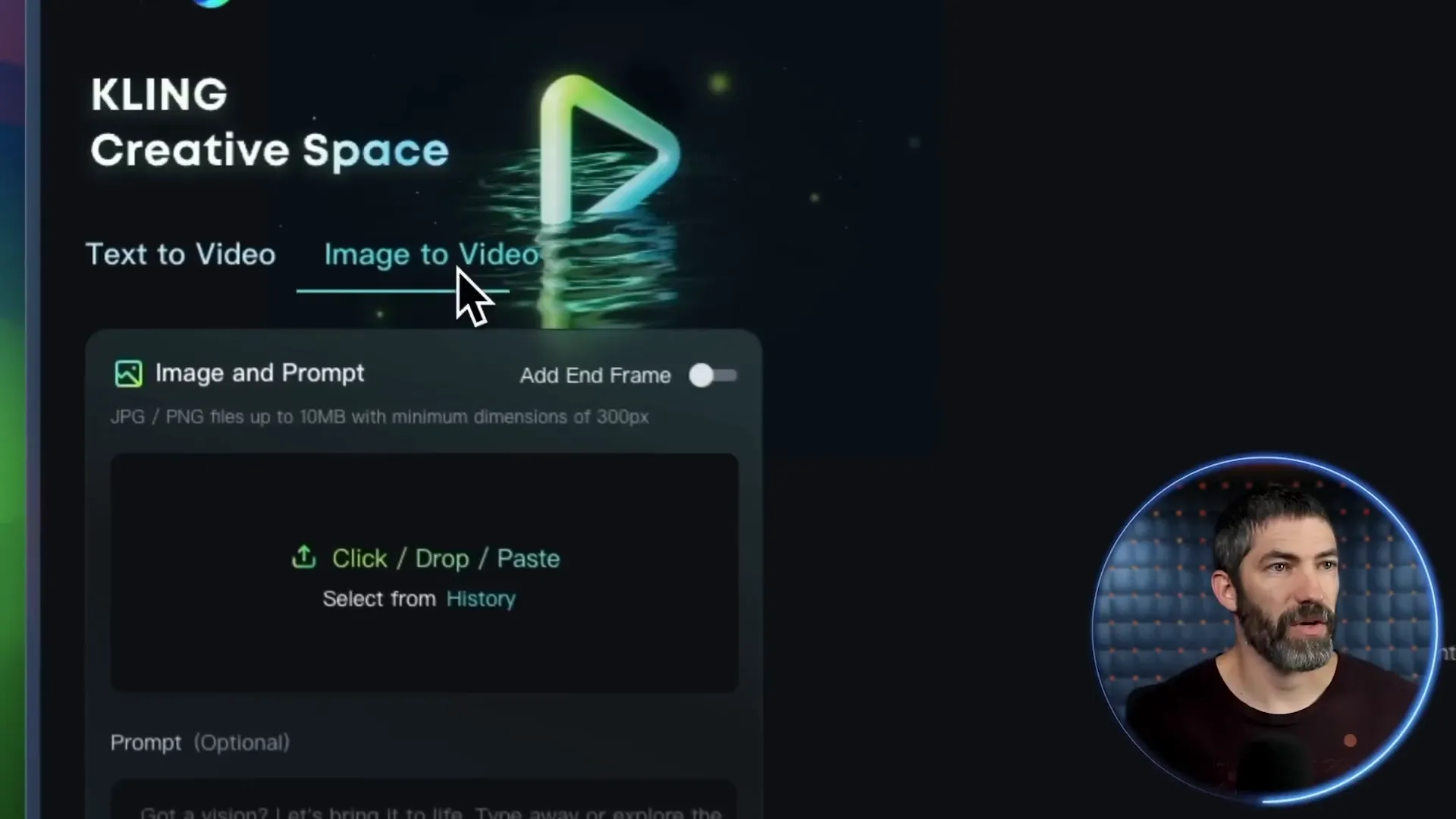

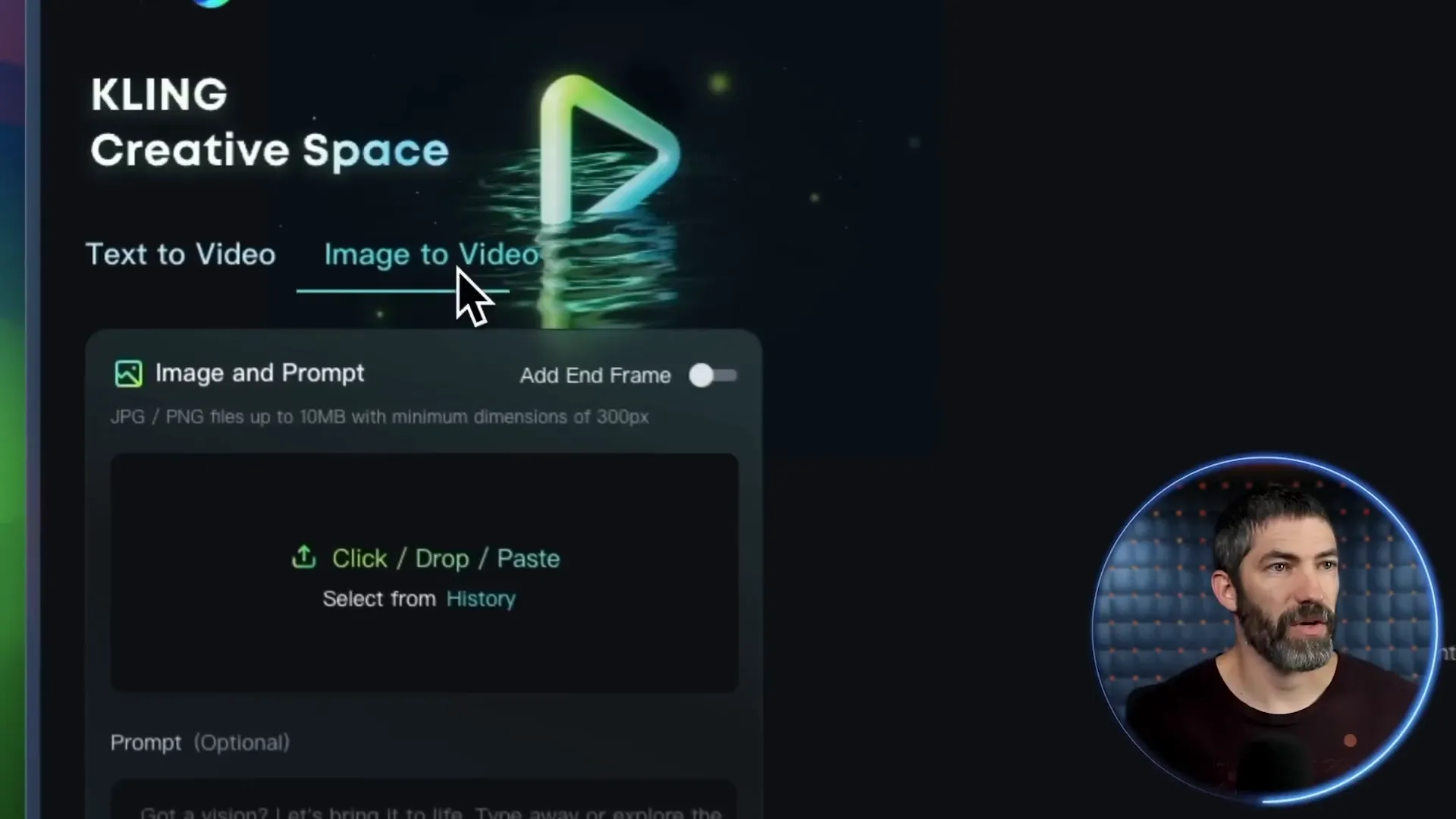

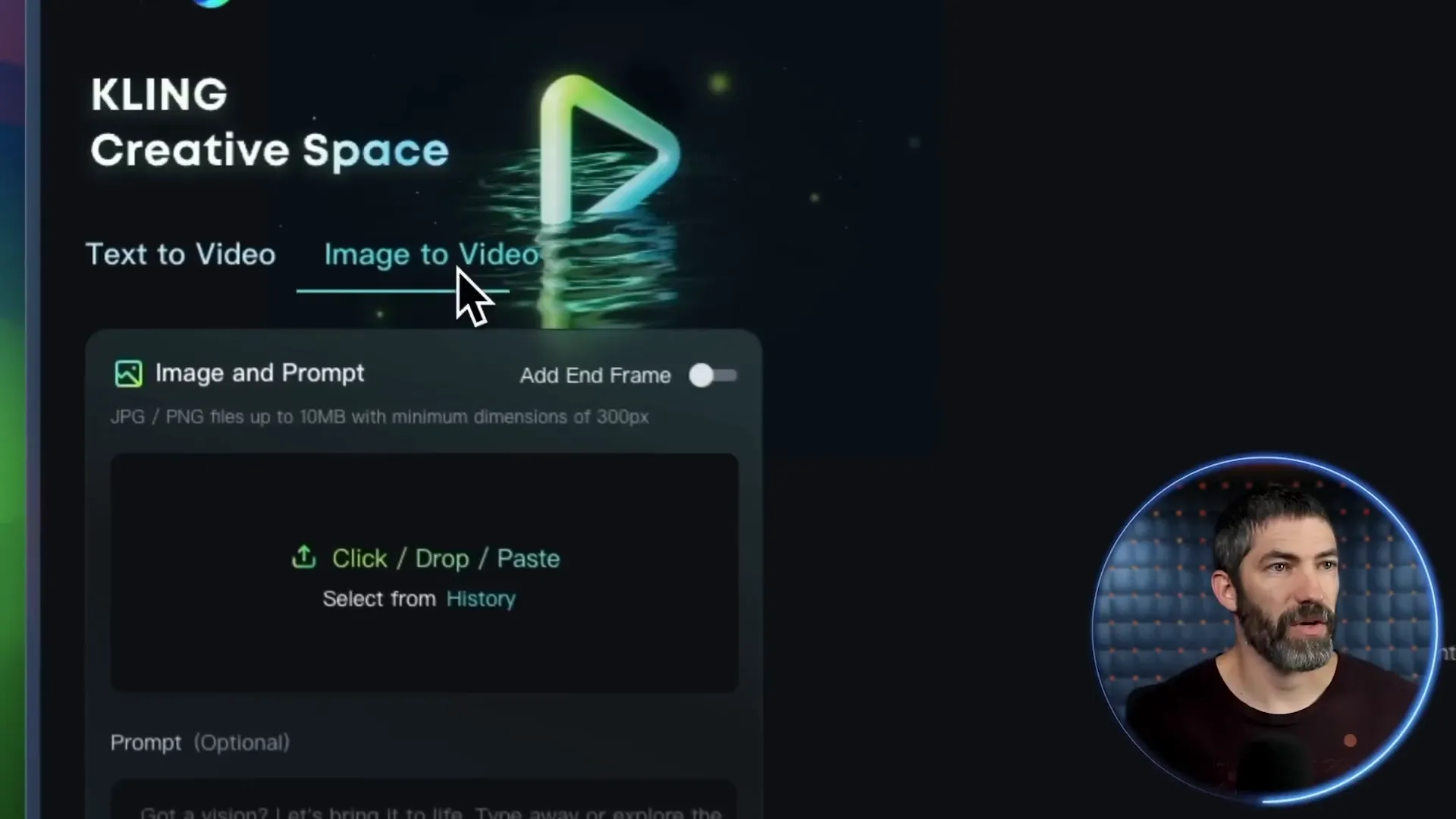

🖼️ How to Turn an Image into Video

Switching gears to image-to-video generation! This method allows you to start with an image, giving you more control over the output. Here’s how to do it:

Upload Your Image: Choose a high-quality image that represents the scene you want to create.

Input a Prompt: Describe what you want the video to depict based on the image. For example, “A cat chopping fish on a cutting board.”

Adjust Settings: Set your desired video length and any additional parameters.

Generate: Click the generate button and watch as your static image transforms into a dynamic video!

🎬 Image to Video Comparisons

Next, let’s look at how the platforms perform with image-to-video prompts. Here’s what I discovered:

Runway: Maintains coherence and showcases excellent motion, though it sometimes gets the finer details of the action wrong.

Kling: Stands out with precise cuts and movement, making it a top choice for image-based prompts.

Luma: Produces visually appealing results but often falls into a slow-motion trap.

Pixverse: While it offers decent visuals, it tends to lack fidelity compared to its competitors.

🛹 Skateboarding

Alright, let’s talk about skateboarding, a sport that’s as much about style as it is about technique. I wanted to see how well these AI tools could handle its dynamic movements, so I used a prompt in which I catch a skateboard and land in a parking lot. Sounds simple, right? Wrong!

Runway went completely bonkers with this one. Picture this: a camera guy suddenly appears with a bike, then we morph together into some surreal entity. It’s like a bizarre art piece gone wrong!

Kling, on the other hand, at least seemed to grasp the concept, which is a win in my book. It tried to deliver a coherent skateboard trick, but let’s just say it wasn’t exactly Tony Hawk material.

Luma sort of understood the prompt too, but it was like watching a toddler try to skate: cute, but not quite there. And Pixverse? Well, it started to grasp the idea but then spiraled into pure chaos. It’s evident that figuring out the physics of skateboarding is a tall order for these AIs.

👾 The Final Boss

After testing these platforms, I can confidently say we’re reaching the final boss level of AI video generation! The advancements are staggering, but there are still hurdles to clear. Each platform has its strengths, but none have truly conquered the complexities of action sports like skateboarding. It’s as if they’re stuck in a glitchy video game, trying to figure out how to animate a simple kickflip.

So, what’s the takeaway? While we’re on the verge of something groundbreaking, we still have a ways to go before these tools can perfectly replicate the fluidity and physics of real-life skateboarding. But hey, that’s what makes this journey exciting, right?

🧑‍⚖️ The Verdict

So, who comes out on top? After extensive testing, it’s clear that Kling reigns supreme in overall quality. It consistently produced the best results across various prompts, including those pesky action shots. Runway follows closely behind, particularly for its speed and dynamic visuals.

Luma and Pixverse? They have their moments, but they often lag behind in quality, especially with complex scenes. If you want the best quality for your money, Kling is the way to go.

💰 Price and Speed Comparison

Now, let’s break down price and speed. Kling not only topped the quality charts but also offers more bang for your buck: it costs almost half as much per generation as Runway, making it a no-brainer for budget-conscious creators.

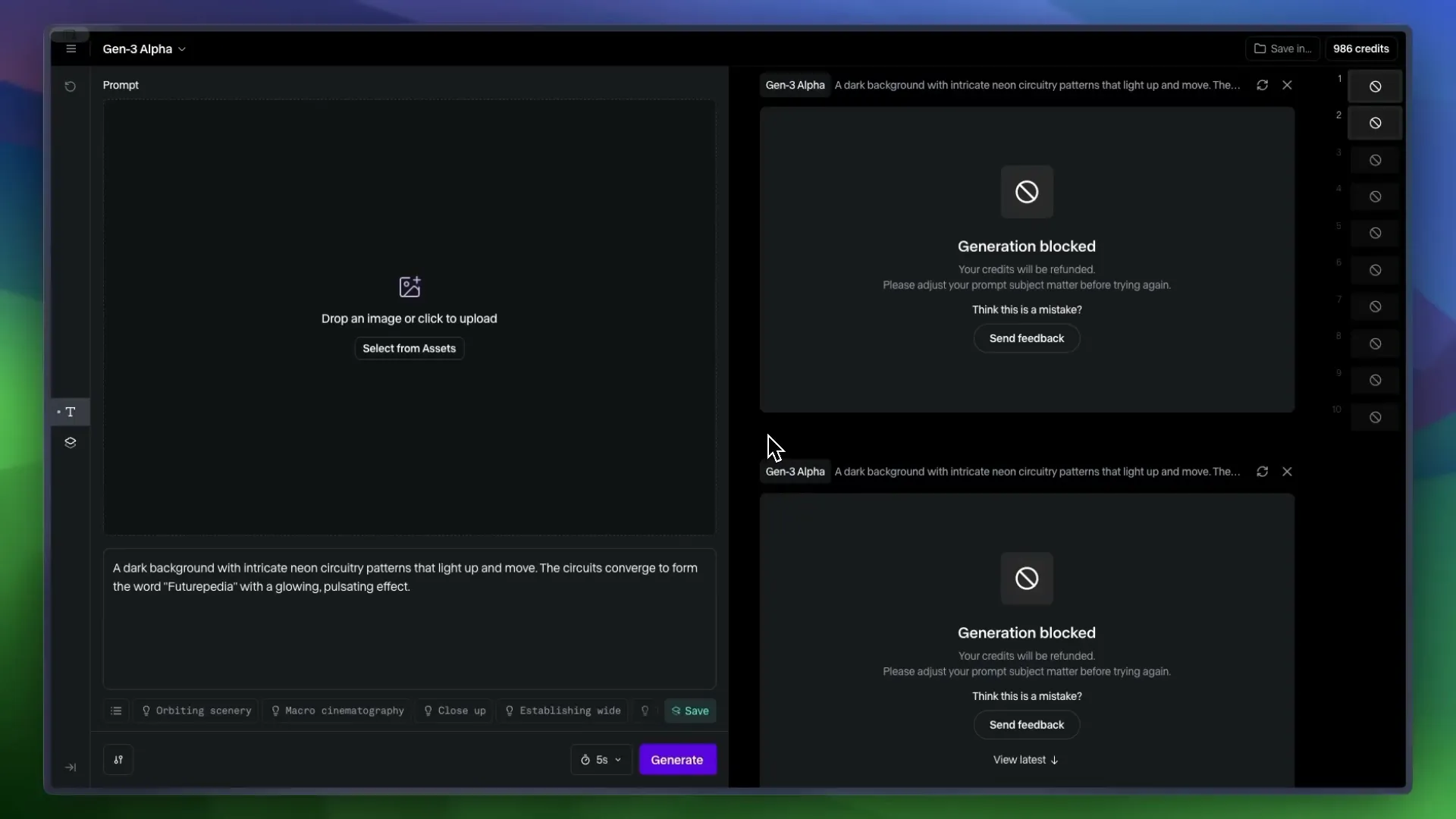

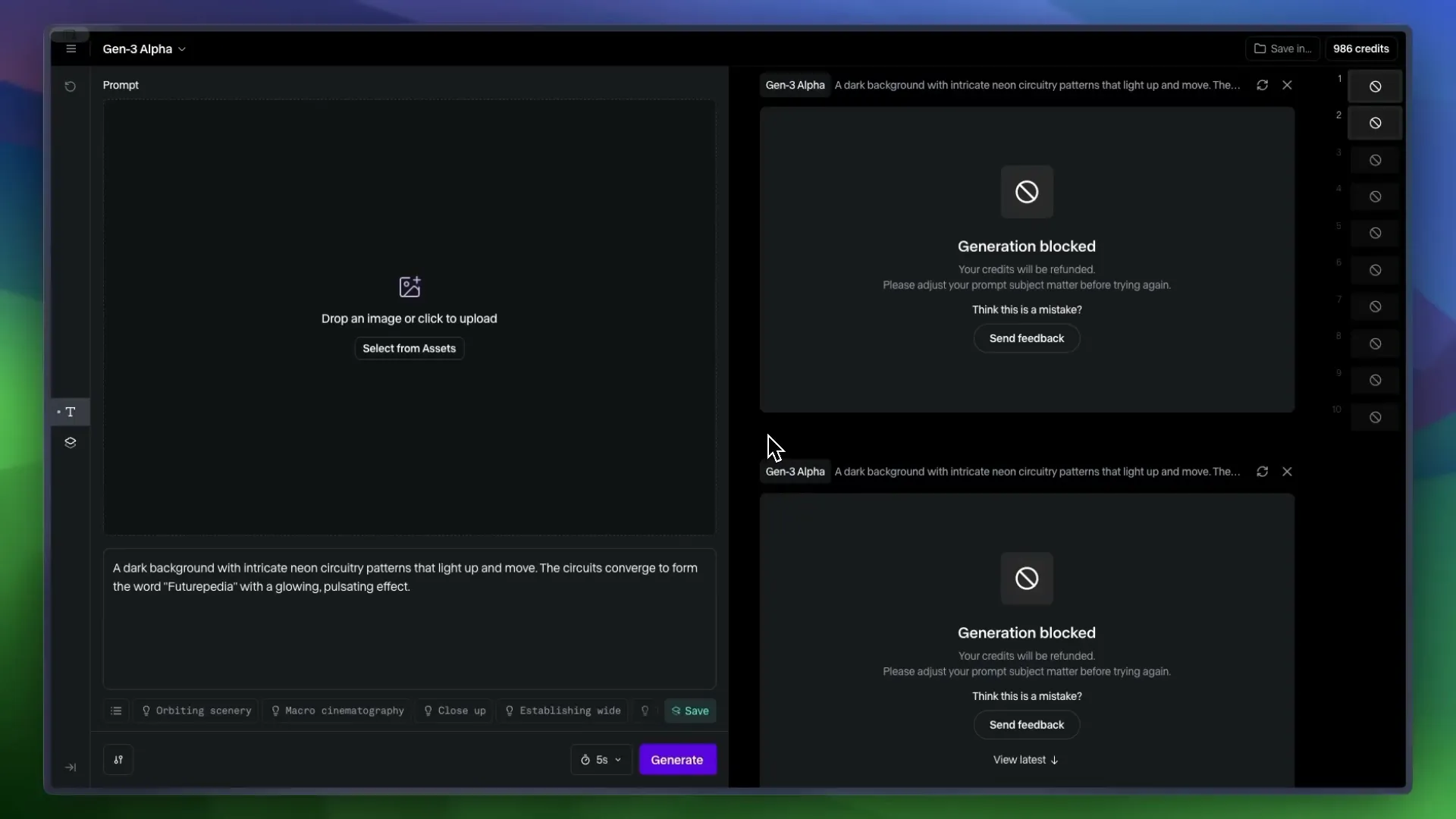

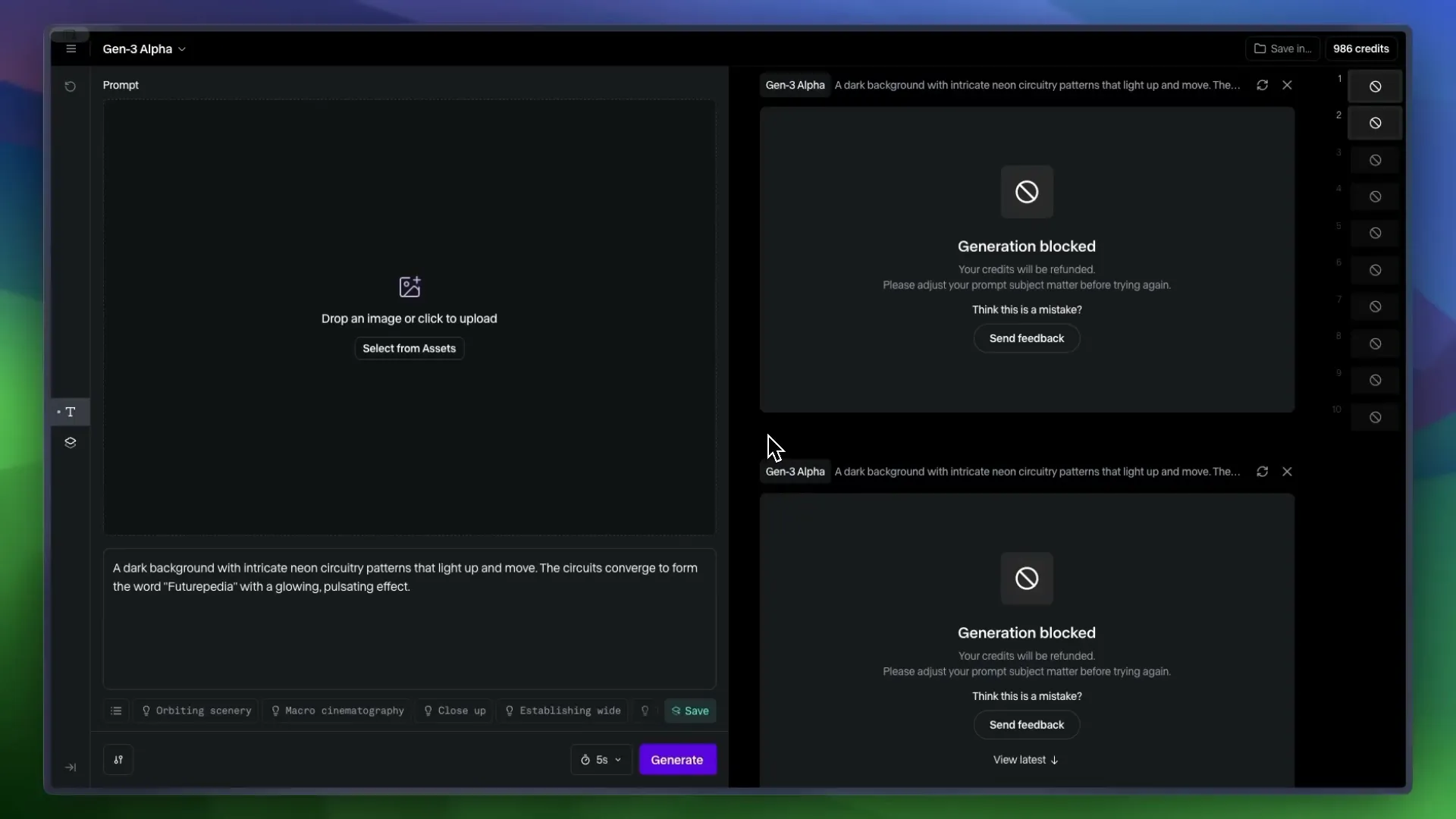

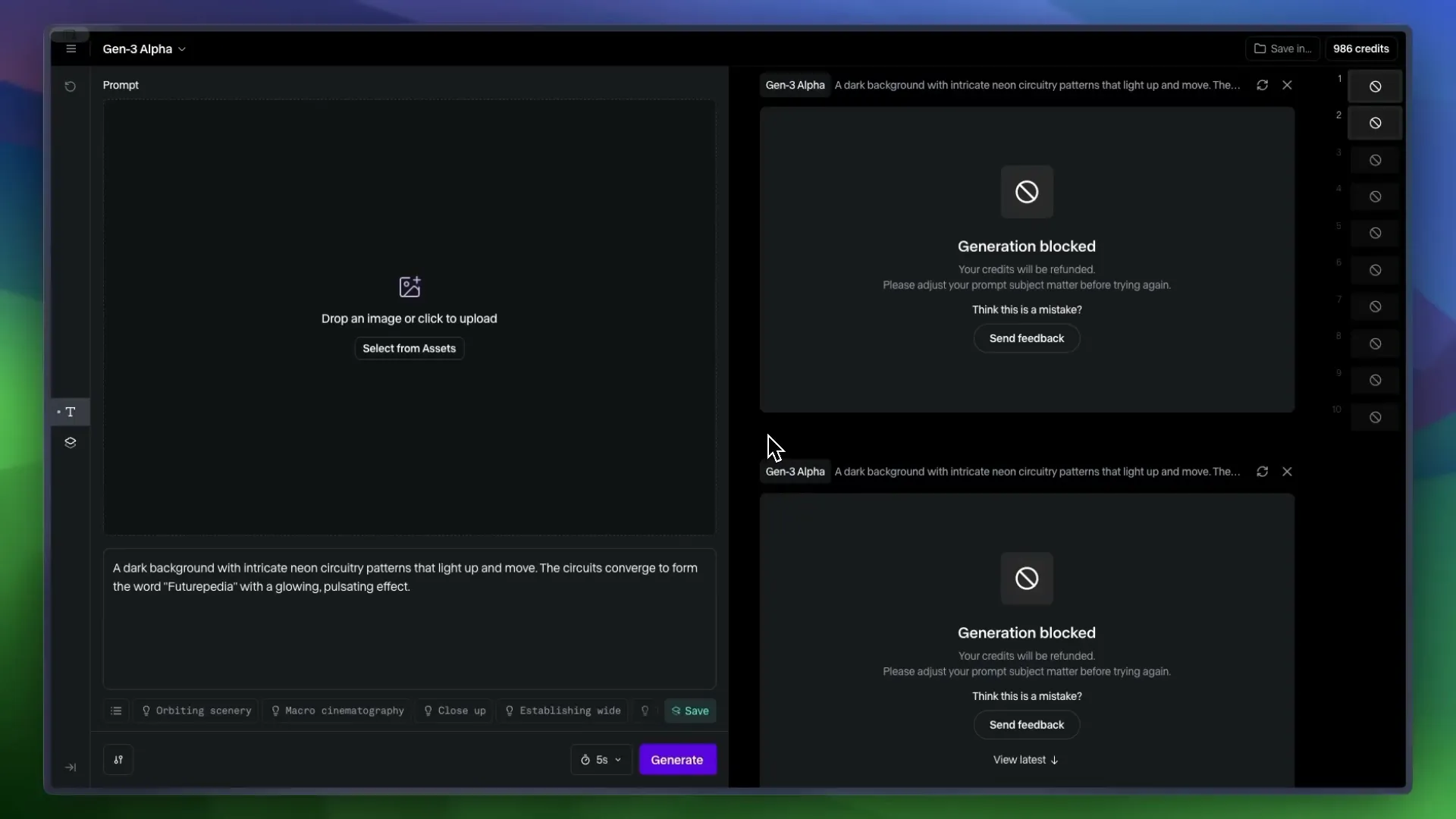

Runway, while pricier, boasts faster generation times across the board. If you need quick turnarounds on your projects, it’s hard to beat Runway’s efficiency. Just keep in mind that it occasionally blocks prompts.

Luma and Pixverse are cheaper alternatives, but they come with compromises in quality. For casual projects that don’t need top-tier results, they’re worth considering. For serious projects, though, the investment in Kling or Runway pays off.

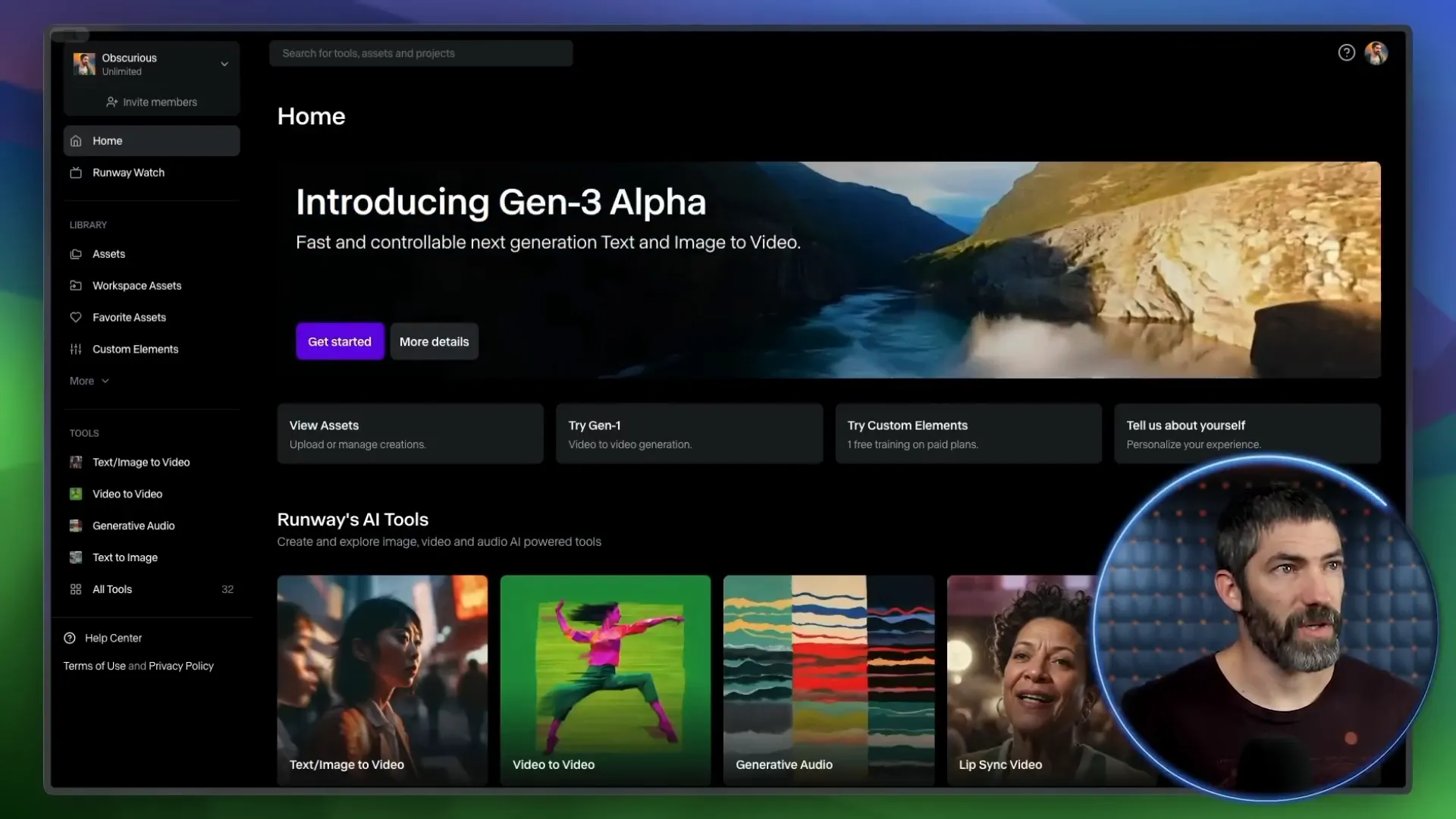

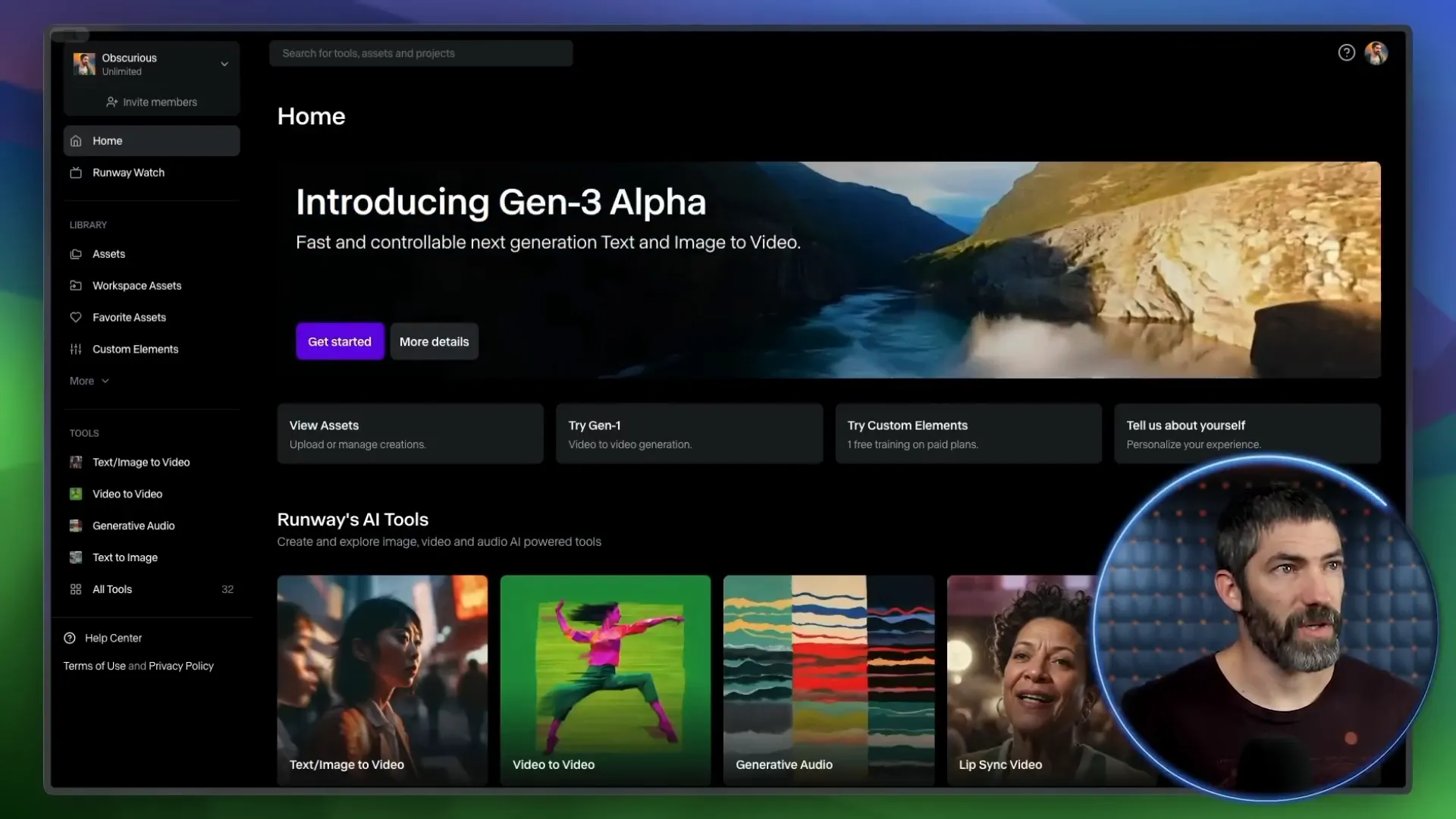

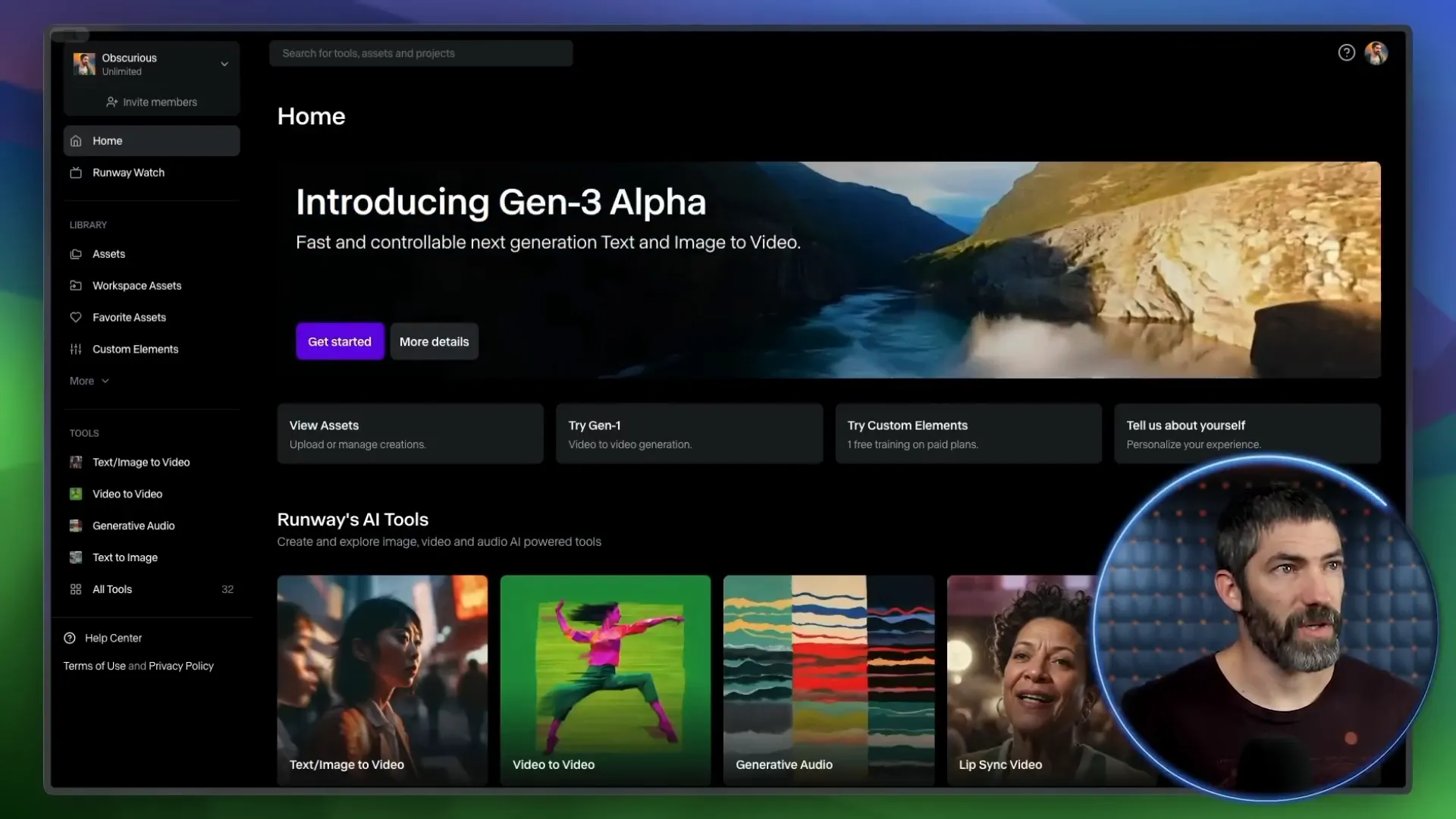
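Price per generation only tells part of the story, since some clips get rerolled. A rough way to compare tools is the expected cost per usable clip: if each generation is usable with probability p, you need 1/p attempts on average, so the expected cost is the price divided by p. The sketch below assumes attempts are independent, which real rerolls only roughly satisfy, and the prices and keep rates in the example are made up, not measurements from this test. `expected_cost_per_keeper` is a hypothetical name.

```python
def expected_cost_per_keeper(price_per_generation: float, keep_rate: float) -> float:
    """Expected spend to obtain one usable clip.

    Assumes each generation is independently usable with probability keep_rate.
    The number of attempts until the first keeper is then geometric with mean
    1 / keep_rate, so the expected cost is price_per_generation / keep_rate.
    """
    if price_per_generation < 0:
        raise ValueError("price_per_generation must be non-negative")
    if not 0 < keep_rate <= 1:
        raise ValueError("keep_rate must be in the range (0, 1]")
    return price_per_generation / keep_rate


# Made-up numbers for illustration only (arbitrary credit units, not real prices):
# tool A costs 1.0 per generation and 1 in 2 clips is usable;
# tool B costs half as much but only 1 in 5 clips is usable.
print(expected_cost_per_keeper(1.0, 0.5))  # 2.0
print(expected_cost_per_keeper(0.5, 0.2))  # 2.5
```

In this made-up case the tool that is cheaper per generation ends up costing more per usable clip, which is why quality and price belong in the same calculation.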

🔍 Features Breakdown

Let’s dive into the features! All four platforms offer text-to-video and image-to-video capabilities, but the execution varies. Kling and Luma let you set an end frame, which is a neat feature for storytelling. Runway and Kling offer 5- or 10-second videos, while Luma and Pixverse stick to 5 seconds.

Runway excels at following camera directions through prompting, giving you more creative control. Plus, you can remove watermarks on all platforms except Pixverse. And let’s not forget about the unlimited plan that Runway offers for $95 a month—perfect for those hefty projects!
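To turn the breakdown above into a quick decision aid, the features can be encoded as a lookup table. The values below are transcribed from this article as of October 2024, not from official documentation, and a `False` simply means the feature wasn't credited to that platform here. `FEATURES` and `platforms_with` are hypothetical names.

```python
# Features as reported in this comparison (October 2024); plans change often,
# so check each platform before relying on these values.
FEATURES = {
    "Runway": {"durations": {5, 10}, "end_frame": False, "watermark_removal": True},
    "Kling": {"durations": {5, 10}, "end_frame": True, "watermark_removal": True},
    "Luma": {"durations": {5}, "end_frame": True, "watermark_removal": True},
    "Pixverse": {"durations": {5}, "end_frame": False, "watermark_removal": False},
}


def platforms_with(min_duration_s: int = 5,
                   need_end_frame: bool = False,
                   need_watermark_removal: bool = False) -> list[str]:
    """Return the platforms (alphabetically) that meet every requirement."""
    matches = []
    for name, f in FEATURES.items():
        if max(f["durations"]) < min_duration_s:
            continue  # cannot produce clips this long
        if need_end_frame and not f["end_frame"]:
            continue
        if need_watermark_removal and not f["watermark_removal"]:
            continue
        matches.append(name)
    return sorted(matches)


print(platforms_with(min_duration_s=10, need_end_frame=True))  # ['Kling']
```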

📋 Final Summary

In conclusion, the race for AI video generation supremacy is heating up. Kling stands out for its quality and affordability, while Runway is your go-to for speed. Luma and Pixverse are viable options for casual creators who don’t mind sacrificing some quality.

As we push forward, expect these platforms to evolve even further. The future is bright for AI in video generation, and we’re just getting started!

In the rapidly evolving world of AI video generation, four major players have recently upped their game: Runway Gen 3, Kling, Luma Dream Machine, and Pixverse. Join us as we dive deep into their features, performance, and value, helping you determine which tool is the best fit for your creative needs.

🚀 Intro

Welcome to the future of video creation! The landscape of AI video generators is evolving at lightning speed, and it’s about time you get in on the action. Imagine crafting breathtaking videos with just a few clicks—sounds like magic, right? Well, it’s not magic; it’s technology!

Whether you're a seasoned creator or just stepping into the world of video production, the advancements in AI tools like Runway, Kling, Luma Dream Machine, and Pixverse are game-changers. In this blog, we'll explore how these platforms work, how to generate stunning videos, and even compare text and image inputs to optimize your creative process.

So, buckle up! Let’s dive into the nitty-gritty of how you can leverage these powerful tools to bring your wildest ideas to life.

⚙️ How it will work

Getting started with AI video generation is as easy as pie. Each platform offers a user-friendly interface that guides you through the process. Here’s the lowdown:

Choose Your Tool: Pick one of the platforms—Runway, Kling, Luma, or Pixverse. Each has unique strengths, so choose wisely!

Input Your Prompt: Type in a descriptive prompt for what you want to create. Be as specific as possible to get the best results.

Select Settings: Depending on the platform, you might set parameters like video length, resolution, and creativity level.

Generate: Hit that generate button and watch the magic unfold. Sometimes it takes a few tries to nail the perfect video, so don’t hesitate to reroll!

🌀 How to generate

Now, let’s break down the generation process. Here’s how you can create captivating videos step-by-step:

Open the Platform: Launch your chosen AI video generator and familiarize yourself with its layout.

Input Your Prompt: Enter a detailed description of the scene you wish to create. For example, “A serene lake at sunset with mountains in the background.”

Select Video Length: Choose between 5 or 10 seconds, depending on how much detail you want to capture.

Adjust Settings: If applicable, tweak settings like aspect ratio and camera movement options to suit your vision.

Hit Generate: Click the button and let the AI work its magic. If the first result isn't perfect, don’t worry—try again!

🔍 Text to Video Comparisons

Text-to-video generation is where the real fun begins. With just a few words, you can create visuals that tell a story. But how do these platforms stack up against one another? Let’s compare!

When using text prompts, I ran several tests across all four platforms. The results varied in quality, coherence, and adherence to the prompt. Here’s a snapshot of how they performed:

Runway: Known for its fluid visuals and movement, it nails prompts that require dynamic scenes.

Kling: Often delivers near-perfect results, with excellent adherence to prompts and stunning visuals.

Luma: While it sometimes falls short on detail, it excels in creating motion, especially in lively scenes.

Pixverse: Offers good quality but often has a slow-motion feel that can detract from its appeal.

📊 Sora Text to Video Prompt Comparisons

Let’s get a bit more granular with Sora's text-to-video prompts. Here’s a breakdown of how Sora's prompts performed across the different platforms:

Golden Retrievers Playing in the Snow: Runway captured the playful essence beautifully, while Kling also performed admirably, though with slight morphing.

Dynamic City Time-Lapse: Runway was the standout here, managing to transition from day to night seamlessly.

Chinese New Year Celebration: Runway again took the lead with coherent faces and a decent dragon, while Kling struggled with the dragon's appearance.

📝 Generating Text

Generating text for video prompts isn’t just about typing in a few words. It's about crafting a narrative that the AI can visualize. Here’s how to maximize your text input:

Be Descriptive: The more vivid your description, the better the AI can interpret it. Instead of saying “a dog,” try “a golden retriever joyfully fetching a ball in a sunny park.”

Use Action Words: Verbs are your friends! Words like “soar,” “dive,” and “glide” help the AI visualize movement.

Specify the Environment: Mentioning details about the setting enhances the scene. Think about the time of day, weather, and surrounding elements.

🖼️ How to Image to Video

Switching gears to image-to-video generation! This method allows you to start with an image, giving you more control over the output. Here’s how to do it:

Upload Your Image: Choose a high-quality image that represents the scene you want to create.

Input a Prompt: Describe what you want the video to depict based on the image. For example, “A cat chopping fish on a cutting board.”

Adjust Settings: Set your desired video length and any additional parameters.

Generate: Click the generate button and watch as your static image transforms into a dynamic video!

🎬 Image to Video Comparisons

Finally, let’s look at how the platforms perform with image-to-video prompts. Here’s what I discovered:

Runway: Maintains coherence and showcases excellent motion, though it sometimes lacks in technique.

Kling: Stands out with precise cuts and movement, making it a top choice for image-based prompts.

Luma: Produces visually appealing results but often falls into a slow-motion trap.

Pixverse: While it offers decent visuals, it tends to lack fidelity compared to its competitors.

🛹 Skateboarding

Alright, let’s talk about skateboarding! A sport that’s as much about style as it is about technique. I decided to test how well these AI tools could handle the dynamic movements of skateboarding. I used a prompt where I’m supposed to catch a skateboard and land in a parking lot. Sounds simple, right? Wrong!

Runway went completely bonkers with this one. Picture this: a camera guy suddenly appears with a bike, then we morph together into some surreal entity. It’s like a bizarre art piece gone wrong!

Cling, on the other hand, at least seemed to grasp the concept, which is a win in my book. It tried to deliver a coherent skateboard trick, but let’s just say it wasn’t exactly Tony Hawk material.

Luma sort of understood the prompt too, but it was like watching a toddler try to skate: cute, but not quite there. And Pixverse? Well, it started to grasp the idea but then spiraled into pure chaos. It’s evident that figuring out the physics of skateboarding is a tall order for these AIs.

👾 The Final Boss

After testing these platforms, I can confidently say we’re reaching the final boss level of AI video generation! The advancements are staggering, but there are still hurdles to clear. Each platform has its strengths, but none have truly conquered the complexities of action sports like skateboarding. It’s as if they’re stuck in a glitchy video game, trying to figure out how to animate a simple kickflip.

So, what’s the takeaway? While we’re on the verge of something groundbreaking, we still have a ways to go before these tools can perfectly replicate the fluidity and physics of real-life skateboarding. But hey, that’s what makes this journey exciting, right?

🧑⚖️ The Verdict

So, who comes out on top? After extensive testing, it’s clear that Kling reigns supreme in overall quality. It consistently produced the best results across various prompts, including those pesky action shots. Runway follows closely behind, particularly for its speed and dynamic visuals.

Luma and Pixverse? They have their moments, but they often lag behind in quality, especially when it comes to complex scenes. If you’re looking for the best quality for your buck, Kling is the way to go.

💰 Price and Speed Comparison

Now, let’s break down the price and speed. Kling not only topped the quality charts but also offered more bang for your buck. It’s almost half the price per generation compared to Runway, making it a no-brainer for budget-conscious creators.

Runway, while pricier, boasts faster generation times across the board. If you need quick turns on your projects, it’s hard to beat Runway’s efficiency. Just keep in mind its occasional hiccups with blocked prompts.

Luma and Pixverse are cheaper alternatives, but they come with compromises in quality. If you don’t need top-tier results for something casual, they’re worth considering. But for serious projects, the investment in Kling or Runway pays off.

🔍 Features Breakdown

Let’s dive into the features! All four platforms offer text-to-video and image-to-video capabilities, but the execution varies. Kling and Luma allow for end frames, which is a neat feature for storytelling. Runway and Kling provide options for 5 or 10-second videos, while Luma and Pixverse stick to 5 seconds.

Runway excels at following camera directions through prompting, giving you more creative control. Plus, you can remove watermarks on all platforms except Pixverse. And let’s not forget about the unlimited plan that Runway offers for $95 a month—perfect for those hefty projects!

📋 Final Summary

In conclusion, the race for AI video generation supremacy is heating up. Kling stands out for its quality and affordability, while Runway is your go-to for speed. Luma and Pixverse are viable options for casual creators who don’t mind sacrificing some quality.

As we push forward, expect these platforms to evolve even further. The future is bright for AI in video generation, and we’re just getting started!

In the rapidly evolving world of AI video generation, four major players have recently upped their game: Runway Gen 3, Kling, Luma Dream Machine, and Pixverse. Join us as we dive deep into their features, performance, and value, helping you determine which tool is the best fit for your creative needs.

🚀 Intro

Welcome to the future of video creation! The landscape of AI video generators is evolving at lightning speed, and it’s about time you get in on the action. Imagine crafting breathtaking videos with just a few clicks—sounds like magic, right? Well, it’s not magic; it’s technology!

Whether you're a seasoned creator or just stepping into the world of video production, the advancements in AI tools like Runway, Kling, Luma Dream Machine, and Pixverse are game-changers. In this blog, we'll explore how these platforms work, how to generate stunning videos, and even compare text and image inputs to optimize your creative process.

So, buckle up! Let’s dive into the nitty-gritty of how you can leverage these powerful tools to bring your wildest ideas to life.

⚙️ How it will work

Getting started with AI video generation is as easy as pie. Each platform offers a user-friendly interface that guides you through the process. Here’s the lowdown:

Choose Your Tool: Pick one of the platforms—Runway, Kling, Luma, or Pixverse. Each has unique strengths, so choose wisely!

Input Your Prompt: Type in a descriptive prompt for what you want to create. Be as specific as possible to get the best results.

Select Settings: Depending on the platform, you might set parameters like video length, resolution, and creativity level.

Generate: Hit that generate button and watch the magic unfold. Sometimes it takes a few tries to nail the perfect video, so don’t hesitate to reroll!

🌀 How to generate

Now, let’s break down the generation process. Here’s how you can create captivating videos step-by-step:

Open the Platform: Launch your chosen AI video generator and familiarize yourself with its layout.

Input Your Prompt: Enter a detailed description of the scene you wish to create. For example, “A serene lake at sunset with mountains in the background.”

Select Video Length: Choose between 5 or 10 seconds, depending on how much detail you want to capture.

Adjust Settings: If applicable, tweak settings like aspect ratio and camera movement options to suit your vision.

Hit Generate: Click the button and let the AI work its magic. If the first result isn't perfect, don’t worry—try again!

🔍 Text to Video Comparisons

Text-to-video generation is where the real fun begins. With just a few words, you can create visuals that tell a story. But how do these platforms stack up against one another? Let’s compare!

When using text prompts, I ran several tests across all four platforms. The results varied in quality, coherence, and adherence to the prompt. Here’s a snapshot of how they performed:

Runway: Known for its fluid visuals and movement, it nails prompts that require dynamic scenes.

Kling: Often delivers near-perfect results, with excellent adherence to prompts and stunning visuals.

Luma: While it sometimes falls short on detail, it excels in creating motion, especially in lively scenes.

Pixverse: Offers good quality but often has a slow-motion feel that can detract from its appeal.

📊 Sora Text to Video Prompt Comparisons

Let’s get a bit more granular with Sora's text-to-video prompts. Here’s a breakdown of how Sora's prompts performed across the different platforms:

Golden Retrievers Playing in the Snow: Runway captured the playful essence beautifully, while Kling also performed admirably, though with slight morphing.

Dynamic City Time-Lapse: Runway was the standout here, managing to transition from day to night seamlessly.

Chinese New Year Celebration: Runway again took the lead with coherent faces and a decent dragon, while Kling struggled with the dragon's appearance.

📝 Generating Text

Generating text for video prompts isn’t just about typing in a few words. It's about crafting a narrative that the AI can visualize. Here’s how to maximize your text input:

Be Descriptive: The more vivid your description, the better the AI can interpret it. Instead of saying “a dog,” try “a golden retriever joyfully fetching a ball in a sunny park.”

Use Action Words: Verbs are your friends! Words like “soar,” “dive,” and “glide” help the AI visualize movement.

Specify the Environment: Mentioning details about the setting enhances the scene. Think about the time of day, weather, and surrounding elements.

🖼️ How to Image to Video

Switching gears to image-to-video generation! This method allows you to start with an image, giving you more control over the output. Here’s how to do it:

Upload Your Image: Choose a high-quality image that represents the scene you want to create.

Input a Prompt: Describe what you want the video to depict based on the image. For example, “A cat chopping fish on a cutting board.”

Adjust Settings: Set your desired video length and any additional parameters.

Generate: Click the generate button and watch as your static image transforms into a dynamic video!

🎬 Image to Video Comparisons

Finally, let’s look at how the platforms perform with image-to-video prompts. Here’s what I discovered:

Runway: Maintains coherence and showcases excellent motion, though it sometimes lacks in technique.

Kling: Stands out with precise cuts and movement, making it a top choice for image-based prompts.

Luma: Produces visually appealing results but often falls into a slow-motion trap.

Pixverse: While it offers decent visuals, it tends to lack fidelity compared to its competitors.

🛹 Skateboarding

Alright, let’s talk about skateboarding! A sport that’s as much about style as it is about technique. I decided to test how well these AI tools could handle the dynamic movements of skateboarding. I used a prompt where I’m supposed to catch a skateboard and land in a parking lot. Sounds simple, right? Wrong!

Runway went completely bonkers with this one. Picture this: a camera guy suddenly appears with a bike, then we morph together into some surreal entity. It’s like a bizarre art piece gone wrong!

Cling, on the other hand, at least seemed to grasp the concept, which is a win in my book. It tried to deliver a coherent skateboard trick, but let’s just say it wasn’t exactly Tony Hawk material.

Luma sort of understood the prompt too, but it was like watching a toddler try to skate: cute, but not quite there. And Pixverse? Well, it started to grasp the idea but then spiraled into pure chaos. It’s evident that figuring out the physics of skateboarding is a tall order for these AIs.

👾 The Final Boss

After testing these platforms, I can confidently say we’re reaching the final boss level of AI video generation! The advancements are staggering, but there are still hurdles to clear. Each platform has its strengths, but none have truly conquered the complexities of action sports like skateboarding. It’s as if they’re stuck in a glitchy video game, trying to figure out how to animate a simple kickflip.

So, what’s the takeaway? While we’re on the verge of something groundbreaking, we still have a ways to go before these tools can perfectly replicate the fluidity and physics of real-life skateboarding. But hey, that’s what makes this journey exciting, right?

🧑⚖️ The Verdict

So, who comes out on top? After extensive testing, it’s clear that Kling reigns supreme in overall quality. It consistently produced the best results across various prompts, including those pesky action shots. Runway follows closely behind, particularly for its speed and dynamic visuals.

Luma and Pixverse? They have their moments, but they often lag behind in quality, especially when it comes to complex scenes. If you’re looking for the best quality for your buck, Kling is the way to go.

💰 Price and Speed Comparison

Now, let’s break down the price and speed. Kling not only topped the quality charts but also offered more bang for your buck. It’s almost half the price per generation compared to Runway, making it a no-brainer for budget-conscious creators.

Runway, while pricier, boasts faster generation times across the board. If you need quick turns on your projects, it’s hard to beat Runway’s efficiency. Just keep in mind its occasional hiccups with blocked prompts.

Luma and Pixverse are cheaper alternatives, but they come with compromises in quality. If you don’t need top-tier results for something casual, they’re worth considering. But for serious projects, the investment in Kling or Runway pays off.

🔍 Features Breakdown

Let’s dive into the features! All four platforms offer text-to-video and image-to-video capabilities, but the execution varies. Kling and Luma allow for end frames, which is a neat feature for storytelling. Runway and Kling provide options for 5 or 10-second videos, while Luma and Pixverse stick to 5 seconds.

Runway excels at following camera directions through prompting, giving you more creative control. Plus, you can remove watermarks on all platforms except Pixverse. And let’s not forget about the unlimited plan that Runway offers for $95 a month—perfect for those hefty projects!

📋 Final Summary

In conclusion, the race for AI video generation supremacy is heating up. Kling stands out for its quality and affordability, while Runway is your go-to for speed. Luma and Pixverse are viable options for casual creators who don’t mind sacrificing some quality.

As we push forward, expect these platforms to evolve even further. The future is bright for AI in video generation, and we’re just getting started!

In the rapidly evolving world of AI video generation, four major players have recently upped their game: Runway Gen 3, Kling, Luma Dream Machine, and Pixverse. Join us as we dive deep into their features, performance, and value, helping you determine which tool is the best fit for your creative needs.

🚀 Intro

Welcome to the future of video creation! The landscape of AI video generators is evolving at lightning speed, and it’s about time you get in on the action. Imagine crafting breathtaking videos with just a few clicks—sounds like magic, right? Well, it’s not magic; it’s technology!

Whether you're a seasoned creator or just stepping into the world of video production, the advancements in AI tools like Runway, Kling, Luma Dream Machine, and Pixverse are game-changers. In this blog, we'll explore how these platforms work, how to generate stunning videos, and even compare text and image inputs to optimize your creative process.

So, buckle up! Let’s dive into the nitty-gritty of how you can leverage these powerful tools to bring your wildest ideas to life.

⚙️ How it will work

Getting started with AI video generation is as easy as pie. Each platform offers a user-friendly interface that guides you through the process. Here’s the lowdown:

Choose Your Tool: Pick one of the platforms—Runway, Kling, Luma, or Pixverse. Each has unique strengths, so choose wisely!

Input Your Prompt: Type in a descriptive prompt for what you want to create. Be as specific as possible to get the best results.

Select Settings: Depending on the platform, you might set parameters like video length, resolution, and creativity level.

Generate: Hit that generate button and watch the magic unfold. Sometimes it takes a few tries to nail the perfect video, so don’t hesitate to reroll!

🌀 How to generate

Now, let’s break down the generation process. Here’s how you can create captivating videos step-by-step:

Open the Platform: Launch your chosen AI video generator and familiarize yourself with its layout.

Input Your Prompt: Enter a detailed description of the scene you wish to create. For example, “A serene lake at sunset with mountains in the background.”

Select Video Length: Choose between 5 or 10 seconds, depending on how much detail you want to capture.

Adjust Settings: If applicable, tweak settings like aspect ratio and camera movement options to suit your vision.

Hit Generate: Click the button and let the AI work its magic. If the first result isn't perfect, don’t worry—try again!

🔍 Text to Video Comparisons

Text-to-video generation is where the real fun begins. With just a few words, you can create visuals that tell a story. But how do these platforms stack up against one another? Let’s compare!

When using text prompts, I ran several tests across all four platforms. The results varied in quality, coherence, and adherence to the prompt. Here’s a snapshot of how they performed:

Runway: Known for its fluid visuals and movement, it nails prompts that require dynamic scenes.

Kling: Often delivers near-perfect results, with excellent adherence to prompts and stunning visuals.

Luma: While it sometimes falls short on detail, it excels in creating motion, especially in lively scenes.

Pixverse: Offers good quality but often has a slow-motion feel that can detract from its appeal.

📊 Sora Text to Video Prompt Comparisons

Let’s get a bit more granular. I ran several of the prompts from OpenAI's Sora showcase through each platform. Here’s how they performed:

Golden Retrievers Playing in the Snow: Runway captured the playful essence beautifully, while Kling also performed admirably, though with slight morphing.

Dynamic City Time-Lapse: Runway was the standout here, managing to transition from day to night seamlessly.

Chinese New Year Celebration: Runway again took the lead with coherent faces and a decent dragon, while Kling struggled with the dragon's appearance.

📝 Writing Better Text Prompts

Writing a good text prompt isn’t just about typing in a few words. It's about crafting a narrative that the AI can visualize. Here’s how to get the most out of your text input:

Be Descriptive: The more vivid your description, the better the AI can interpret it. Instead of saying “a dog,” try “a golden retriever joyfully fetching a ball in a sunny park.”

Use Action Words: Verbs are your friends! Words like “soar,” “dive,” and “glide” help the AI visualize movement.

Specify the Environment: Mentioning details about the setting enhances the scene. Think about the time of day, weather, and surrounding elements.
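To make the three tips above concrete, here is a minimal sketch of a prompt "template" that forces you to fill in a subject, an action verb, and a setting before adding extra details like time of day or weather. The `PromptParts` class and `build_prompt` function are hypothetical helpers written for this example; none of the four platforms exposes them, and you would simply paste the resulting string into the prompt box.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    # Hypothetical structure, not part of any platform's API.
    subject: str   # who or what is on screen ("a golden retriever")
    action: str    # a vivid verb phrase ("joyfully fetching a ball")
    setting: str   # where it happens ("in a sunny park")
    details: list[str] = field(default_factory=list)  # time of day, weather, etc.


def build_prompt(parts: PromptParts) -> str:
    """Join subject, action, and setting into one sentence, then append details."""
    core = " ".join(p.strip() for p in (parts.subject, parts.action, parts.setting) if p.strip())
    if not core:
        raise ValueError("a prompt needs at least a subject, action, or setting")
    if parts.details:
        core += ", " + ", ".join(parts.details)
    # Capitalize the first letter and end with a period.
    return core[0].upper() + core[1:] + "."


prompt = build_prompt(PromptParts(
    subject="a golden retriever",
    action="joyfully fetching a ball",
    setting="in a sunny park",
    details=["late afternoon", "soft golden light", "trees swaying in the breeze"],
))
print(prompt)
```

This prints "A golden retriever joyfully fetching a ball in a sunny park, late afternoon, soft golden light, trees swaying in the breeze." The point of the structure is simply that a missing action or setting becomes obvious before you spend a generation on a vague prompt.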

🖼️ How to Do Image to Video

Switching gears to image-to-video generation! This method allows you to start with an image, giving you more control over the output. Here’s how to do it:

Upload Your Image: Choose a high-quality image that represents the scene you want to create.

Input a Prompt: Describe what you want the video to depict based on the image. For example, “A cat chopping fish on a cutting board.”

Adjust Settings: Set your desired video length and any additional parameters.

Generate: Click the generate button and watch as your static image transforms into a dynamic video!

🎬 Image to Video Comparisons

Finally, let’s look at how the platforms perform with image-to-video prompts. Here’s what I discovered:

Runway: Maintains coherence and shows excellent motion, though the finer details of the action, like the knife technique in the cat prompt, are sometimes off.

Kling: Stands out with precise cuts and movement, making it a top choice for image-based prompts.

Luma: Produces visually appealing results but often falls into a slow-motion trap.

Pixverse: While it offers decent visuals, it tends to lack fidelity compared to its competitors.

🛹 Skateboarding

Alright, let’s talk about skateboarding! A sport that’s as much about style as it is about technique. I decided to test how well these AI tools could handle the dynamic movements of skateboarding. I used a prompt where I’m supposed to catch a skateboard and land in a parking lot. Sounds simple, right? Wrong!

Runway went completely bonkers with this one. Picture this: a camera guy suddenly appears with a bike, then we morph together into some surreal entity. It’s like a bizarre art piece gone wrong!

Kling, on the other hand, at least seemed to grasp the concept, which is a win in my book. It tried to deliver a coherent skateboard trick, but let’s just say it wasn’t exactly Tony Hawk material.

Luma sort of understood the prompt too, but it was like watching a toddler try to skate: cute, but not quite there. And Pixverse? Well, it started to grasp the idea but then spiraled into pure chaos. It’s evident that figuring out the physics of skateboarding is a tall order for these AIs.

👾 The Final Boss

After testing these platforms, I can confidently say we’re reaching the final boss level of AI video generation! The advancements are staggering, but there are still hurdles to clear. Each platform has its strengths, but none have truly conquered the complexities of action sports like skateboarding. It’s as if they’re stuck in a glitchy video game, trying to figure out how to animate a simple kickflip.

So, what’s the takeaway? While we’re on the verge of something groundbreaking, we still have a ways to go before these tools can perfectly replicate the fluidity and physics of real-life skateboarding. But hey, that’s what makes this journey exciting, right?

🧑‍⚖️ The Verdict

So, who comes out on top? After extensive testing, it’s clear that Kling reigns supreme in overall quality. It consistently produced the best results across a wide range of prompts, and even on the tricky action shots it was the most coherent of the four. Runway follows closely behind, especially for its speed and dynamic visuals.

Luma and Pixverse? They have their moments, but they often lag behind in quality, especially when it comes to complex scenes. If you’re looking for the best quality for your money, Kling is the way to go.

💰 Price and Speed Comparison

Now, let’s break down price and speed. Kling not only topped the quality charts but also offered more bang for your buck: it costs roughly half as much per generation as Runway, making it a no-brainer for budget-conscious creators.

Runway, while pricier, boasts faster generation times across the board. If you need quick turnarounds on your projects, it’s hard to beat Runway’s efficiency. Just keep in mind that it occasionally blocks prompts outright.

Luma and Pixverse are cheaper alternatives, but they come with compromises in quality. If you're making something casual and don’t need top-tier results, they’re worth considering. But for serious projects, the investment in Kling or Runway pays off.

🔍 Features Breakdown

Let’s dive into the features! All four platforms offer text-to-video and image-to-video capabilities, but the execution varies. Kling and Luma let you set an end frame as well as a start frame, which is a neat feature for storytelling. Runway and Kling offer 5- or 10-second videos, while Luma and Pixverse stick to 5 seconds.

Runway excels at following camera directions through prompting, giving you more creative control. Plus, you can remove watermarks on all platforms except Pixverse. And let’s not forget about the unlimited plan that Runway offers for $95 a month—perfect for those hefty projects!
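If you are choosing a tool by feature rather than by quality, it can help to write the comparison down as data. The sketch below encodes only the features described in this post (clip lengths, end frames, watermark removal) as a snapshot from the time of testing; the `Features` class, `PLATFORMS` table, and `matching_platforms` function are hypothetical names for this example, not data pulled from any official API, and the platforms update their feature sets often.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Features:
    clip_lengths: tuple[int, ...]  # clip durations offered, in seconds
    end_frame: bool                # can you set an end frame?
    watermark_removal: bool        # can the watermark be removed?


# Snapshot of the features as described in this comparison (assumption: may be outdated).
PLATFORMS = {
    "Runway": Features(clip_lengths=(5, 10), end_frame=False, watermark_removal=True),
    "Kling": Features(clip_lengths=(5, 10), end_frame=True, watermark_removal=True),
    "Luma": Features(clip_lengths=(5,), end_frame=True, watermark_removal=True),
    "Pixverse": Features(clip_lengths=(5,), end_frame=False, watermark_removal=False),
}


def matching_platforms(seconds: int, need_end_frame: bool = False,
                       need_no_watermark: bool = False) -> list[str]:
    """Return the platforms that satisfy every requirement, in table order."""
    return [
        name for name, f in PLATFORMS.items()
        if seconds in f.clip_lengths
        and (f.end_frame or not need_end_frame)
        and (f.watermark_removal or not need_no_watermark)
    ]


print(matching_platforms(10))                       # 10-second clips
print(matching_platforms(5, need_end_frame=True))   # storytelling with an end frame
print(matching_platforms(10, need_end_frame=True))  # both at once
```

Run as written, the three calls print `['Runway', 'Kling']`, `['Kling', 'Luma']`, and `['Kling']`. Keeping the features in one table means updating a single entry when a platform adds, say, longer clips, rather than rereading the whole comparison.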

📋 Final Summary

In conclusion, the race for AI video generation supremacy is heating up. Kling stands out for its quality and affordability, while Runway is your go-to for speed. Luma and Pixverse are viable options for casual creators who don’t mind sacrificing some quality.

As we push forward, expect these platforms to evolve even further. The future is bright for AI in video generation, and we’re just getting started!