Revolutionizing AI Video Generation: The Latest Breakthroughs

Danny Roman

December 5, 2024

In the fast-evolving landscape of AI video generation, new technologies and models are emerging at an unprecedented rate. This blog explores the latest advancements, including Spatiotemporal Skip Guidance, Tencent's groundbreaking open-source model, and much more, highlighting how these innovations are set to enhance video quality and realism.

🚀 Exciting Video Generation Updates

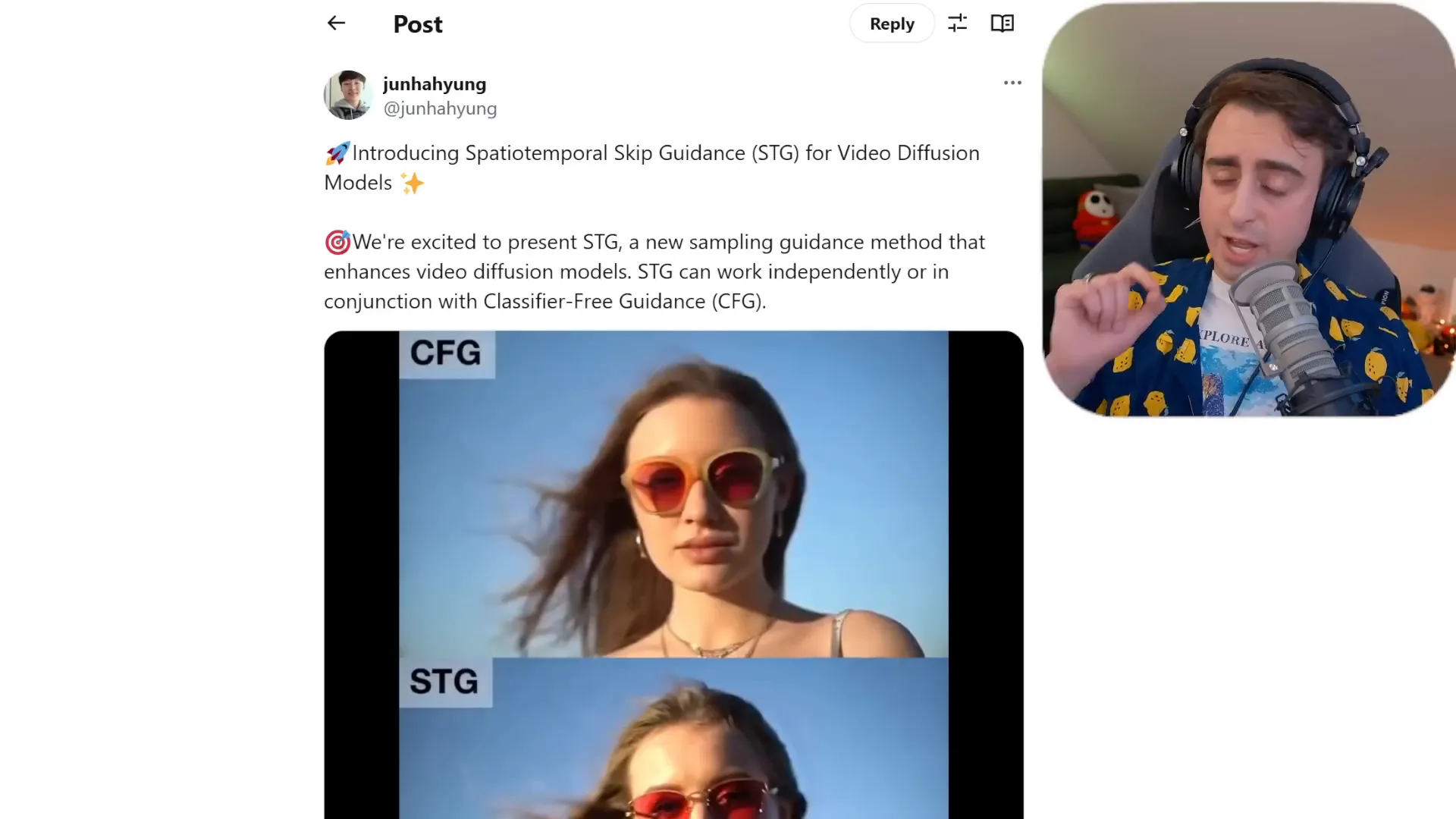

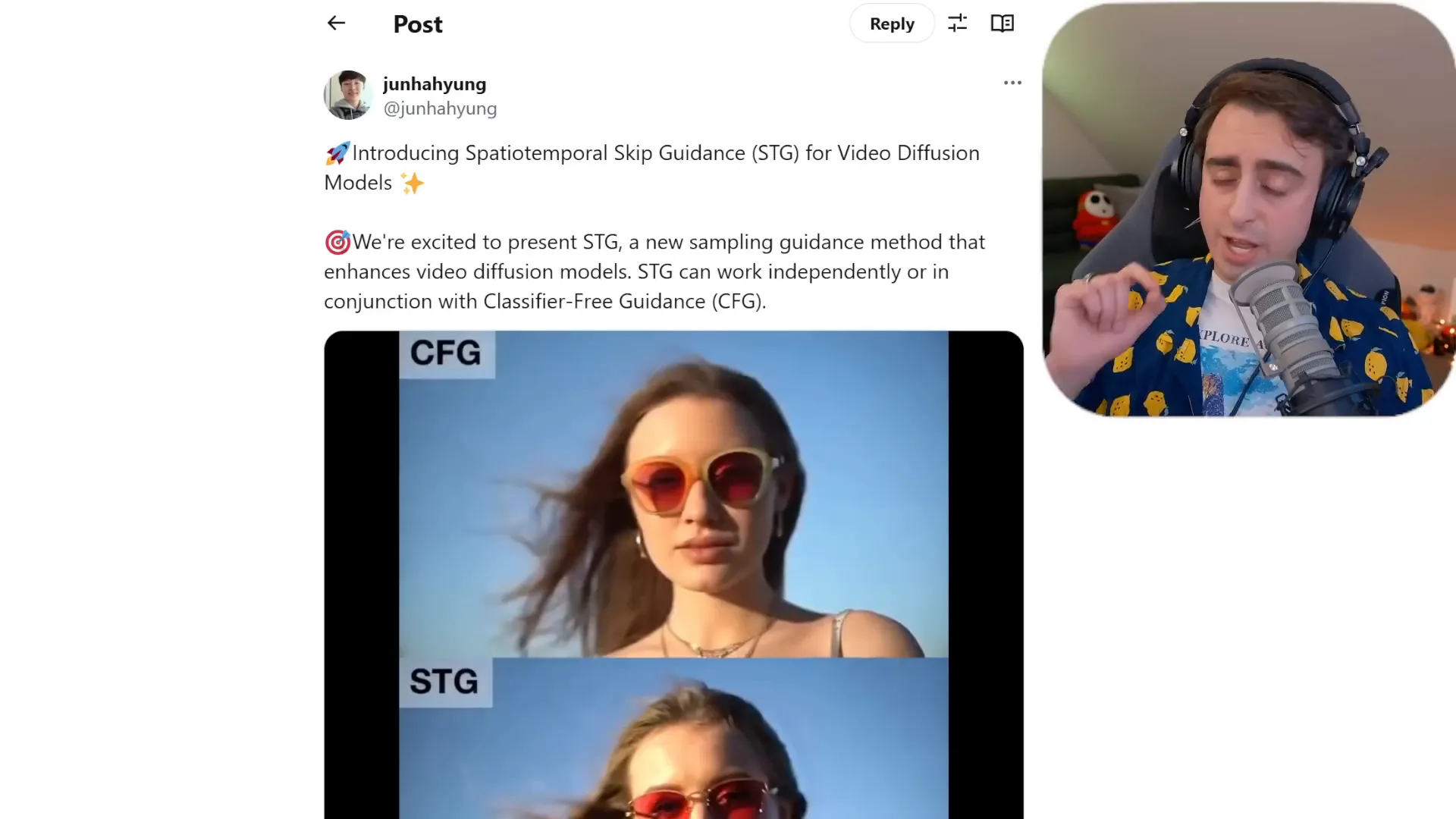

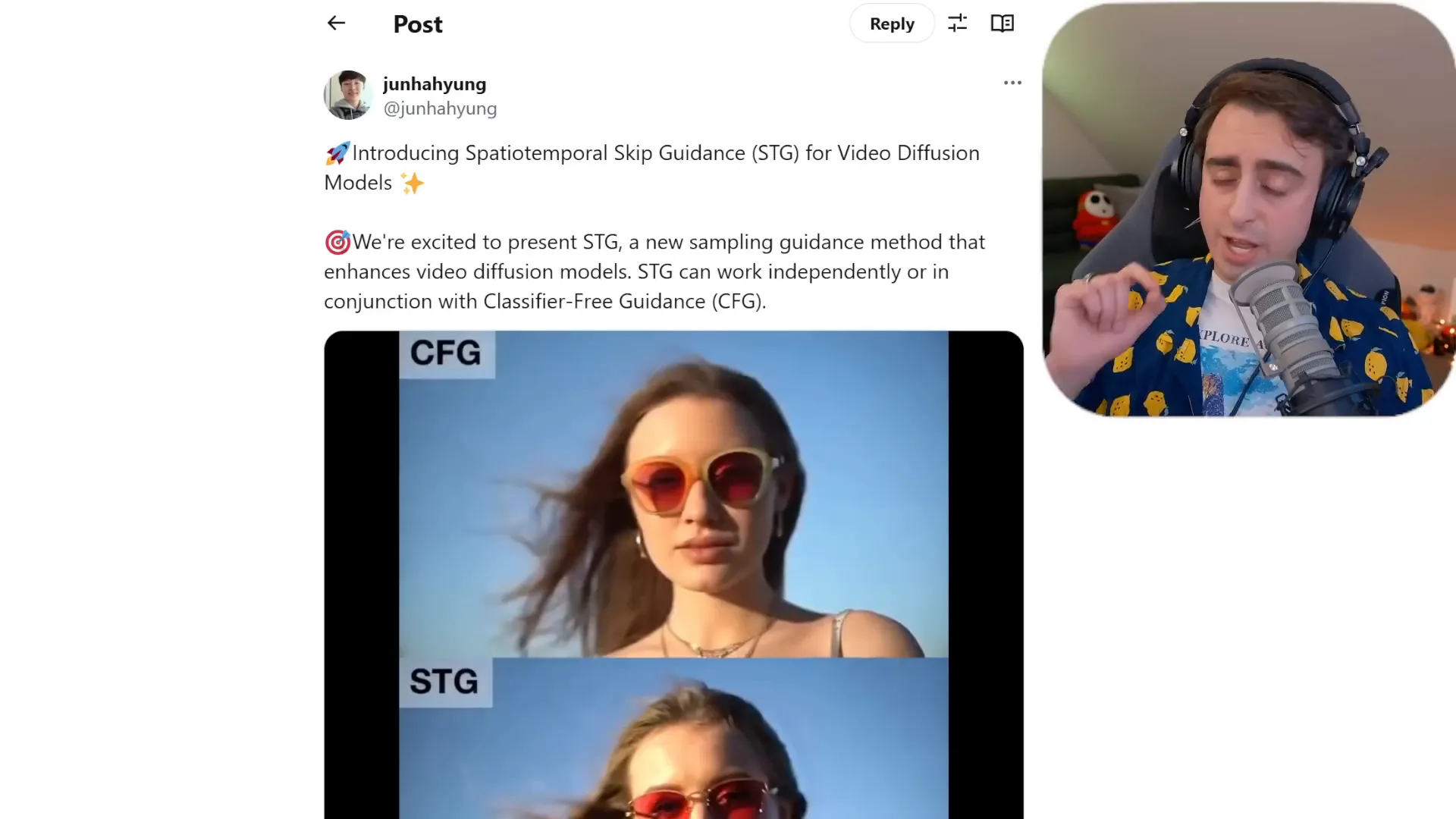

Hold onto your seats, because the world of AI video generation just got a turbo boost! The latest breakthroughs are not just minor tweaks; they’re game changers. With the introduction of Spatiotemporal Skip Guidance (STG), we’re stepping into a new era where video generation models are more precise, realistic, and downright impressive. It’s not just about creating videos anymore; it’s about creating experiences that captivate and engage like never before.

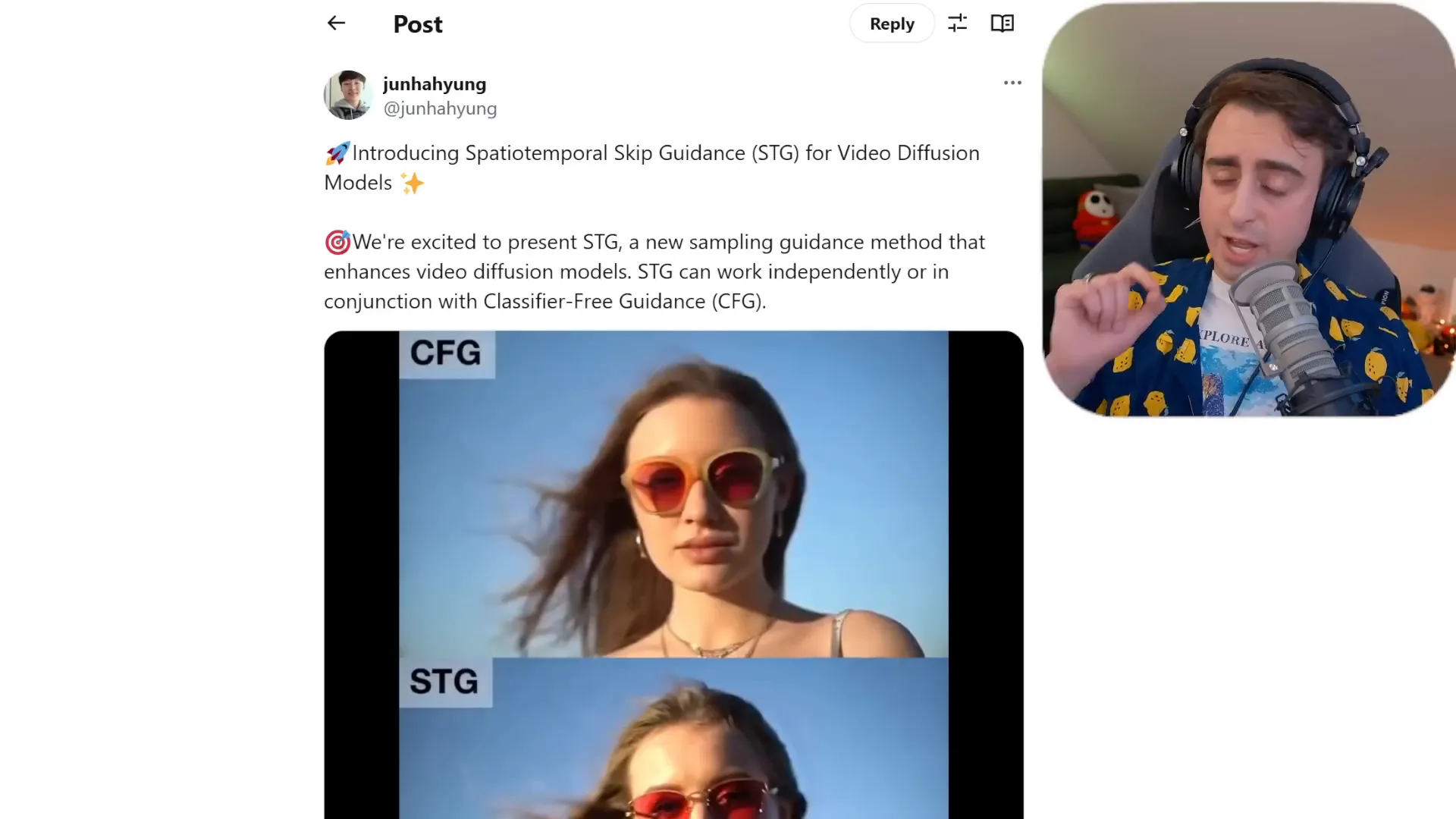

What’s the Buzz About STG?

So, what exactly is Spatiotemporal Skip Guidance? Think of it as your video model’s new best friend. This innovative feature acts as a guiding hand, enhancing the output of video generation models. It can work its magic independently or team up with the classic classifier-free guidance, which is a staple in most image and video generation tools.
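To make the "independently or together with classifier-free guidance" idea concrete, here is a minimal sketch of how the two guidance terms could be combined at a single denoising step. It assumes the common formulation where guidance pushes the prediction away from a weaker one; the function name `guided_prediction`, the parameter names, and the plain-list representation are all illustrative assumptions, not the STG authors' code.

```python
from typing import List, Sequence


def guided_prediction(
    cond: Sequence[float],
    uncond: Sequence[float],
    skipped: Sequence[float],
    cfg_scale: float = 1.0,
    stg_scale: float = 0.0,
) -> List[float]:
    """Combine three noise predictions into one guided prediction.

    cond:    prediction from the full model with the text prompt
    uncond:  prediction from the full model without the prompt (used by CFG)
    skipped: prediction from the same model with some layers skipped,
             i.e. the deliberately weaker pass (assumed form of skip guidance)
    """
    if not (len(cond) == len(uncond) == len(skipped)):
        raise ValueError("all predictions must have the same length")
    return [
        # CFG term moves away from the unconditional prediction;
        # the skip term moves away from the weakened prediction.
        u + cfg_scale * (c - u) + stg_scale * (c - s)
        for c, u, s in zip(cond, uncond, skipped)
    ]


if __name__ == "__main__":
    cond, uncond, skipped = [1.0, 2.0], [0.0, 0.0], [0.5, 1.0]
    print(guided_prediction(cond, uncond, skipped))              # no extra guidance
    print(guided_prediction(cond, uncond, skipped, 1.0, 1.0))    # skip guidance only
    print(guided_prediction(cond, uncond, skipped, 2.0, 1.0))    # both together
```

With `cfg_scale=1.0` and `stg_scale=0.0` the function simply returns the conditional prediction, so each guidance term can be switched on separately or combined.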

Why Should You Care?

The implications are massive! With STG, the details in video outputs are crisper, more lifelike, and much more engaging. Imagine producing videos where smoke billows realistically, and characters appear with depth and expression that almost feels human. This isn’t just an upgrade; it’s a revolution in how we think about video generation.

🌟 Introducing Spatiotemporal Skip Guidance (STG)

Let’s dive deeper into the marvel that is Spatiotemporal Skip Guidance. At its core, STG enhances the temporal consistency of video frames while ensuring that spatial details are not lost. This means that as you watch a video, you’ll notice that movements are smoother and more coherent across frames. The result? A viewing experience that feels seamless and immersive.

How Does STG Work?

STG doesn’t retrain anything; it changes how the model samples. At each denoising step, the model makes its normal prediction and a second, deliberately weaker one with some of its spatiotemporal layers skipped. The final output is then pushed away from the weaker prediction and toward the stronger one. In practice, this helps elements like hair movement, facial expressions, and background details come out sharper and more stable from frame to frame.

STG’s Impact on Creators

For creators and developers, this means more power at your fingertips. You can produce videos that are not just visually appealing but also rich in detail and emotion. Whether you’re in gaming, filmmaking, or content creation, the ability to harness STG can set your work apart. It’s about pushing boundaries and redefining what’s possible in video generation.

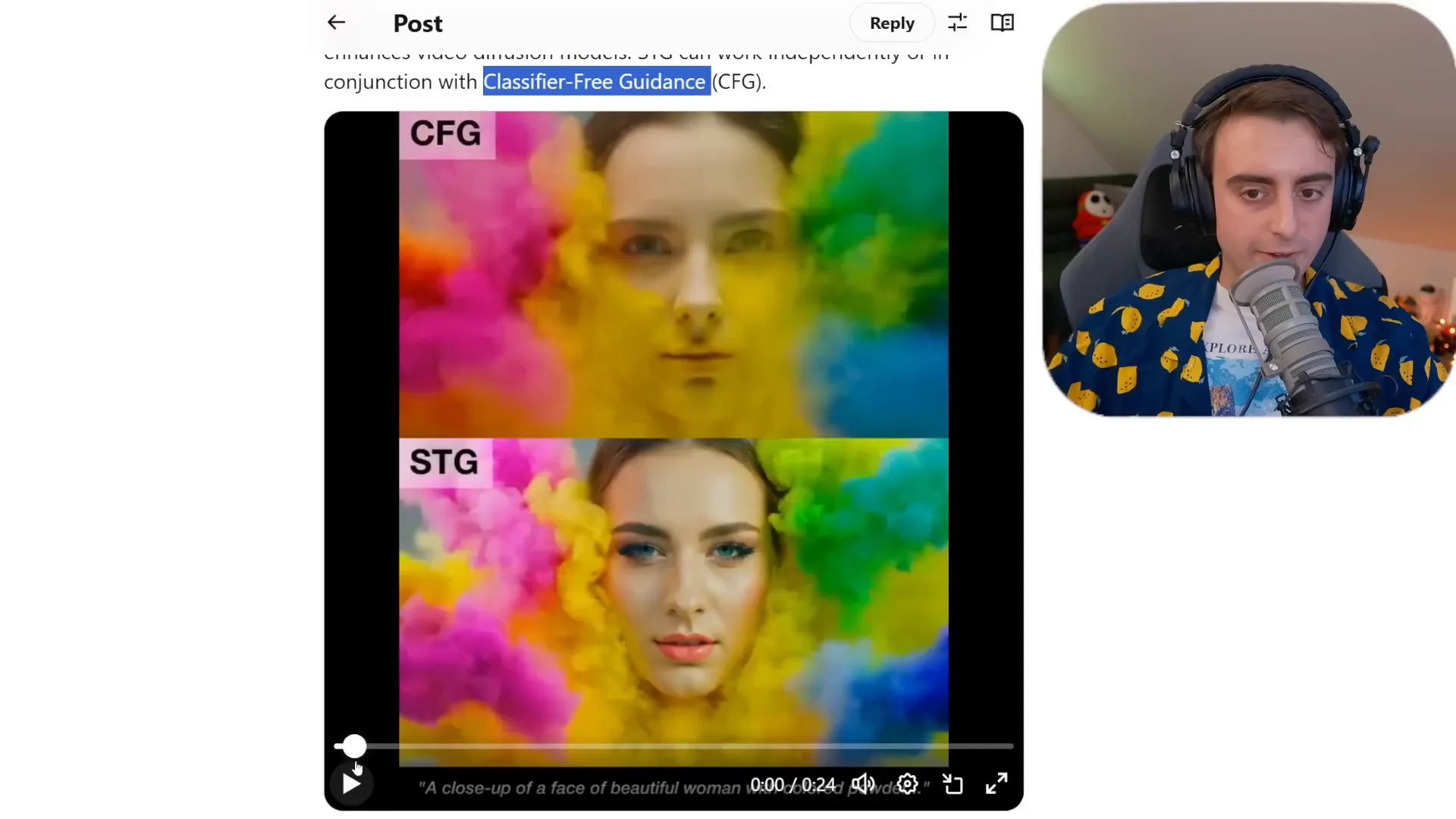

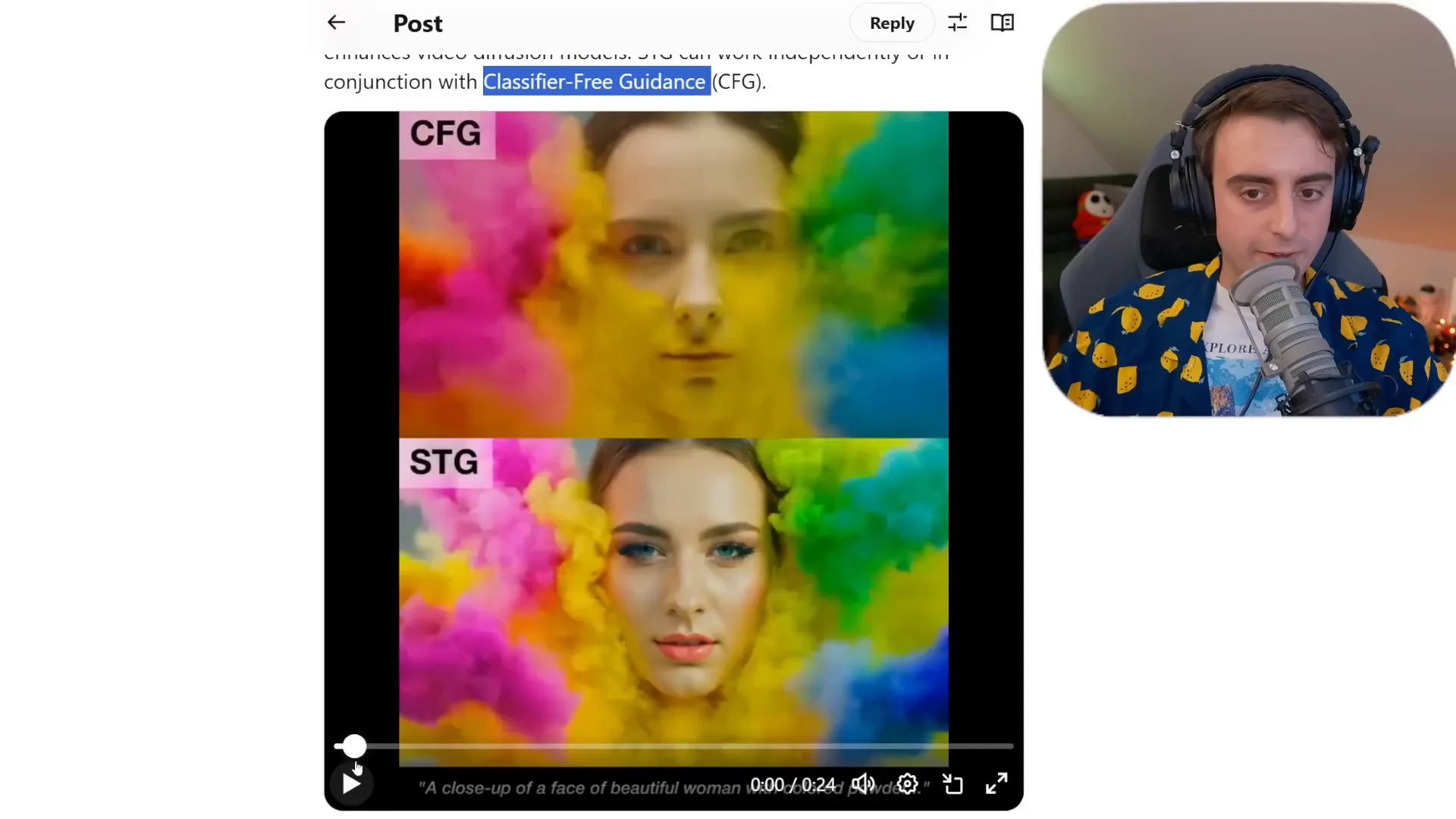

🔍 STG in Action: Detailed Examples

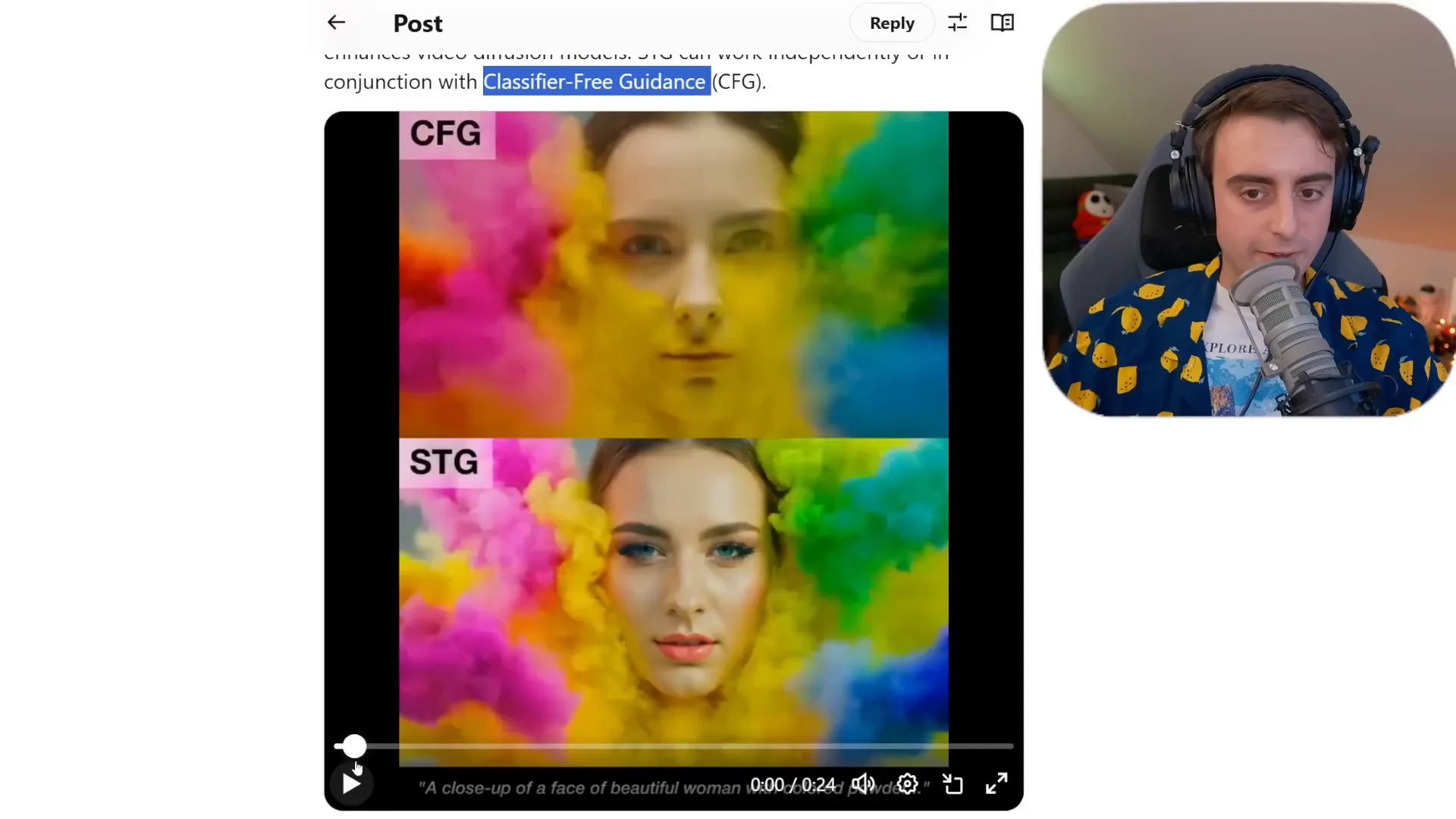

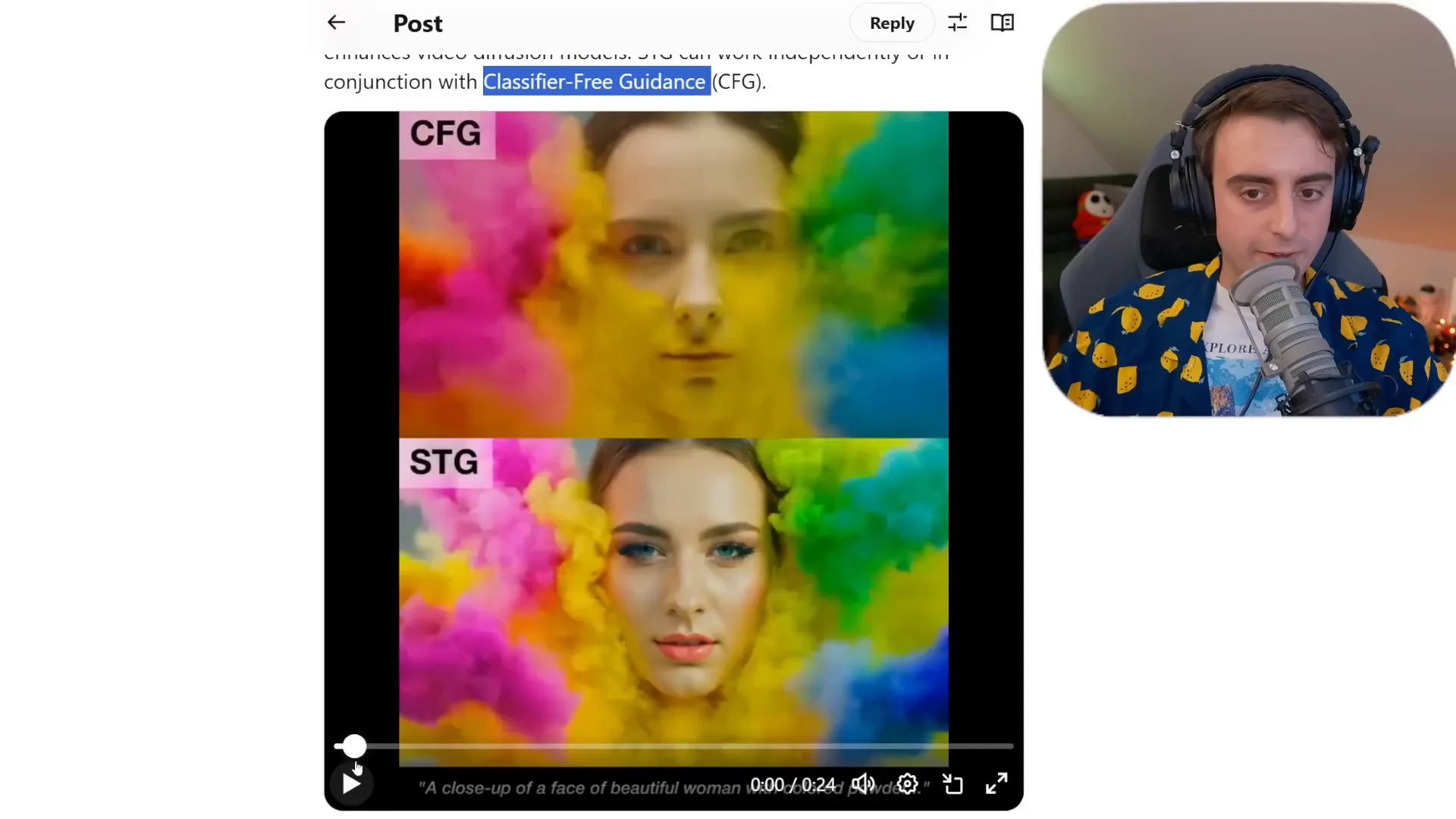

Now, let’s get to the juicy part—real-life examples of STG in action. The results speak for themselves, showcasing the dramatic improvements that STG brings to video generation.

A Closer Look at the Enhancements

Detailed Character Features: Characters rendered with STG look more human-like, capturing nuances in facial expressions and intricate details that were previously absent.

Realistic Environmental Effects: Elements like smoke and lighting are significantly enhanced, adding depth and realism to scenes.

Consistent Motion Across Frames: STG ensures that movements are fluid and consistent, reducing the jarring effects often seen in traditional video generation.

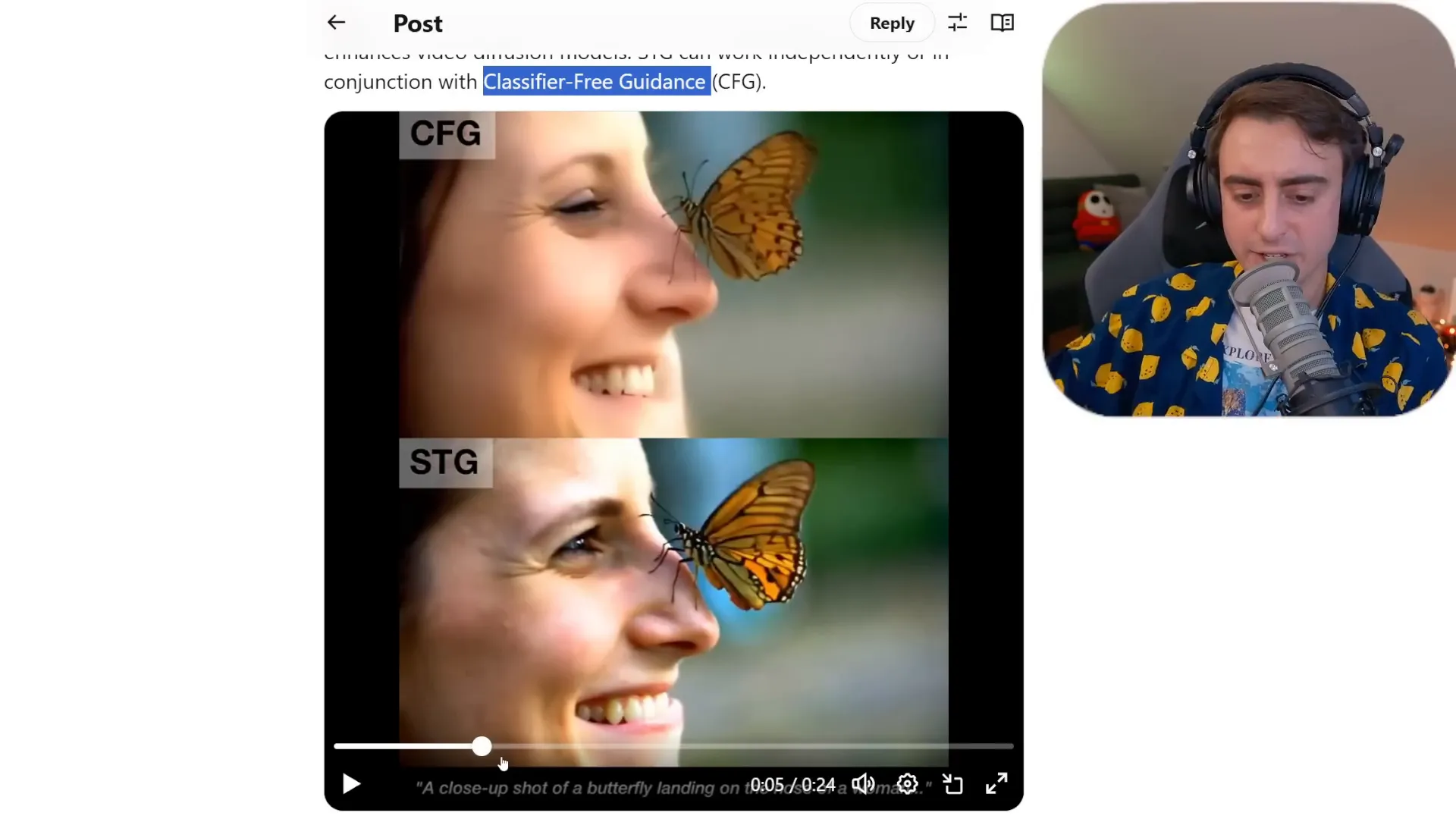

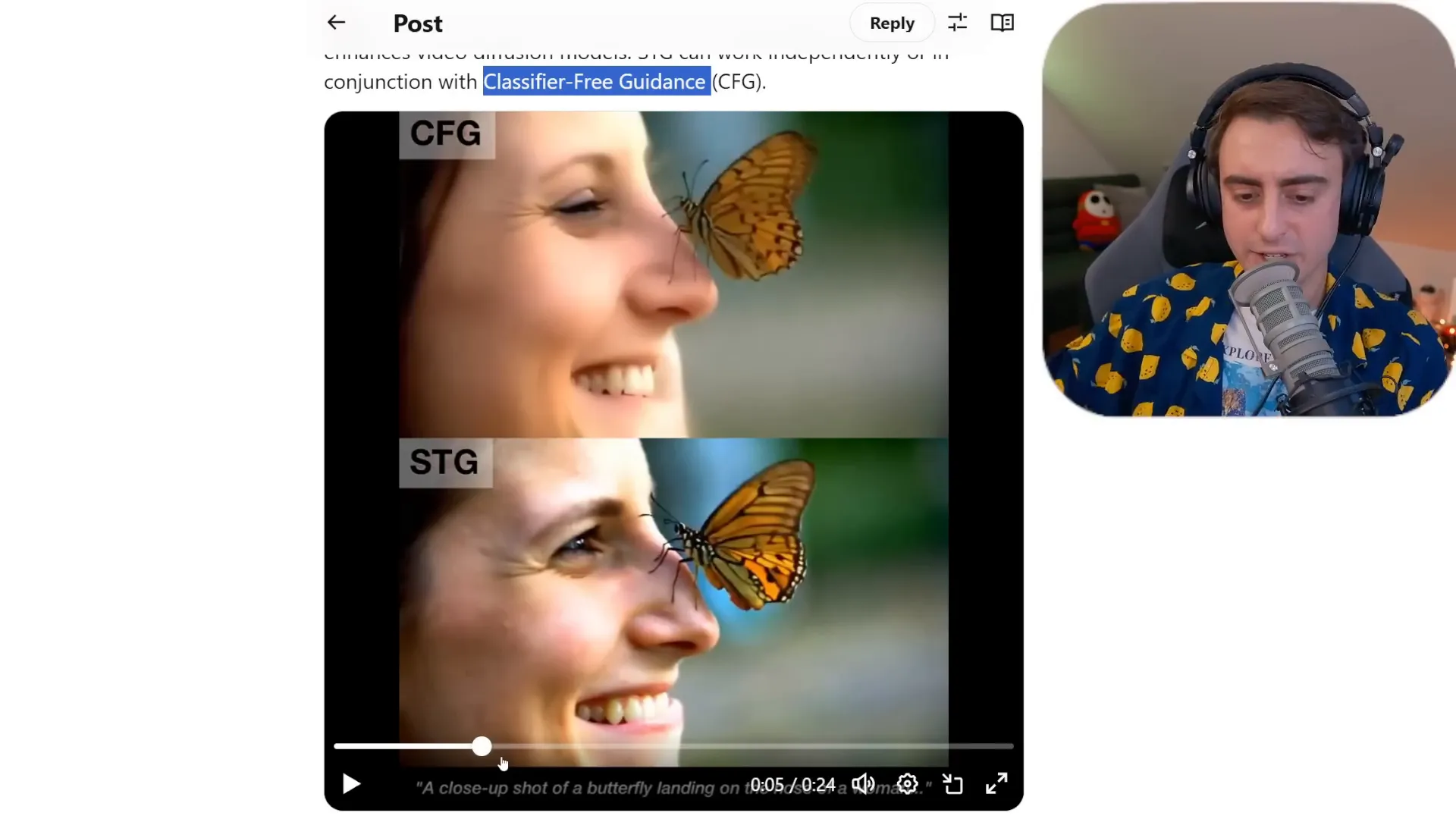

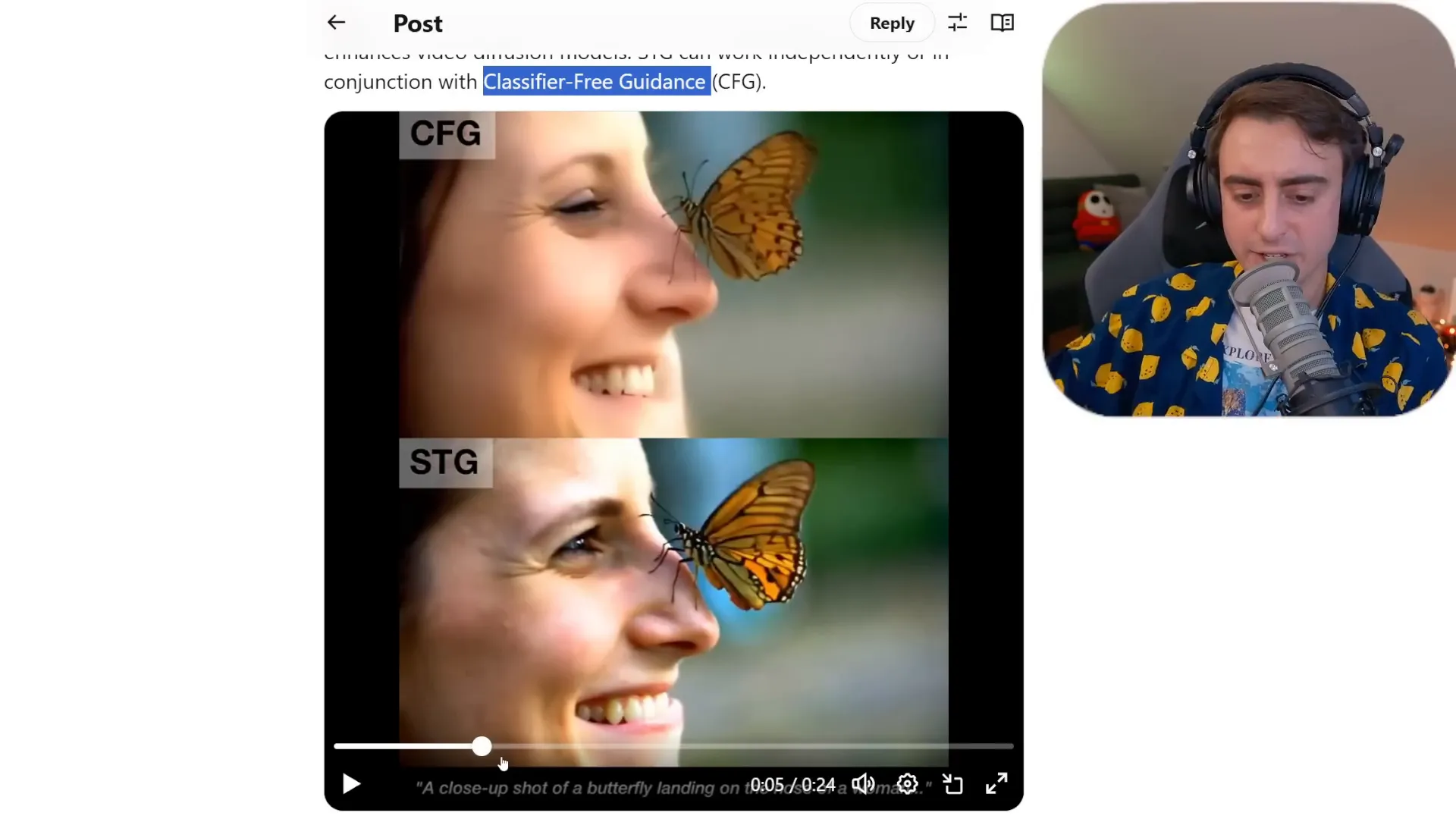

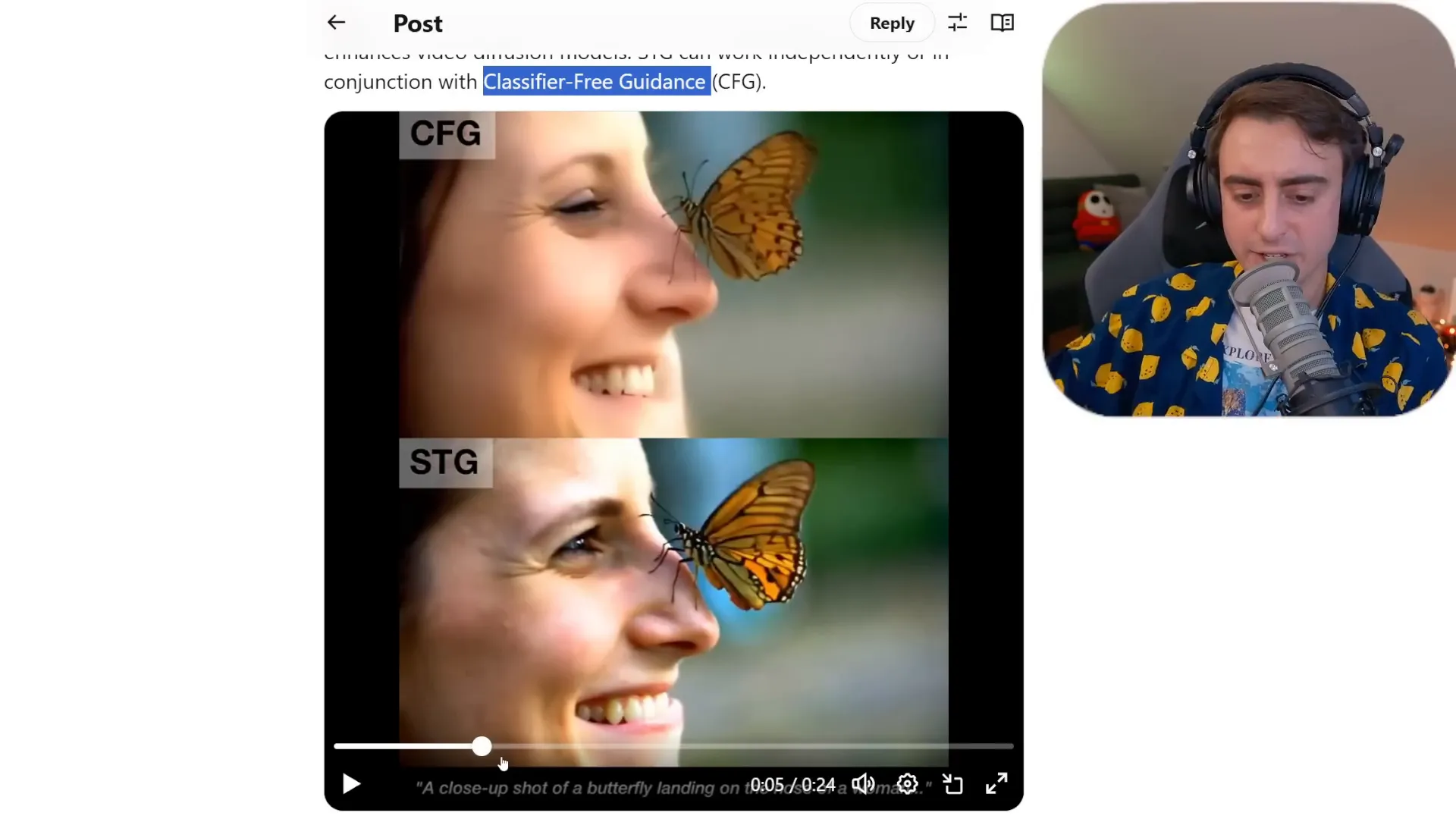

Demonstrating the Power of STG

In one example, a butterfly flutters gracefully across the screen. With traditional models, it might appear blurry or indistinct. However, with STG, every detail (the delicate wings, the antennae, even the colors) is vivid and sharp. It’s a transformation that elevates the entire viewing experience.

💥 Impact of STG on AI Video Generation

The introduction of STG is not just a feature; it’s a paradigm shift in AI video generation. For developers, this means a more robust toolkit to create stunning visuals. For users, it translates into richer, more engaging content. The potential applications are vast, and the implications for industries like entertainment, education, and marketing are profound.

Raising the Bar for Quality

With STG, even lower-end models designed for consumer hardware can produce high-quality results. This democratization of technology means that anyone with a decent setup can create videos that were once the domain of high-end studios. Imagine the creativity that will be unleashed!

The Future of AI Video Generation

As STG catches on, we can expect competition to heat up in the AI video generation space. More developers will integrate this technology into their models, leading to an overall increase in quality. The future looks bright, and if the initial results are any indication, we’re just scratching the surface of what’s possible.

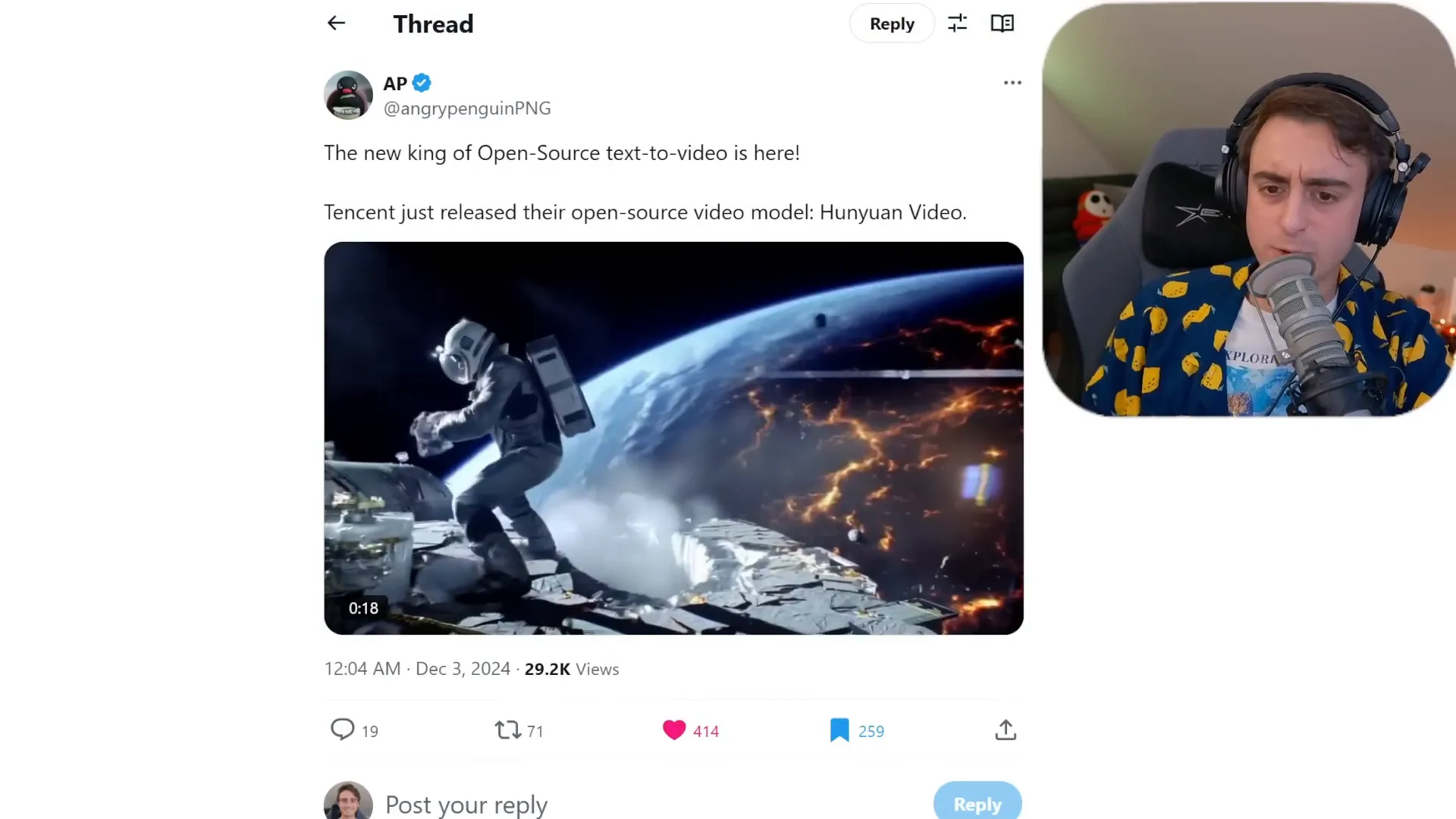

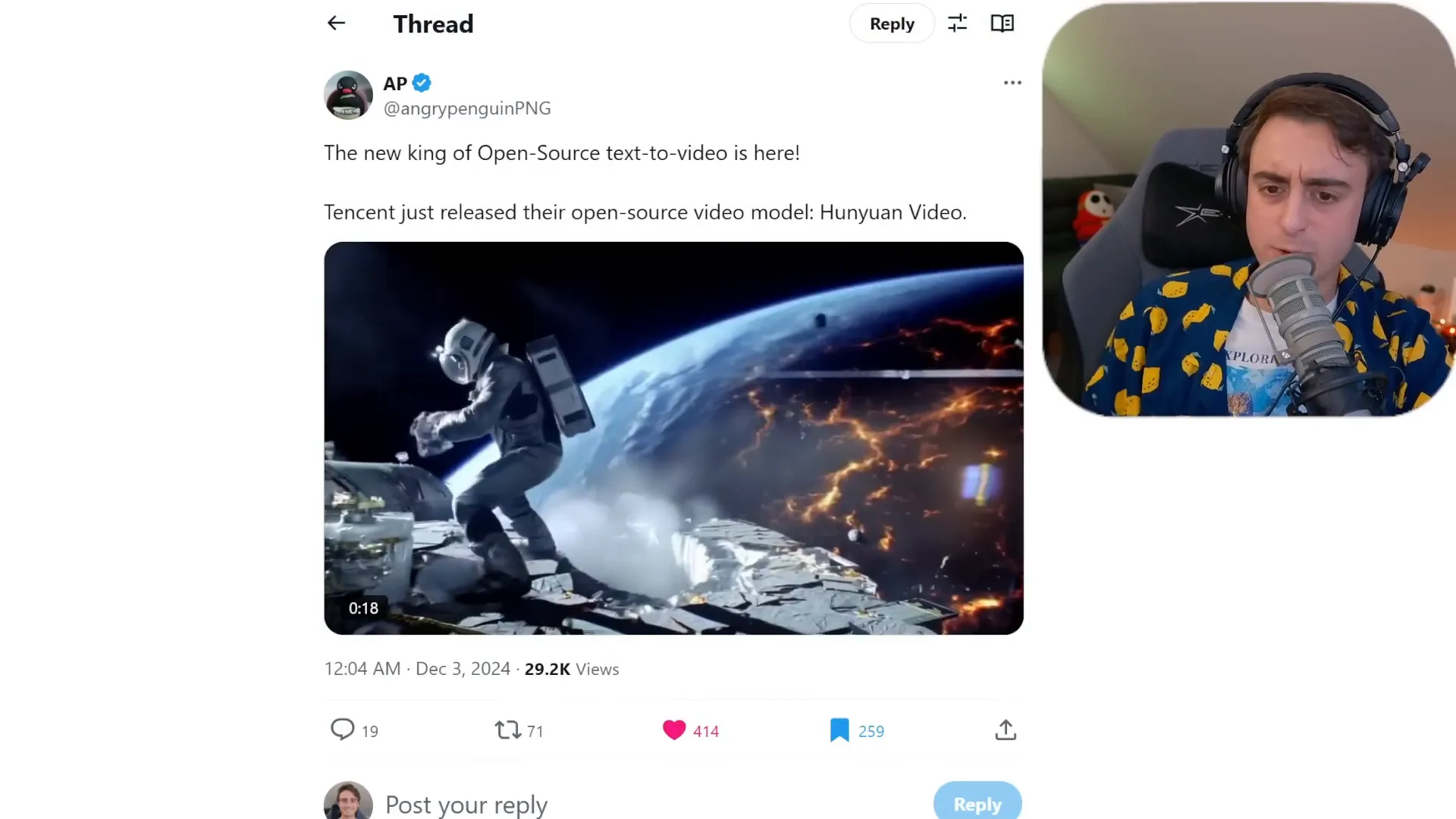

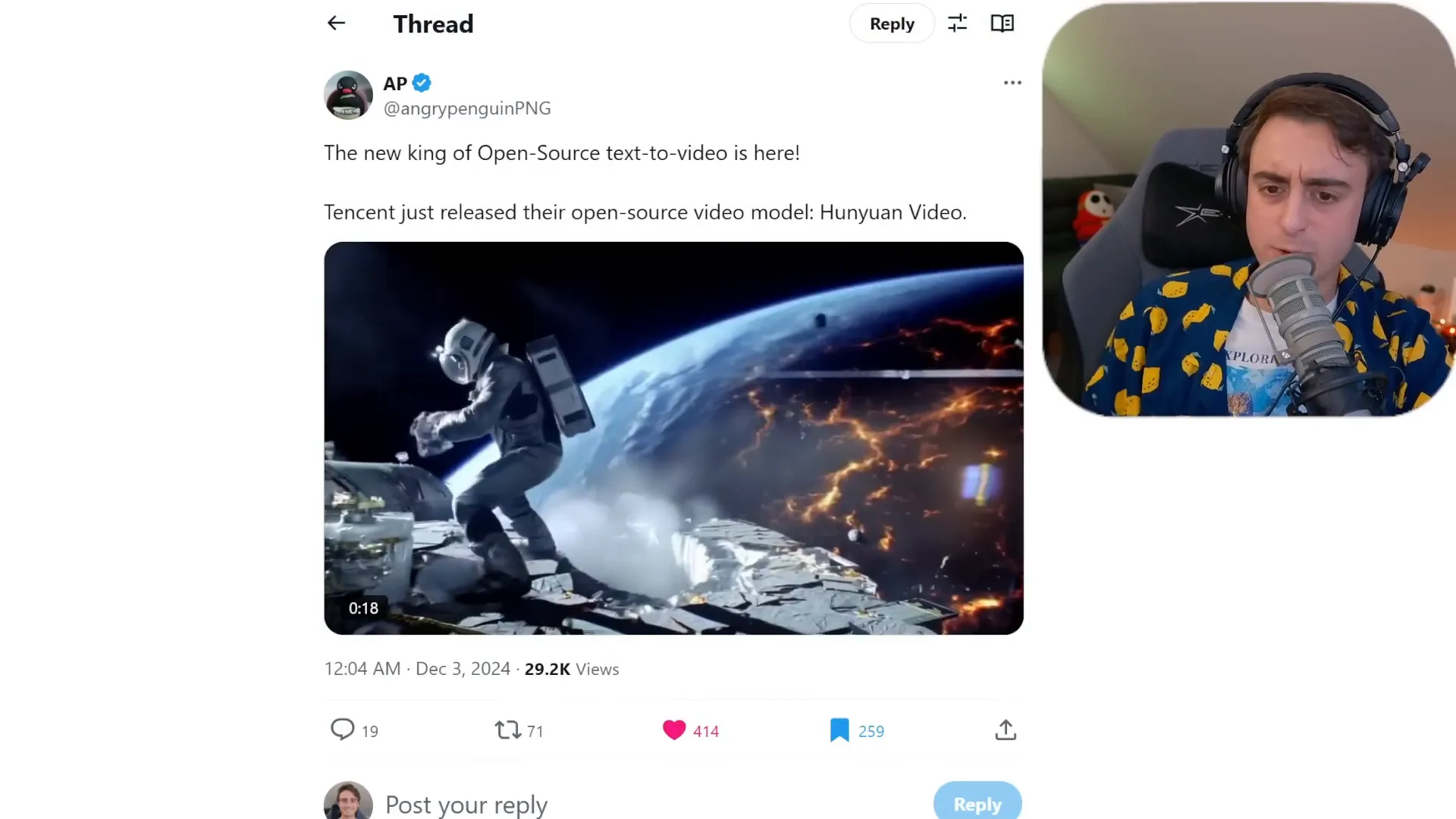

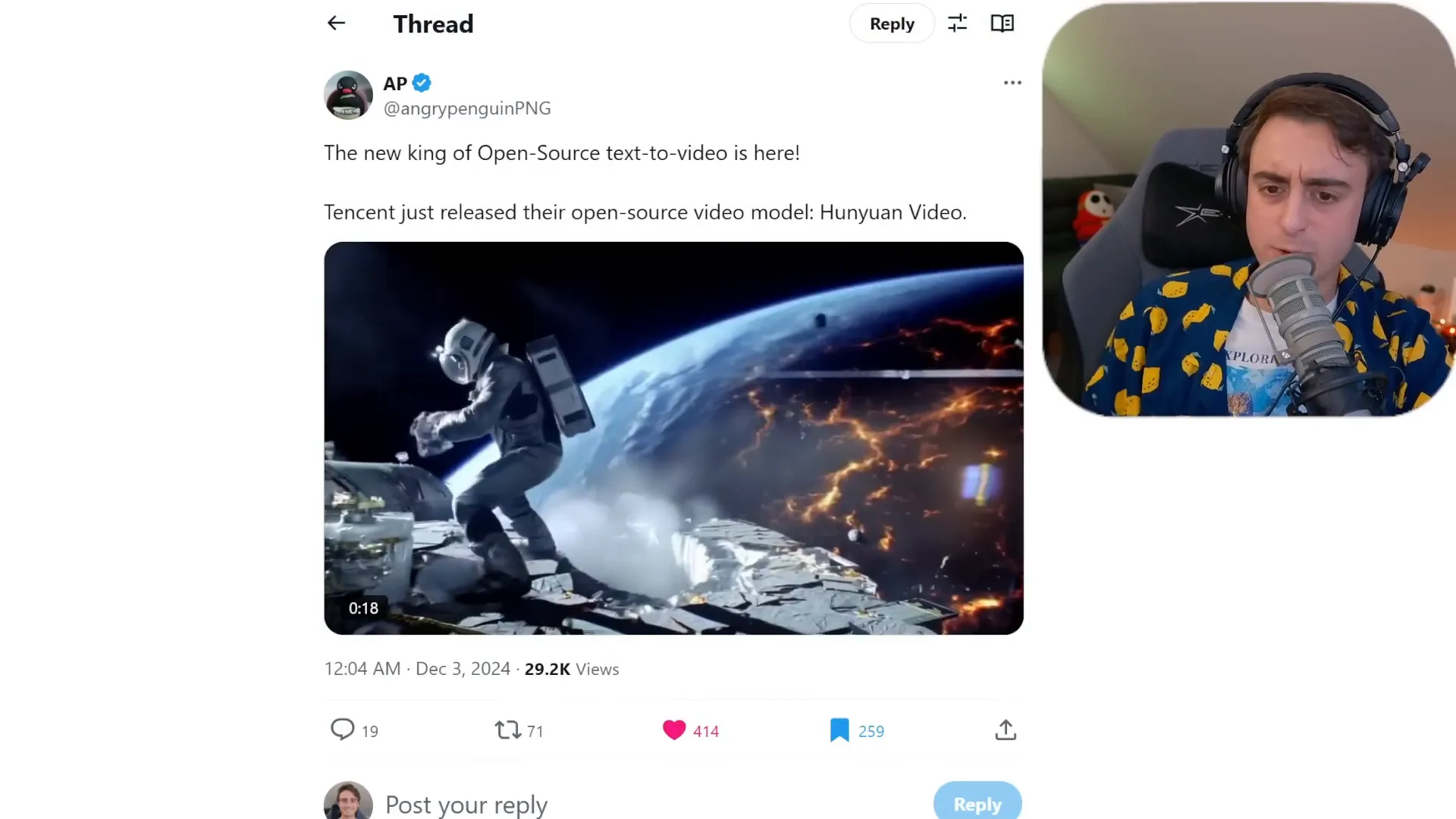

🎥 Tencent's Open Source Video Generation Model

Hold onto your hats, because Tencent has just dropped a bombshell in the world of AI video generation! The company has released HunyuanVideo, a fully open-source video generation model that is raising the bar to astronomical heights. We’re talking top-tier quality that rivals the best in the business!

What Makes Tencent’s Model Stand Out?

This isn’t just any run-of-the-mill model; it’s equipped with an advanced understanding of physics and lighting that makes it a game changer. Picture this: smooth animations, lifelike movements, and stunning visuals that make you question reality. Tencent’s model is designed to produce high-quality videos that can be integrated into various workflows, making it incredibly versatile.

The VRAM Challenge

Now, let’s talk about the elephant in the room: VRAM. As impressive as this model is, it currently demands a whopping sixty gigabytes of VRAM! For those not in the know, that’s a hefty amount of video memory that most consumer-grade GPUs simply can’t handle. But fear not! The open-source community is already on the case, working tirelessly to make this technology accessible to the masses.
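To put that number in perspective, here is a small sketch that compares the 60 GB figure quoted above against a few GPU memory sizes. The card names and capacities in `EXAMPLE_GPUS_GB` are hypothetical placeholders chosen for illustration, not a hardware survey, and the helper name `shortfall_report` is made up for this example.

```python
from typing import Dict

# Figure quoted in this post for the model's current VRAM requirement.
REQUIRED_VRAM_GB = 60

# Hypothetical example cards; capacities are illustrative assumptions.
EXAMPLE_GPUS_GB: Dict[str, int] = {
    "consumer-12GB": 12,
    "consumer-24GB": 24,
    "datacenter-80GB": 80,
}


def shortfall_report(required_gb: int, gpus: Dict[str, int]) -> Dict[str, int]:
    """Return how many GB each card is missing (0 means the model fits)."""
    return {name: max(0, required_gb - capacity) for name, capacity in gpus.items()}


if __name__ == "__main__":
    for name, missing in shortfall_report(REQUIRED_VRAM_GB, EXAMPLE_GPUS_GB).items():
        status = "fits" if missing == 0 else f"short by {missing} GB"
        print(f"{name}: {status}")
```

Under these assumed capacities, a 24 GB card falls 36 GB short, which is the gap that community efforts to slim the model down would need to close.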

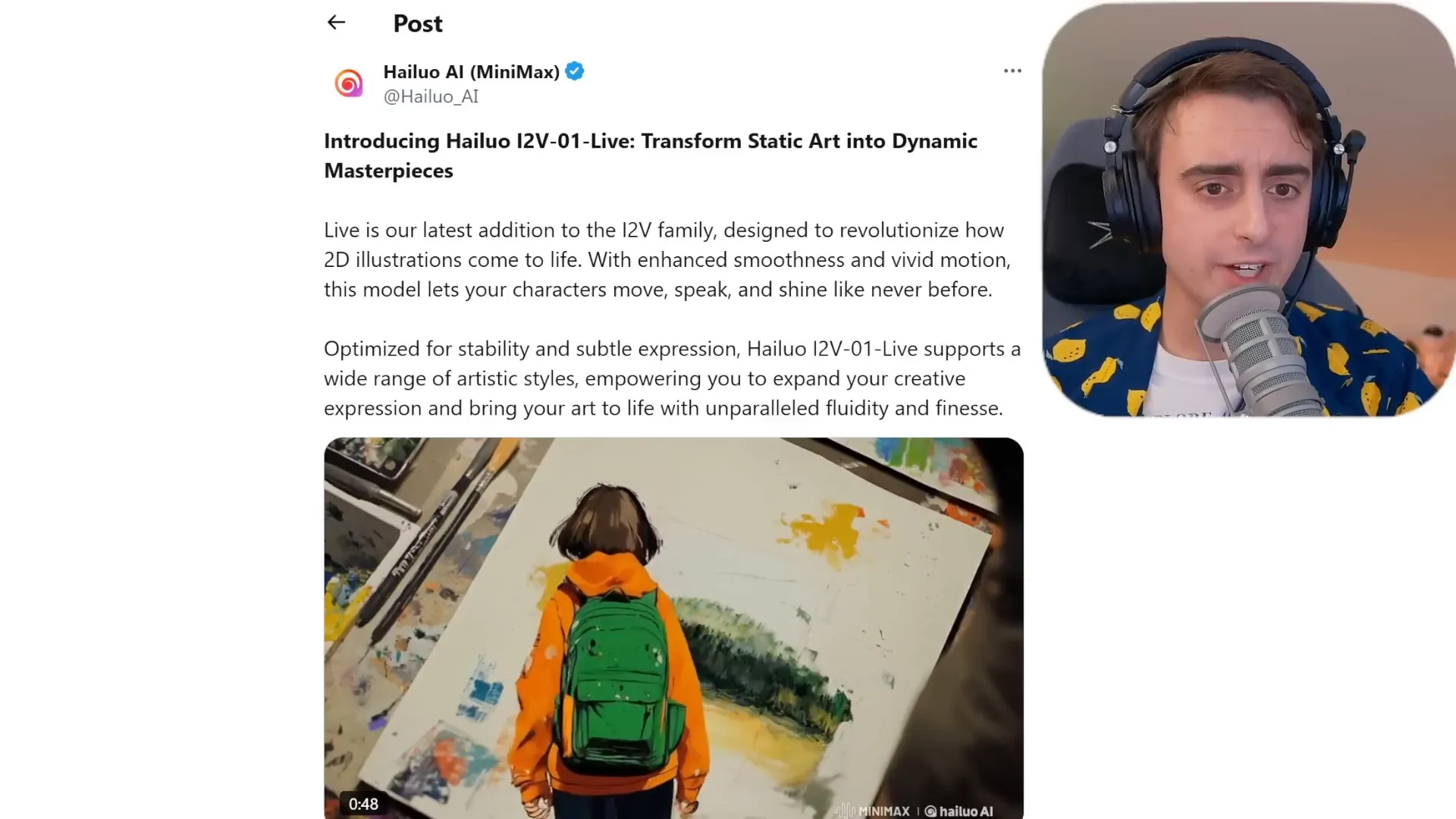

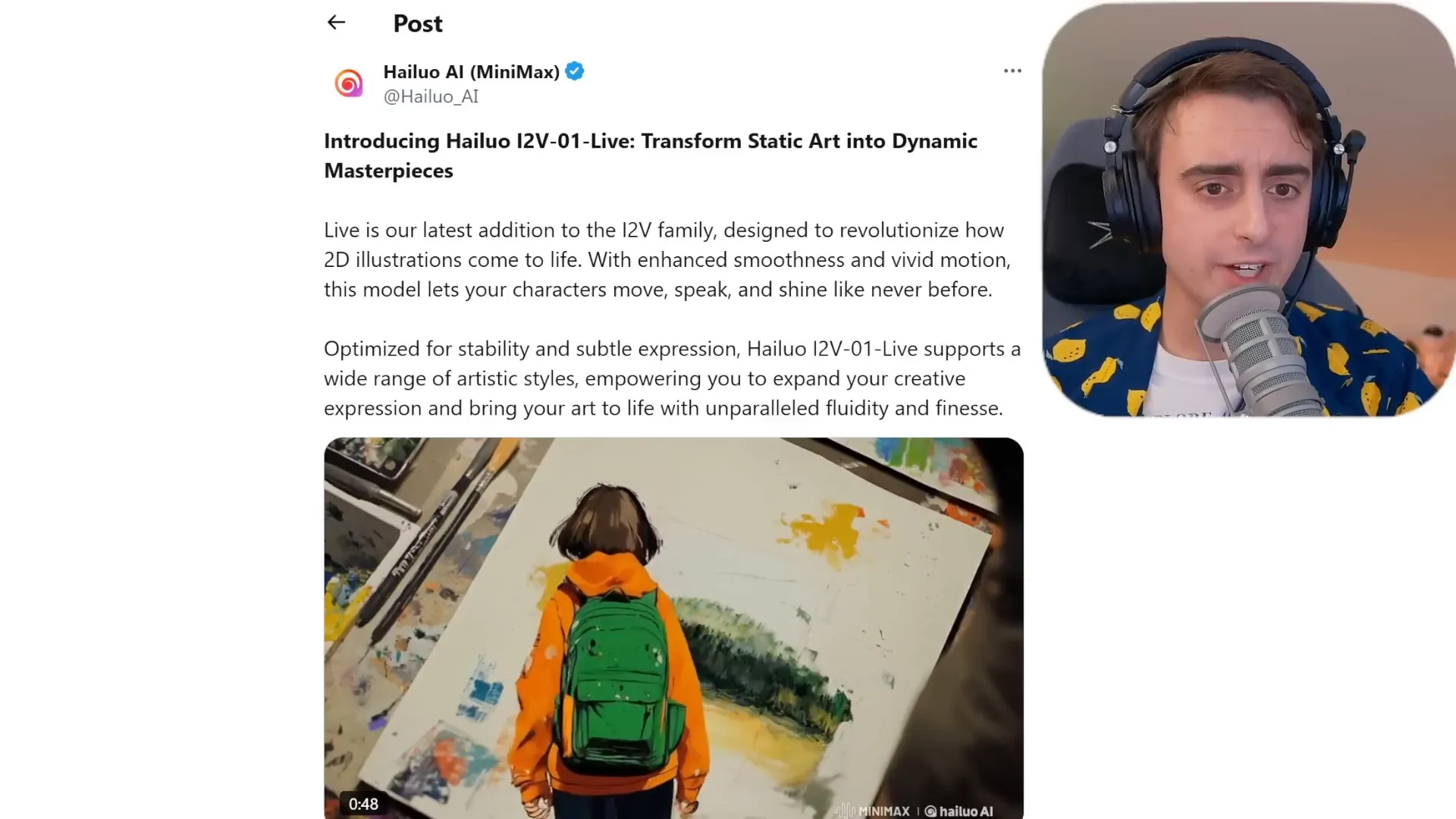

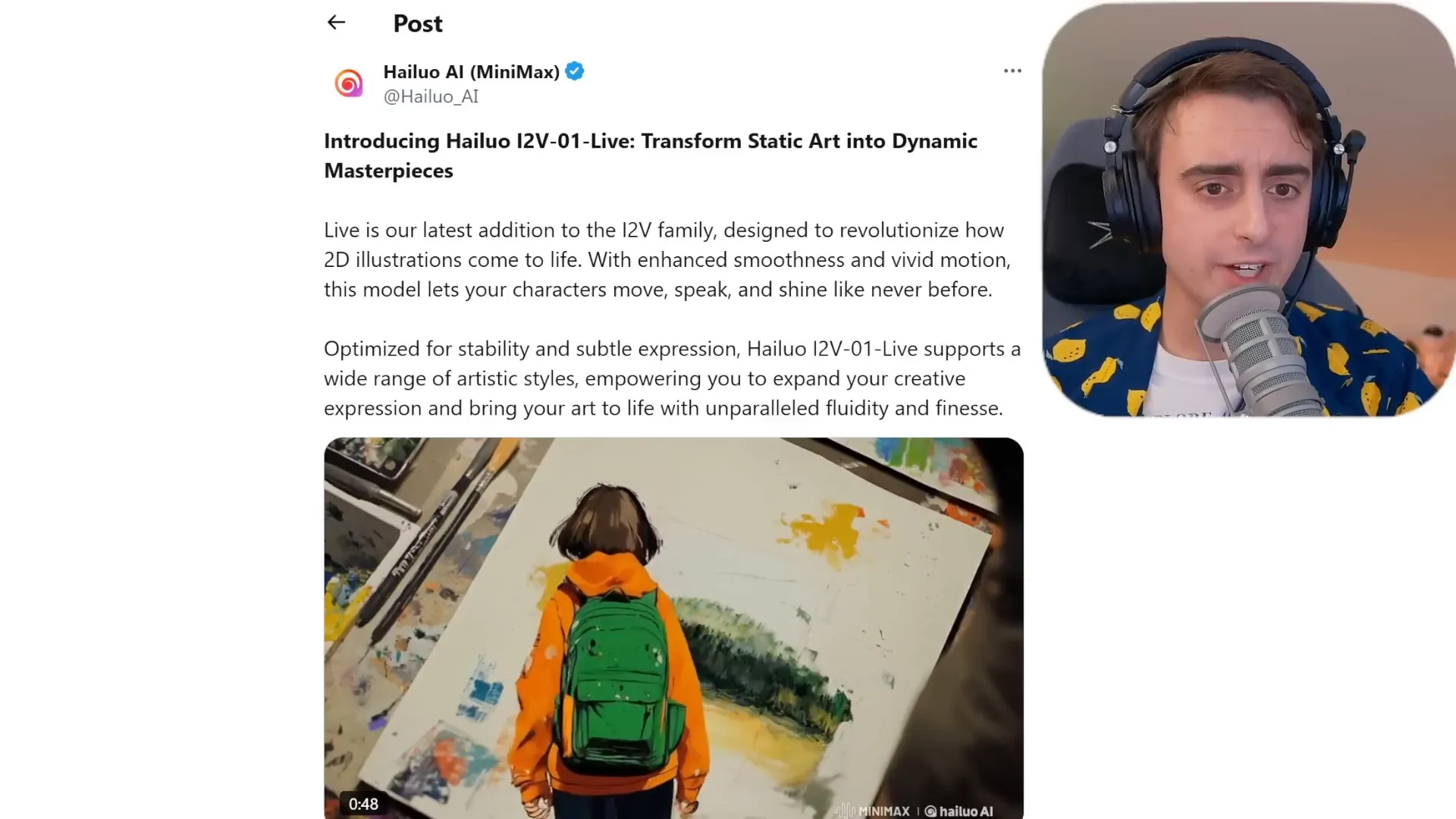

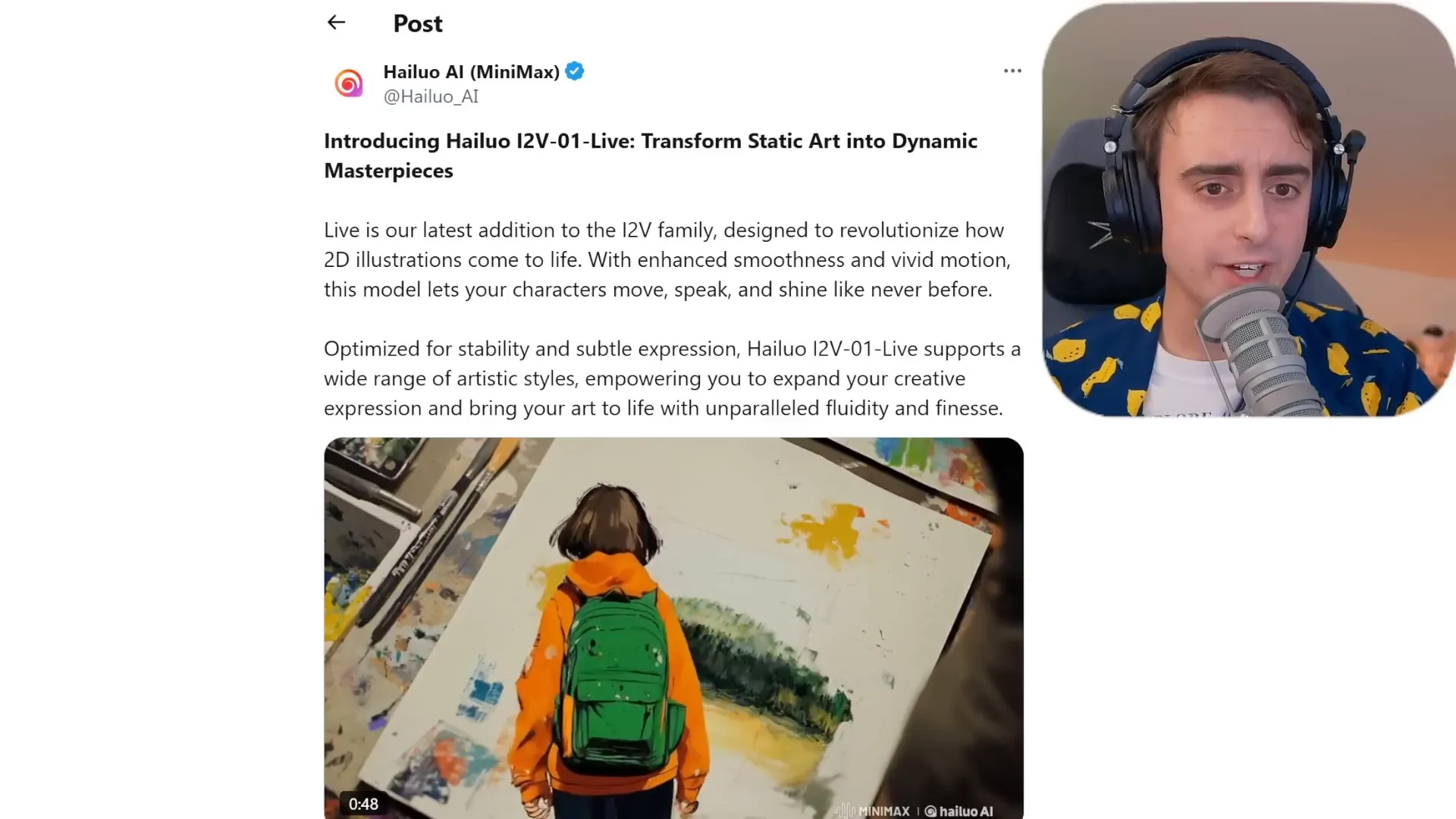

🖌️ Minimax's I2V-01-Live: Dynamic Motion for 2D Art

Next up, we have a thrilling new tool from Minimax, known as I2V-01-Live. This image-to-video model is specifically designed to breathe life into static 2D illustrations. Imagine your favorite characters moving and interacting in ways you never thought possible!

Transforming 2D Art into Motion

I2V-01-Live is not just any animation tool; it’s built for smooth, vivid motion. It takes your static illustrations and transforms them into dynamic visuals, allowing characters to move and even speak! This is a game changer for artists and animators looking to add depth and interaction to their work.

What It Is and Isn’t

Let’s clarify what I2V-01-Live is not. This isn’t a model that takes existing video footage and re-animates it; rather, it’s a specialized tool for 2D art. The focus here is on creating fluid, animated sequences that stay true to the artistic style of the original piece. It’s a marriage of art and technology that genuinely enhances the creative process.

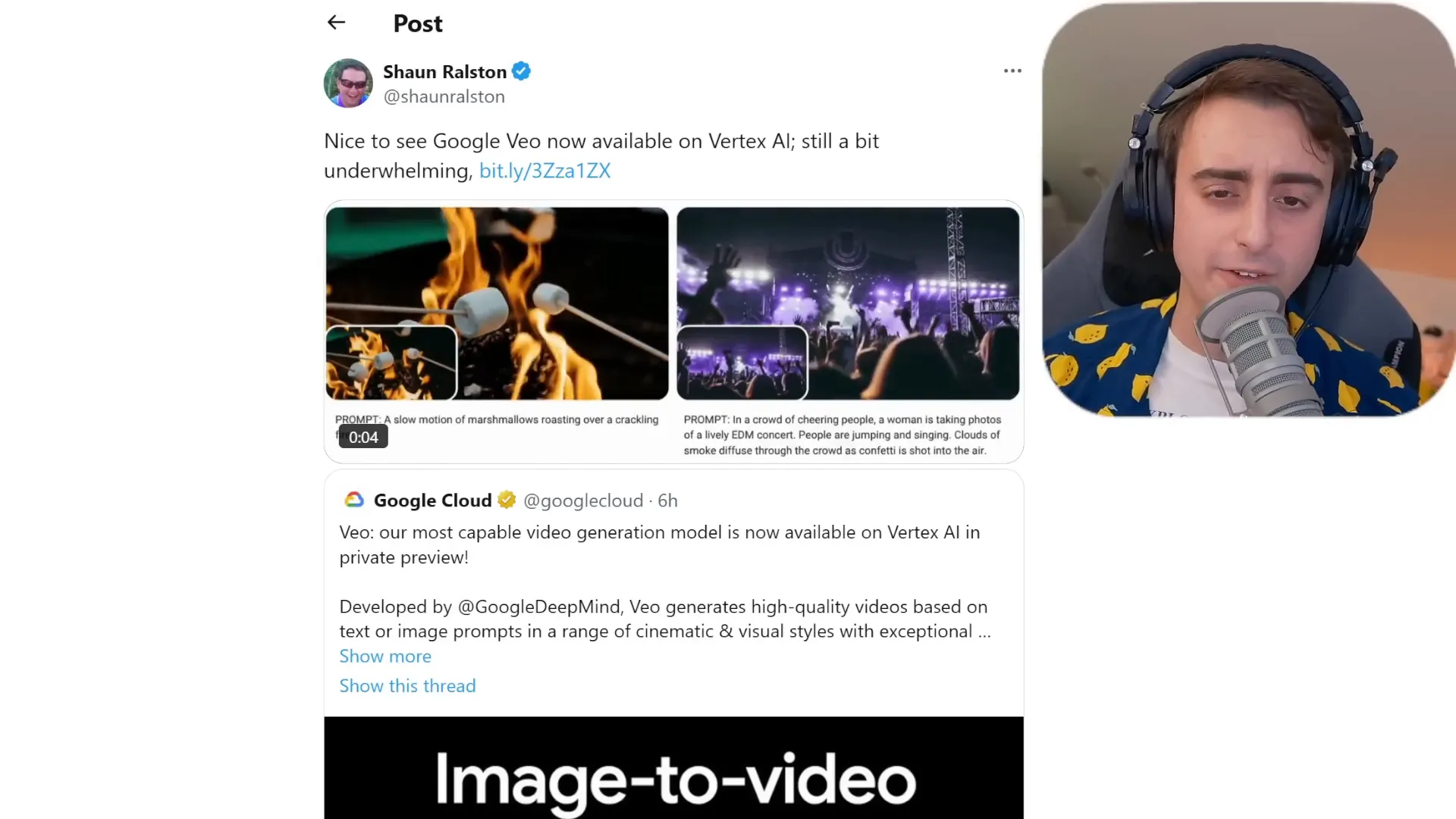

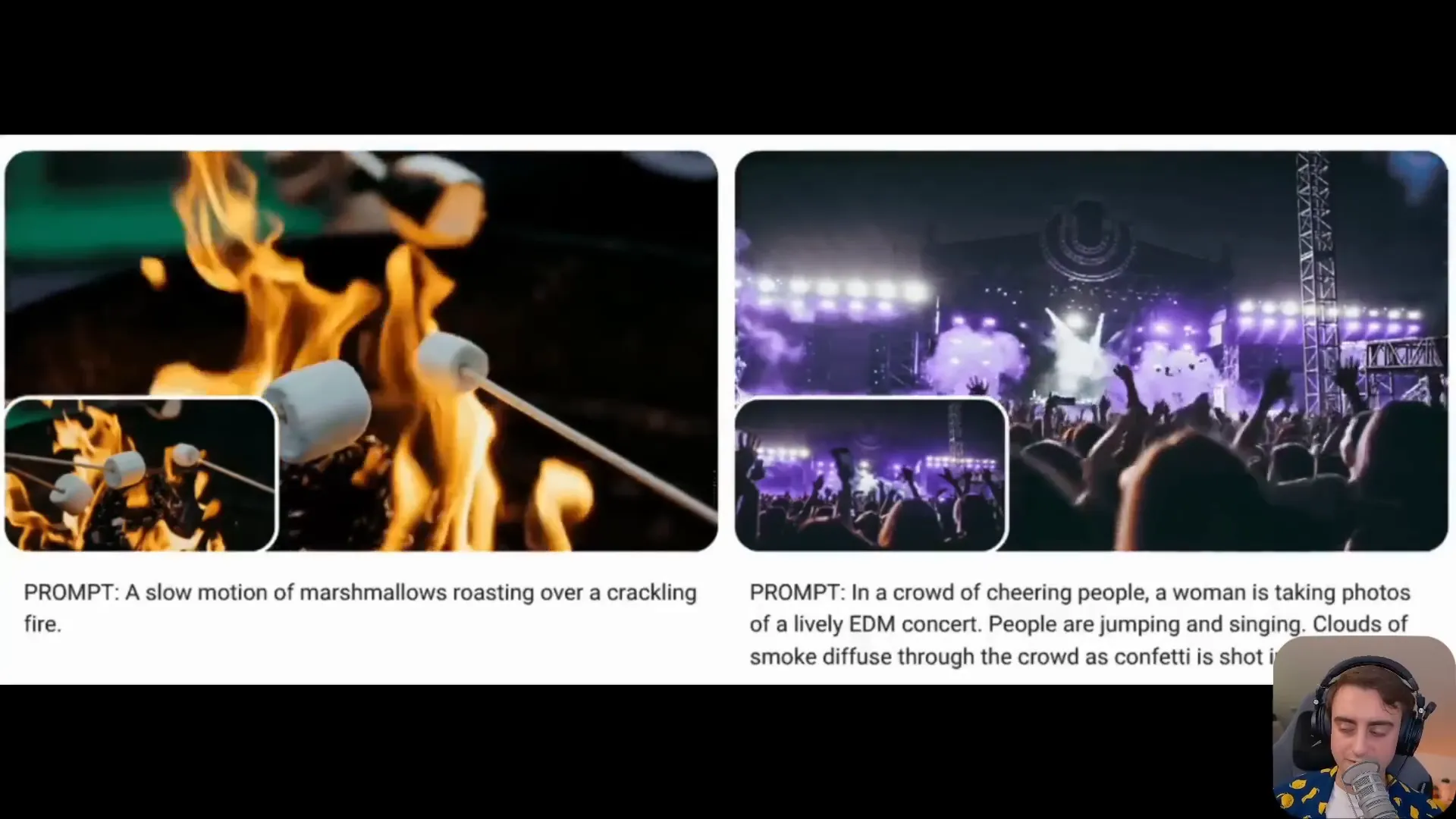

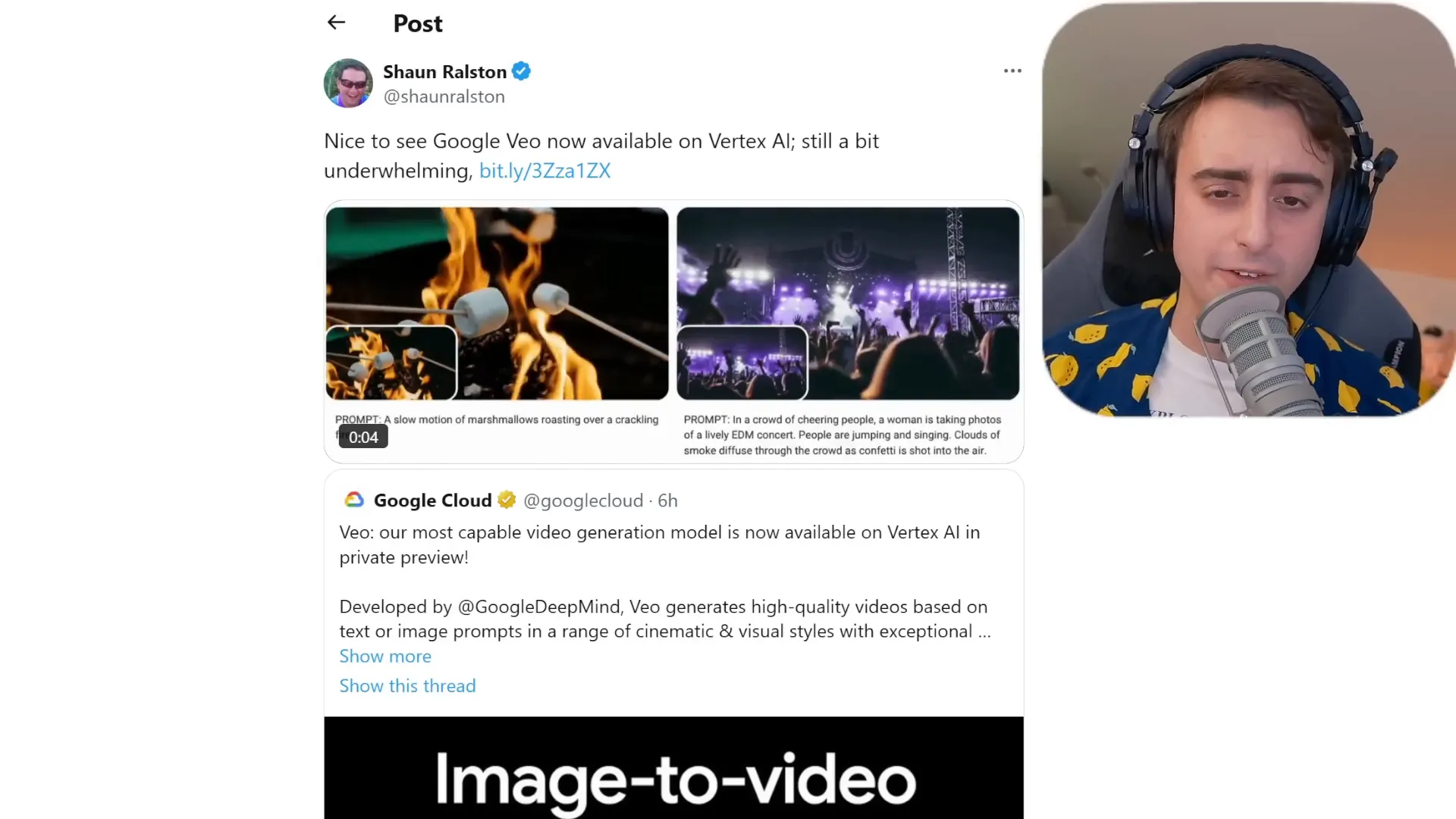

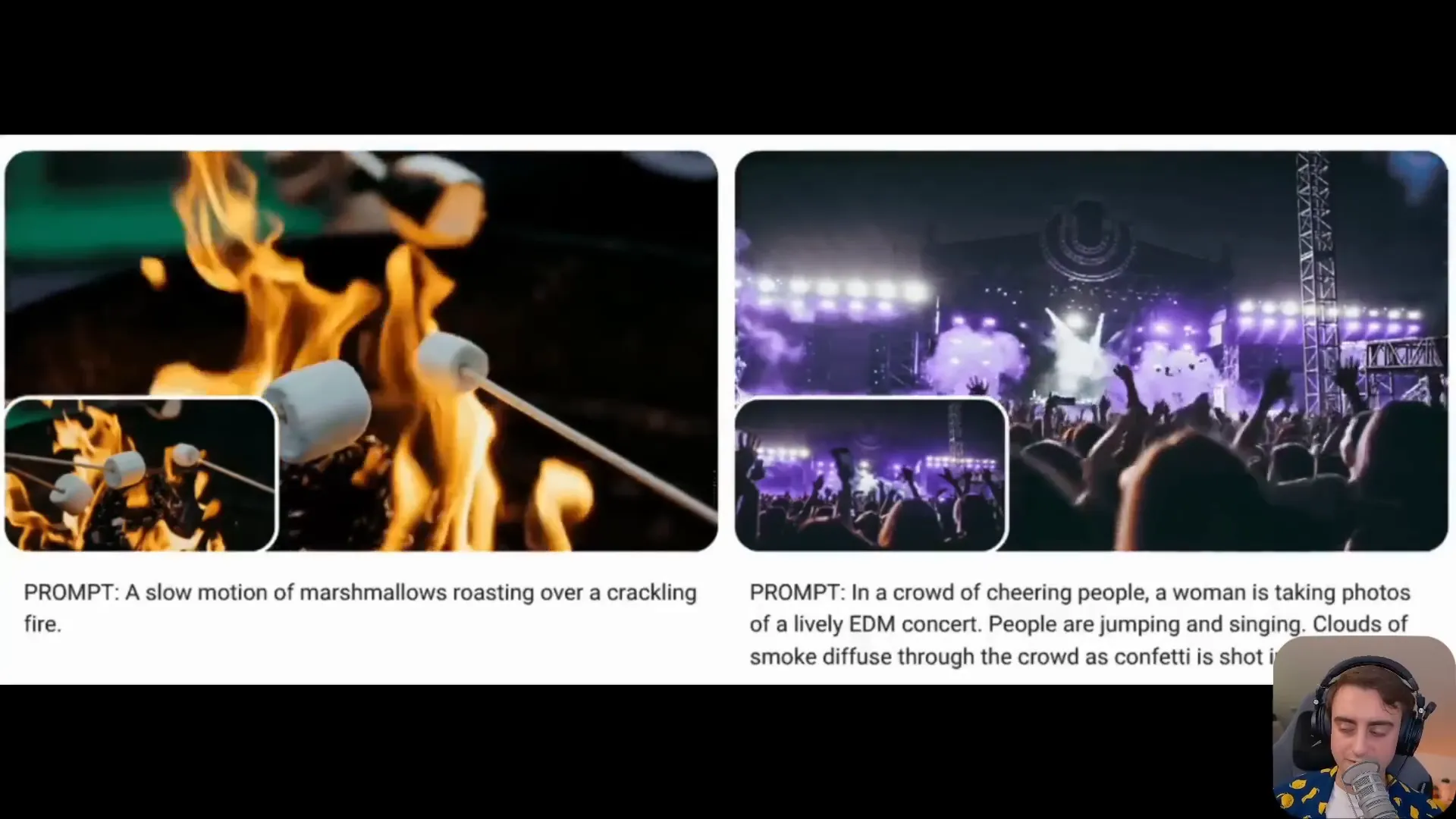

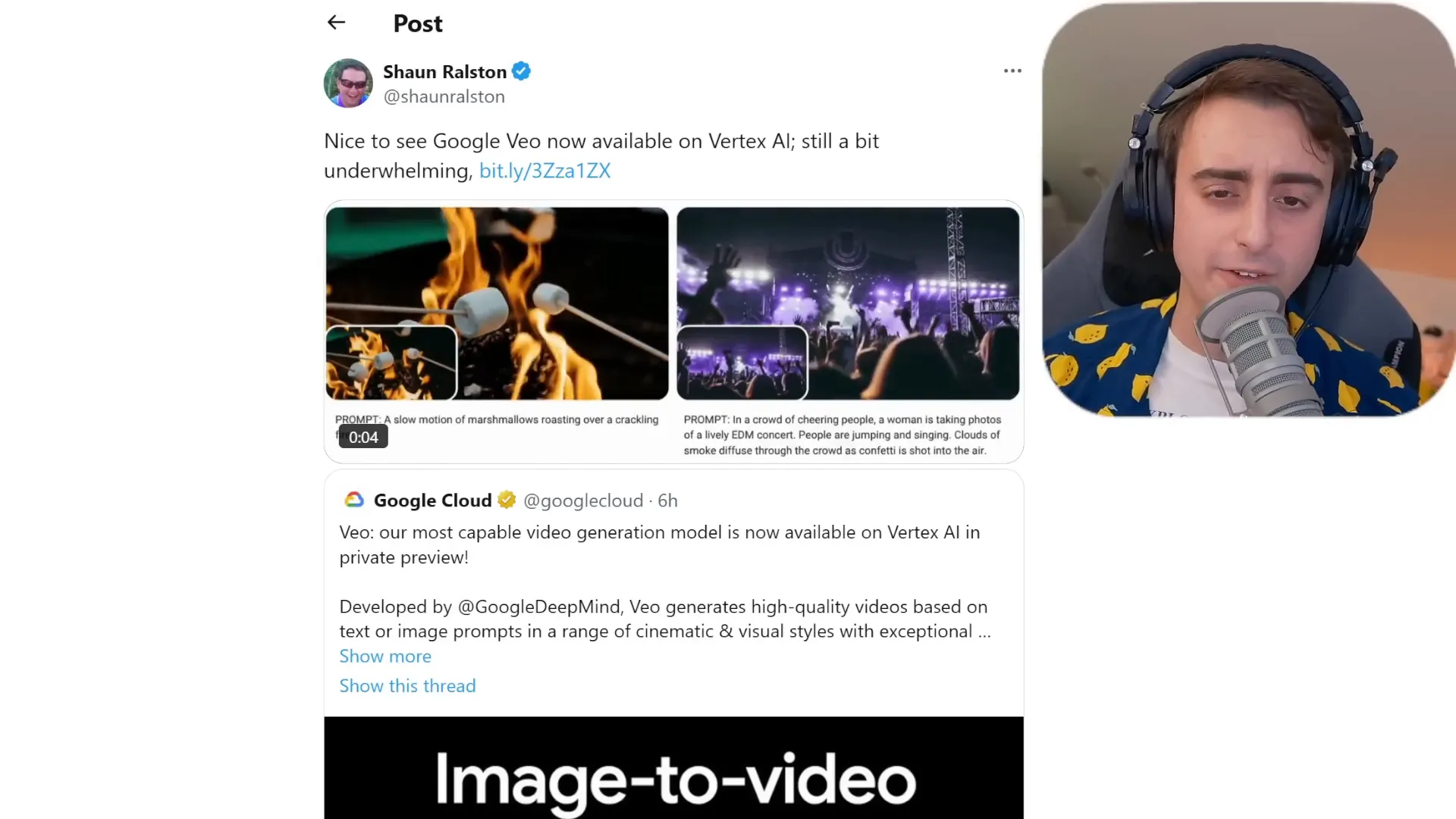

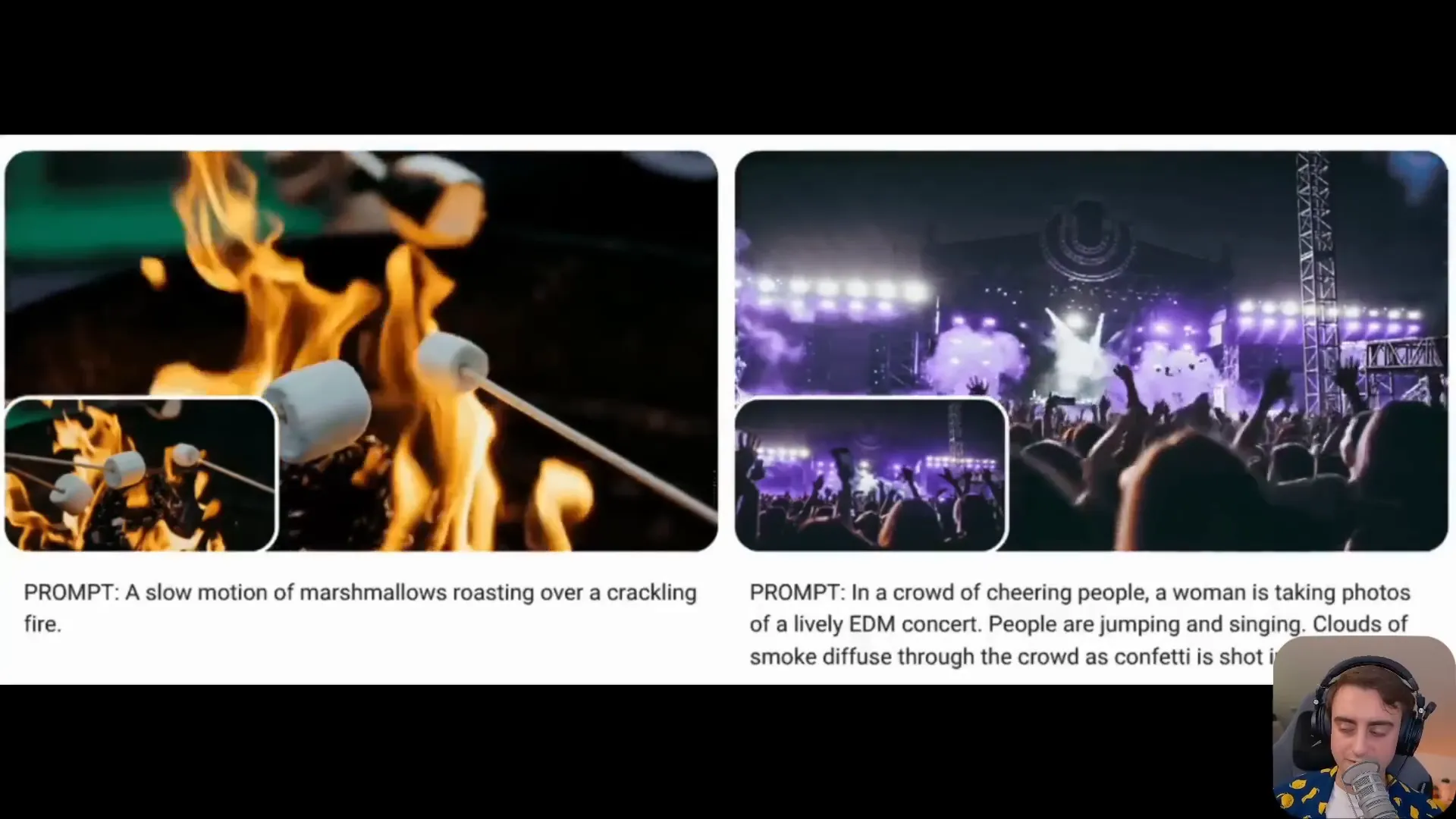

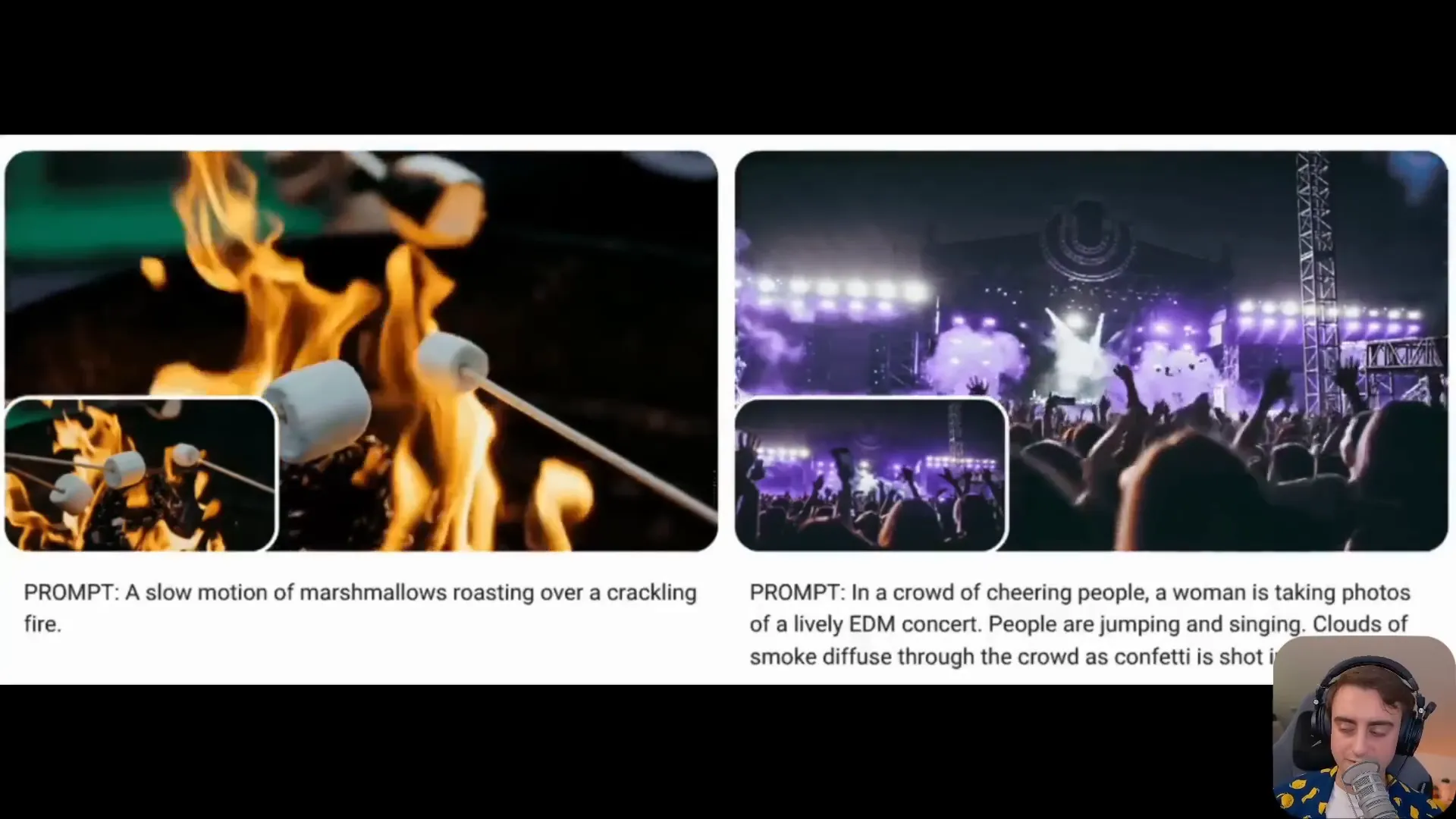

🔊 Google's Veo AI Video Generation Model

Hold your applause, because Google has finally rolled out its Veo AI video generation model on the Vertex AI platform! However, the excitement might be a bit tempered, as this release is currently in private preview. So, what’s the verdict?

Underwhelming or Just Getting Started?

While the initial demos showcase some intriguing capabilities (like slow-motion marshmallows roasting and a lively crowd at an EDM concert), many feel it lacks the pizzazz of newer models. Veo was once the talk of the town, but it seems to have lost some ground in the race. The real test will be how it handles complex prompts and multiple entities interacting in one scene.

The Competition Heats Up

With OpenAI breathing down its neck, Google needs to step up its game. The buzz around the AI community is palpable, and everyone is eagerly awaiting what’s next. Will Veo rise to the occasion, or will it be left in the dust? Only time will tell.

🔮 Upcoming Releases and Final Thoughts

As we look to the future, it’s clear that the AI video generation landscape is evolving at lightning speed. With groundbreaking models from Tencent, Minimax, and Google, the competition is fierce, and the innovation is relentless.

What’s Next?

Keep your eyes peeled, as exciting releases are on the horizon! The buzz around OpenAI’s potential new offerings has everyone on edge, and it seems like this week is going to be monumental for AI advancements. Will we see the release of Sora? Or perhaps more surprises? The anticipation is electric!

Final Thoughts

The future of AI video generation looks incredibly bright. With open-source contributions fostering innovation and competition heating up, creators and developers alike have a treasure trove of tools at their disposal. Buckle up, because we’re just getting started!

In the fast-evolving landscape of AI video generation, new technologies and models are emerging at an unprecedented rate. This blog explores the latest advancements, including Spatiotemporal Skip Guidance, Tencent's groundbreaking open-source model, and much more, highlighting how these innovations are set to enhance video quality and realism.

🚀 Exciting Video Generation Updates

Hold onto your seats, because the world of AI video generation just got a turbo boost! The latest breakthroughs are not just minor tweaks; they’re game changers. With the introduction of Spatiotemporal Skip Guidance (STG), we’re stepping into a new era where video generation models are more precise, realistic, and downright impressive. It’s not just about creating videos anymore; it’s about creating experiences that captivate and engage like never before.

What’s the Buzz About STG?

So, what exactly is Spatiotemporal Skip Guidance? Think of it as your video model’s new best friend. This innovative feature acts as a guiding hand, enhancing the output of video generation models. It can work its magic independently or team up with the classic classifier-free guidance, which is a staple in most image and video generation tools.

Why Should You Care?

The implications are massive! With STG, the details in video outputs are crisper, more lifelike, and much more engaging. Imagine producing videos where smoke billows realistically, and characters appear with depth and expression that almost feels human. This isn’t just an upgrade; it’s a revolution in how we think about video generation.

🌟 Introducing Spatiotemporal Skip Guidance (STG)

Let’s dive deeper into the marvel that is Spatiotemporal Skip Guidance. At its core, STG enhances the temporal consistency of video frames while ensuring that spatial details are not lost. This means that as you watch a video, you’ll notice that movements are smoother and more coherent across frames. The result? A viewing experience that feels seamless and immersive.

How Does STG Work?

STG operates by analyzing the motion dynamics within a video. It intelligently decides how to enhance specific frames based on the overall flow of the video. This ensures that elements like hair movement, facial expressions, and background details are not only preserved but enhanced. The technology behind STG is a blend of cutting-edge algorithms that prioritize detail and realism.

STG’s Impact on Creators

For creators and developers, this means more power at your fingertips. You can produce videos that are not just visually appealing but also rich in detail and emotion. Whether you’re in gaming, filmmaking, or content creation, the ability to harness STG can set your work apart. It’s about pushing boundaries and redefining what’s possible in video generation.

🔍 STG in Action: Detailed Examples

Now, let’s get to the juicy part—real-life examples of STG in action. The results speak for themselves, showcasing the dramatic improvements that STG brings to video generation.

A Closer Look at the Enhancements

Detailed Character Features: Characters rendered with STG look more human-like, capturing nuances in facial expressions and intricate details that were previously absent.

Realistic Environmental Effects: Elements like smoke and lighting are significantly enhanced, adding depth and realism to scenes.

Consistent Motion Across Frames: STG ensures that movements are fluid and consistent, reducing the jarring effects often seen in traditional video generation.

Demonstrating the Power of STG

In one example, a butterfly flutters gracefully across the screen. With traditional models, it might appear blurry or indistinct. However, with STG, every detail—the delicate wings, the antennae, even the colors—are vivid and sharp. It’s a transformation that elevates the entire viewing experience.💥 Impact of STG on AI Video Generation

The introduction of STG is not just a feature; it’s a paradigm shift in AI video generation. For developers, this means a more robust toolkit to create stunning visuals. For users, it translates into richer, more engaging content. The potential applications are vast, and the implications for industries like entertainment, education, and marketing are profound.

Raising the Bar for Quality

With STG, even lower-end models designed for consumer hardware can produce high-quality results. This democratization of technology means that anyone with a decent setup can create videos that were once the domain of high-end studios. Imagine the creativity that will be unleashed!

The Future of AI Video Generation

As STG catches on, we can expect competition to heat up in the AI video generation space. More developers will integrate this technology into their models, leading to an overall increase in quality. The future looks bright, and if the initial results are any indication, we’re just scratching the surface of what’s possible.

🎥 Tencent's Open Source Video Generation Model

Hold onto your hats, because Tencent has just dropped a bombshell in the world of AI video generation! This powerhouse has released a fully open-source video generation model that is raising the bar to astronomical heights. We’re talking top-tier quality that rivals the best in the business!

What Makes Tencent’s Model Stand Out?

This isn’t just any run-of-the-mill model; it’s equipped with an advanced understanding of physics and lighting that makes it a game changer. Picture this: smooth animations, lifelike movements, and stunning visuals that make you question reality. Tencent’s model is designed to produce high-quality videos that can be integrated into various workflows, making it incredibly versatile.

The VRAM Challenge

Now, let’s talk about the elephant in the room: VRAM. As impressive as this model is, it currently demands a whopping sixty gigabytes of VRAM! For those not in the know, that’s a hefty amount of video memory that most consumer-grade GPUs simply can’t handle. But fear not! The open-source community is already on the case, working tirelessly to make this technology accessible to the masses.

🖌️ Minimax's 12v01Live: Dynamic Motion for 2D Art

Next up, we have a thrilling new tool from Minimax, known as 12v01Live. This innovative model is specifically designed to breathe life into static 2D illustrations. Imagine your favorite characters moving and interacting in ways you never thought possible!

Transforming 2D Art into Motion

12v01Live is not just any animation tool; it’s a master at enhancing smoothness and vivid motion. It takes your static illustrations and transforms them into dynamic visuals, allowing characters to move and even speak! This is a game changer for artists and animators looking to add depth and interaction to their work.

What It Is and Isn’t

Let’s clarify what 12v01Live is not. This isn’t a model that takes your video footage and applies motion to it; rather, it’s a specialized tool for 2D art. The focus here is on creating fluid, animated sequences that stay true to the artistic style of the original piece. It’s a marriage of art and technology that genuinely enhances the creative process.

🔊 Google's VO AI Video Generation Model

Hold your applause, because Google has finally rolled out its VO AI video generation model on the Vertex AI platform! However, the excitement might be a bit tempered as this release is currently in private preview. So, what’s the verdict?

Underwhelming or Just Getting Started?

While the initial demos showcase some intriguing capabilities—like slow-motion marshmallows roasting and a lively crowd at an EDM concert—many feel it lacks the pizzazz of newer models. Google VO was once the talk of the town, but it seems to have lost some ground in the race. The real test will be how it handles complex prompts and multiple entities interacting seamlessly.

The Competition Heats Up

With OpenAI breathing down its neck, Google needs to step up its game. The buzz around the AI community is palpable, and everyone is eagerly awaiting what’s next. Will Google VO rise to the occasion, or will it be left in the dust? Only time will tell.

🔮 Upcoming Releases and Final Thoughts

As we look to the future, it’s clear that the AI video generation landscape is evolving at lightning speed. With groundbreaking models from Tencent, Minimax, and Google, the competition is fierce, and the innovation is relentless.

What’s Next?

Keep your eyes peeled, as exciting releases are on the horizon! The buzz around OpenAI’s potential new offerings has everyone on edge, and it seems like this week is going to be monumental for AI advancements. Will we see the release of Sora? Or perhaps more surprises? The anticipation is electric!

Final Thoughts

The future of AI video generation looks incredibly bright. With open-source contributions fostering innovation and competition heating up, creators and developers alike have a treasure trove of tools at their disposal. Buckle up, because we’re just getting started!

In the fast-evolving landscape of AI video generation, new technologies and models are emerging at an unprecedented rate. This blog explores the latest advancements, including Spatiotemporal Skip Guidance, Tencent's groundbreaking open-source model, and much more, highlighting how these innovations are set to enhance video quality and realism.

🚀 Exciting Video Generation Updates

Hold onto your seats, because the world of AI video generation just got a turbo boost! The latest breakthroughs are not just minor tweaks; they’re game changers. With the introduction of Spatiotemporal Skip Guidance (STG), we’re stepping into a new era where video generation models are more precise, realistic, and downright impressive. It’s not just about creating videos anymore; it’s about creating experiences that captivate and engage like never before.

What’s the Buzz About STG?

So, what exactly is Spatiotemporal Skip Guidance? Think of it as your video model’s new best friend. This innovative feature acts as a guiding hand, enhancing the output of video generation models. It can work its magic independently or team up with the classic classifier-free guidance, which is a staple in most image and video generation tools.

Why Should You Care?

The implications are massive! With STG, the details in video outputs are crisper, more lifelike, and much more engaging. Imagine producing videos where smoke billows realistically, and characters appear with depth and expression that almost feels human. This isn’t just an upgrade; it’s a revolution in how we think about video generation.

🌟 Introducing Spatiotemporal Skip Guidance (STG)

Let’s dive deeper into the marvel that is Spatiotemporal Skip Guidance. At its core, STG enhances the temporal consistency of video frames while ensuring that spatial details are not lost. This means that as you watch a video, you’ll notice that movements are smoother and more coherent across frames. The result? A viewing experience that feels seamless and immersive.

How Does STG Work?

STG operates by analyzing the motion dynamics within a video. It intelligently decides how to enhance specific frames based on the overall flow of the video. This ensures that elements like hair movement, facial expressions, and background details are not only preserved but enhanced. The technology behind STG is a blend of cutting-edge algorithms that prioritize detail and realism.

STG’s Impact on Creators

For creators and developers, this means more power at your fingertips. You can produce videos that are not just visually appealing but also rich in detail and emotion. Whether you’re in gaming, filmmaking, or content creation, the ability to harness STG can set your work apart. It’s about pushing boundaries and redefining what’s possible in video generation.

🔍 STG in Action: Detailed Examples

Now, let’s get to the juicy part—real-life examples of STG in action. The results speak for themselves, showcasing the dramatic improvements that STG brings to video generation.

A Closer Look at the Enhancements

Detailed Character Features: Characters rendered with STG look more human-like, capturing nuances in facial expressions and intricate details that were previously absent.

Realistic Environmental Effects: Elements like smoke and lighting are significantly enhanced, adding depth and realism to scenes.

Consistent Motion Across Frames: STG ensures that movements are fluid and consistent, reducing the jarring effects often seen in traditional video generation.

Demonstrating the Power of STG

In one example, a butterfly flutters gracefully across the screen. With traditional models, it might appear blurry or indistinct. However, with STG, every detail—the delicate wings, the antennae, even the colors—are vivid and sharp. It’s a transformation that elevates the entire viewing experience.💥 Impact of STG on AI Video Generation

The introduction of STG is not just a feature; it’s a paradigm shift in AI video generation. For developers, this means a more robust toolkit to create stunning visuals. For users, it translates into richer, more engaging content. The potential applications are vast, and the implications for industries like entertainment, education, and marketing are profound.

Raising the Bar for Quality

With STG, even lower-end models designed for consumer hardware can produce high-quality results. This democratization of technology means that anyone with a decent setup can create videos that were once the domain of high-end studios. Imagine the creativity that will be unleashed!

The Future of AI Video Generation

As STG catches on, we can expect competition to heat up in the AI video generation space. More developers will integrate this technology into their models, leading to an overall increase in quality. The future looks bright, and if the initial results are any indication, we’re just scratching the surface of what’s possible.

🎥 Tencent's Open Source Video Generation Model

Hold onto your hats, because Tencent has just dropped a bombshell in the world of AI video generation! This powerhouse has released a fully open-source video generation model that is raising the bar to astronomical heights. We’re talking top-tier quality that rivals the best in the business!

What Makes Tencent’s Model Stand Out?

This isn’t just any run-of-the-mill model; it’s equipped with an advanced understanding of physics and lighting that makes it a game changer. Picture this: smooth animations, lifelike movements, and stunning visuals that make you question reality. Tencent’s model is designed to produce high-quality videos that can be integrated into various workflows, making it incredibly versatile.

The VRAM Challenge

Now, let’s talk about the elephant in the room: VRAM. As impressive as this model is, it currently demands a whopping sixty gigabytes of VRAM! For those not in the know, that’s a hefty amount of video memory that most consumer-grade GPUs simply can’t handle. But fear not! The open-source community is already on the case, working tirelessly to make this technology accessible to the masses.

🖌️ Minimax's 12v01Live: Dynamic Motion for 2D Art

Next up, we have a thrilling new tool from Minimax, known as 12v01Live. This innovative model is specifically designed to breathe life into static 2D illustrations. Imagine your favorite characters moving and interacting in ways you never thought possible!

Transforming 2D Art into Motion

12v01Live is not just any animation tool; it’s a master at enhancing smoothness and vivid motion. It takes your static illustrations and transforms them into dynamic visuals, allowing characters to move and even speak! This is a game changer for artists and animators looking to add depth and interaction to their work.

What It Is and Isn’t

Let’s clarify what 12v01Live is not. This isn’t a model that takes your video footage and applies motion to it; rather, it’s a specialized tool for 2D art. The focus here is on creating fluid, animated sequences that stay true to the artistic style of the original piece. It’s a marriage of art and technology that genuinely enhances the creative process.

🔊 Google's VO AI Video Generation Model

Hold your applause, because Google has finally rolled out its VO AI video generation model on the Vertex AI platform! However, the excitement might be a bit tempered as this release is currently in private preview. So, what’s the verdict?

Underwhelming or Just Getting Started?

While the initial demos showcase some intriguing capabilities—like slow-motion marshmallows roasting and a lively crowd at an EDM concert—many feel it lacks the pizzazz of newer models. Google VO was once the talk of the town, but it seems to have lost some ground in the race. The real test will be how it handles complex prompts and multiple entities interacting seamlessly.

The Competition Heats Up

With OpenAI breathing down its neck, Google needs to step up its game. The buzz around the AI community is palpable, and everyone is eagerly awaiting what’s next. Will Google VO rise to the occasion, or will it be left in the dust? Only time will tell.

🔮 Upcoming Releases and Final Thoughts

As we look to the future, it’s clear that the AI video generation landscape is evolving at lightning speed. With groundbreaking models from Tencent, Minimax, and Google, the competition is fierce, and the innovation is relentless.

What’s Next?

Keep your eyes peeled, as exciting releases are on the horizon! The buzz around OpenAI’s potential new offerings has everyone on edge, and it seems like this week is going to be monumental for AI advancements. Will we see the release of Sora? Or perhaps more surprises? The anticipation is electric!

Final Thoughts

The future of AI video generation looks incredibly bright. With open-source contributions fostering innovation and competition heating up, creators and developers alike have a treasure trove of tools at their disposal. Buckle up, because we’re just getting started!

In the fast-evolving landscape of AI video generation, new technologies and models are emerging at an unprecedented rate. This blog explores the latest advancements, including Spatiotemporal Skip Guidance, Tencent's groundbreaking open-source model, and much more, highlighting how these innovations are set to enhance video quality and realism.

🚀 Exciting Video Generation Updates

Hold onto your seats, because the world of AI video generation just got a turbo boost! The latest breakthroughs are not just minor tweaks; they’re game changers. With the introduction of Spatiotemporal Skip Guidance (STG), we’re stepping into a new era where video generation models are more precise, realistic, and downright impressive. It’s not just about creating videos anymore; it’s about creating experiences that captivate and engage like never before.

What’s the Buzz About STG?

So, what exactly is Spatiotemporal Skip Guidance? Think of it as your video model’s new best friend. This innovative feature acts as a guiding hand, enhancing the output of video generation models. It can work its magic independently or team up with the classic classifier-free guidance, which is a staple in most image and video generation tools.

Why Should You Care?

The implications are massive! With STG, the details in video outputs are crisper, more lifelike, and much more engaging. Imagine producing videos where smoke billows realistically, and characters appear with depth and expression that almost feels human. This isn’t just an upgrade; it’s a revolution in how we think about video generation.

🌟 Introducing Spatiotemporal Skip Guidance (STG)

Let’s dive deeper into the marvel that is Spatiotemporal Skip Guidance. At its core, STG enhances the temporal consistency of video frames while ensuring that spatial details are not lost. This means that as you watch a video, you’ll notice that movements are smoother and more coherent across frames. The result? A viewing experience that feels seamless and immersive.

How Does STG Work?

STG operates by analyzing the motion dynamics within a video. It intelligently decides how to enhance specific frames based on the overall flow of the video. This ensures that elements like hair movement, facial expressions, and background details are not only preserved but enhanced. The technology behind STG is a blend of cutting-edge algorithms that prioritize detail and realism.

STG’s Impact on Creators

For creators and developers, this means more power at your fingertips. You can produce videos that are not just visually appealing but also rich in detail and emotion. Whether you’re in gaming, filmmaking, or content creation, the ability to harness STG can set your work apart. It’s about pushing boundaries and redefining what’s possible in video generation.

🔍 STG in Action: Detailed Examples

Now, let’s get to the juicy part—real-life examples of STG in action. The results speak for themselves, showcasing the dramatic improvements that STG brings to video generation.

A Closer Look at the Enhancements

Detailed Character Features: Characters rendered with STG look more human-like, capturing nuances in facial expressions and intricate details that were previously absent.

Realistic Environmental Effects: Elements like smoke and lighting are significantly enhanced, adding depth and realism to scenes.

Consistent Motion Across Frames: STG ensures that movements are fluid and consistent, reducing the jarring effects often seen in traditional video generation.

Demonstrating the Power of STG

In one example, a butterfly flutters gracefully across the screen. With traditional models, it might appear blurry or indistinct. However, with STG, every detail—the delicate wings, the antennae, even the colors—are vivid and sharp. It’s a transformation that elevates the entire viewing experience.💥 Impact of STG on AI Video Generation

The introduction of STG is not just a feature; it’s a paradigm shift in AI video generation. For developers, this means a more robust toolkit to create stunning visuals. For users, it translates into richer, more engaging content. The potential applications are vast, and the implications for industries like entertainment, education, and marketing are profound.

Raising the Bar for Quality

With STG, even lower-end models designed for consumer hardware can produce high-quality results. This democratization of technology means that anyone with a decent setup can create videos that were once the domain of high-end studios. Imagine the creativity that will be unleashed!

The Future of AI Video Generation

As STG catches on, we can expect competition to heat up in the AI video generation space. More developers will integrate this technology into their models, leading to an overall increase in quality. The future looks bright, and if the initial results are any indication, we’re just scratching the surface of what’s possible.

🎥 Tencent's Open Source Video Generation Model

Hold onto your hats, because Tencent has just dropped a bombshell in the world of AI video generation! The company has released HunyuanVideo, a fully open-source video generation model that is raising the bar to astronomical heights. We’re talking top-tier quality that rivals the best in the business!

What Makes Tencent’s Model Stand Out?

This isn’t just any run-of-the-mill model; its outputs show a strong grasp of physics and lighting, which makes it a game changer. Picture this: smooth animations, lifelike movements, and stunning visuals that make you question reality. Tencent’s model is designed to produce high-quality videos and, because it is open source, it can be slotted into a variety of workflows.

The VRAM Challenge

Now, let’s talk about the elephant in the room: VRAM. As impressive as this model is, it currently demands a whopping sixty gigabytes of VRAM! For those not in the know, that’s a hefty amount of video memory that most consumer-grade GPUs simply can’t handle. But fear not! The open-source community is already on the case, working tirelessly to make this technology accessible to the masses.
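To put that VRAM figure in perspective, here is a rough back-of-the-envelope sketch. It compares the 60 GB requirement quoted above against a few hypothetical card sizes, and shows how storing weights at lower precision shrinks their footprint. The 13-billion parameter count and the card list are assumptions for illustration only, and weights are just one part of total memory use.

```python
REQUIRED_VRAM_GB = 60  # figure quoted in the article


def weights_gb(num_params, bytes_per_param):
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits(gpu_vram_gb, required_gb=REQUIRED_VRAM_GB):
    """True if a card has at least the required amount of VRAM."""
    return gpu_vram_gb >= required_gb


# Hypothetical card sizes, not specific products.
gpus = {"24 GB consumer card": 24, "48 GB workstation card": 48, "80 GB data-center card": 80}
report = {name: fits(vram) for name, vram in gpus.items()}

# Assumed parameter count, for illustration only.
ASSUMED_PARAMS = 13_000_000_000
for label, nbytes in [("16-bit", 2), ("8-bit", 1)]:
    print(f"{label} weights: ~{weights_gb(ASSUMED_PARAMS, nbytes):.0f} GB")

for name, ok in report.items():
    print(f"{name}: {'fits' if ok else 'does not fit'} the 60 GB requirement")
```

This is the kind of arithmetic behind community efforts to shrink memory use: halving the bytes per weight halves the weight footprint, though activations and other buffers still add to the total.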

🖌️ MiniMax's I2V-01-Live: Dynamic Motion for 2D Art

Next up, we have a thrilling new tool from MiniMax, known as I2V-01-Live. This image-to-video model is specifically designed to breathe life into static 2D illustrations. Imagine your favorite characters moving and interacting in ways you never thought possible!

Transforming 2D Art into Motion

I2V-01-Live is not just any animation tool; it’s built for smooth, vivid motion. It takes your static illustrations and transforms them into dynamic visuals, allowing characters to move and even speak! This is a game changer for artists and animators looking to add depth and interaction to their work.

What It Is and Isn’t

Let’s clarify what I2V-01-Live is not. This isn’t a tool for reworking existing video footage; rather, it’s a specialized model for animating 2D art. The focus here is on creating fluid, animated sequences that stay true to the artistic style of the original piece. It’s a marriage of art and technology that genuinely enhances the creative process.

🔊 Google's Veo AI Video Generation Model

Hold your applause, because Google has finally rolled out its Veo video generation model on the Vertex AI platform! However, the excitement might be a bit tempered, as this release is currently in private preview. So, what’s the verdict?

Underwhelming or Just Getting Started?

While the initial demos showcase some intriguing capabilities, like slow-motion marshmallows roasting and a lively crowd at an EDM concert, many feel it lacks the pizzazz of newer models. Veo was once the talk of the town, but it seems to have lost some ground in the race. The real test will be how it handles complex prompts and multiple entities interacting seamlessly.

The Competition Heats Up

With OpenAI breathing down its neck, Google needs to step up its game. The buzz around the AI community is palpable, and everyone is eagerly awaiting what’s next. Will Veo rise to the occasion, or will it be left in the dust? Only time will tell.

🔮 Upcoming Releases and Final Thoughts

As we look to the future, it’s clear that the AI video generation landscape is evolving at lightning speed. With groundbreaking models from Tencent, MiniMax, and Google, the competition is fierce, and the innovation is relentless.

What’s Next?

Keep your eyes peeled, as exciting releases are on the horizon! The buzz around OpenAI’s potential new offerings has everyone on edge, and it seems like this week is going to be monumental for AI advancements. Will we see the release of Sora? Or perhaps more surprises? The anticipation is electric!

Final Thoughts

The future of AI video generation looks incredibly bright. With open-source contributions fostering innovation and competition heating up, creators and developers alike have a treasure trove of tools at their disposal. Buckle up, because we’re just getting started!